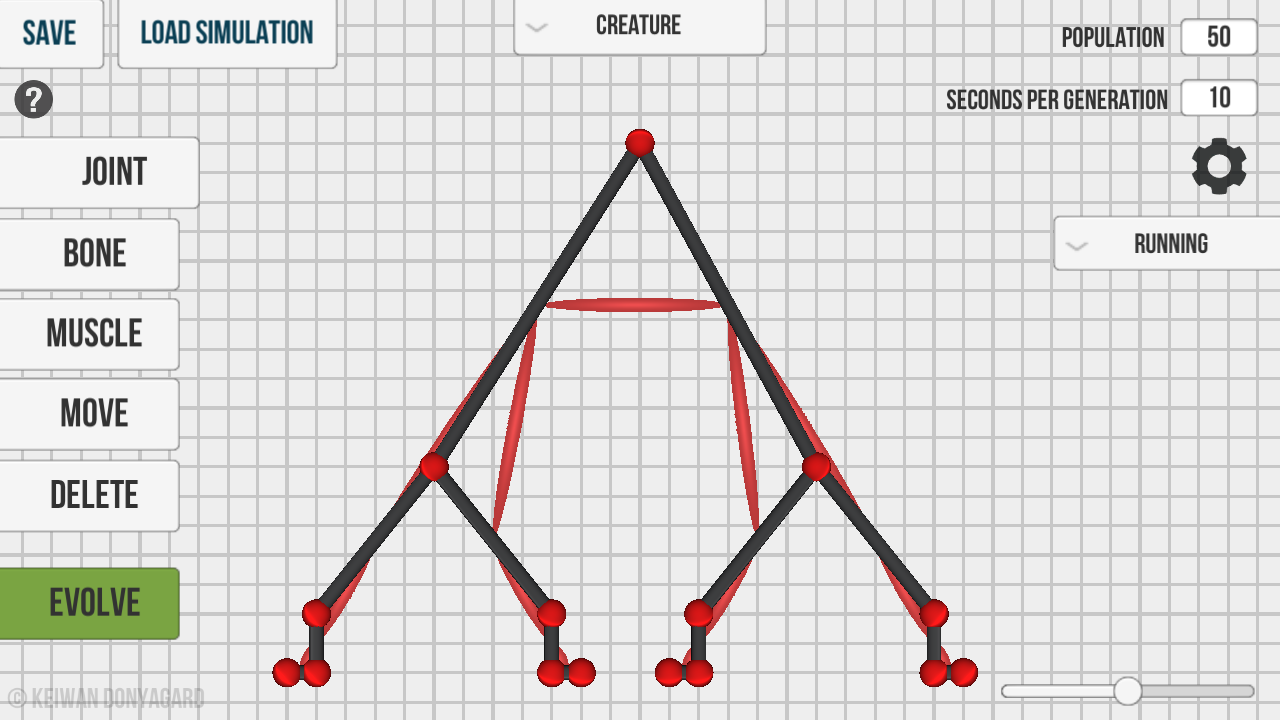

It would be nice to be able to select multiple pieces together and move them as a unit. Perhaps a rectangular drag-to-select tool, so that assembled "parts" could be moved around in relation to other parts. Moving things around one joint at a time is pretty tedious, and at certain levels of complexity it can be almost impossible to move something you've already made.

An 'undo' button that reverses the last change would be a big help as well. I've completely ruined a creature by deleting the wrong bundle of bones and muscles.