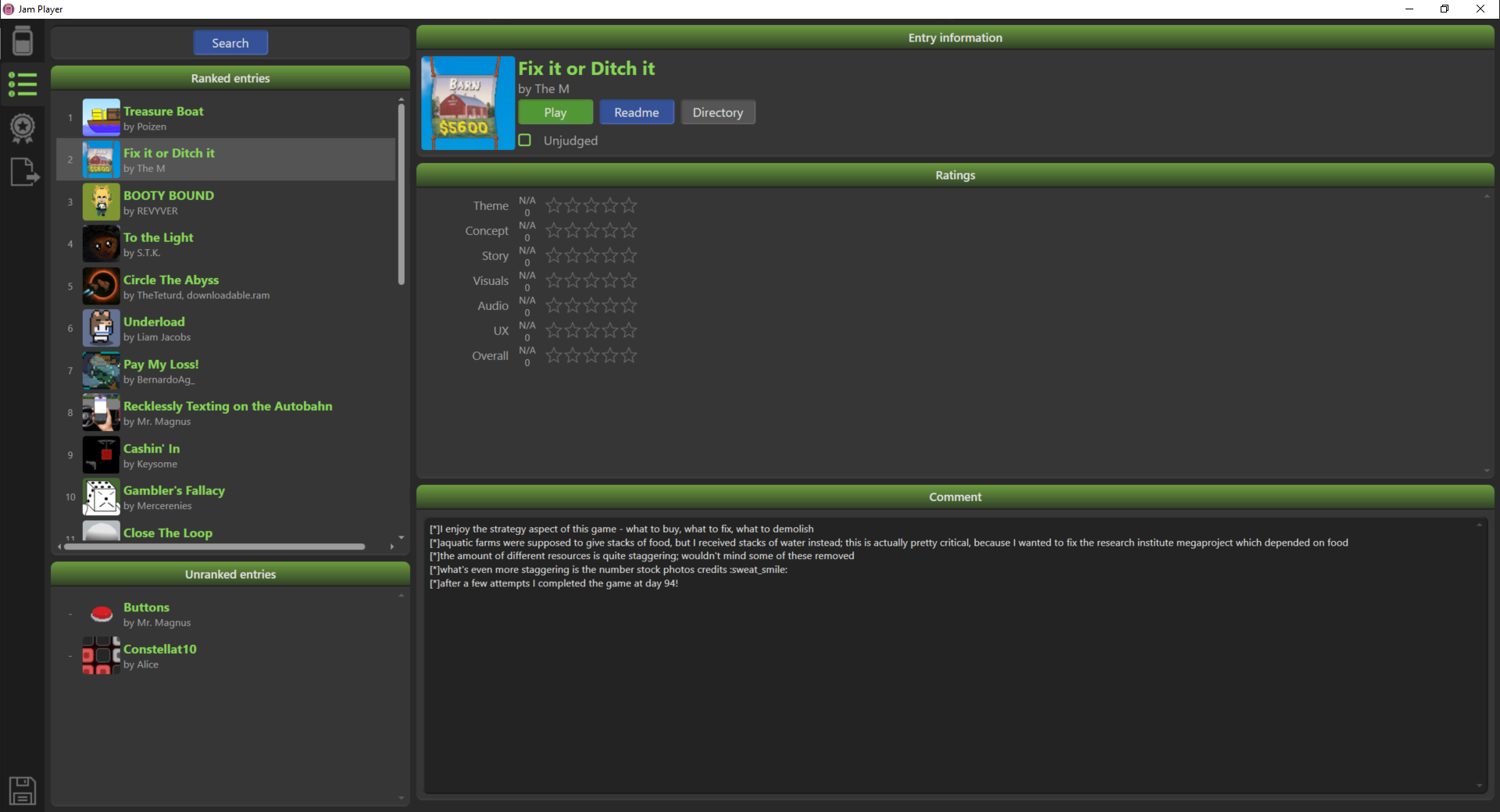

In the repository I linked, there's a separate Jam Packager project that handles editing and saving all the relevant metadata (plus some quality-of-life features, like automatically unzipping all the ZIPs of the downloaded entries). Once you save the metadata, you can open the Jam Player from within the folder containing the "jam.jaminfo" file, and it'll show the entries correctly.

Alice


Recent community posts

It's because the tool was built with Windows Presentation Foundation, a .NET UI framework that specifically targets Windows. It's been good enough so far, since the jams it's used for require a Windows or web build anyway.

I plan to port it over to Avalonia, which is cross-platform, but it will take quite a lot of effort, testing and tinkering to get there. Since it's currently a one-person side project, I haven't been able to make the shift yet.

Yeah, Tuesday would be fine. At least participants would get two weekends' worth of time instead of one, so they could get through quite a few games. And yes, I know that in itch.io jams you don't need to rate games, but I prefer to play a whole bunch of them if time permits. ^^

Speaking of rating queues: does itch.io have a system for these in the first place? Either way, to help games get rated semi-evenly, I think the Jam Player I mentioned in another thread might come in handy, although it handles only Windows and web games. Then again, if a game is Linux-only or Mac-only, people on Windows wouldn't be able to judge it anyway. If you're interested in using the Jam Player for this jam and think there are some relatively small features that could be implemented to make this jam easier, feel free to let me know. I can also gather the entries after the jam and prepare a ZIP; hopefully it won't take more than a day.

So, here's a little project I've been maintaining for a while: the Jam Player. It's a launcher that lets you play and review locally downloaded or web-linked games with little friction, while keeping the ratings and reviews in one place. Currently it's Windows-only, and it can run Windows executables and open webpages. I'd like to eventually base it on Avalonia so it's cross-platform, but that's a long way off for now. At this moment it might not be quite feasible for jams on the scale of Ludum Dare, but it might be manageable for a jam with tens of entries, or even over a hundred, especially if all the downloaded games amount to no more than a gigabyte.

You can find the repository with Jam Player and related tools here: https://github.com/Alphish/game-jam-tools

And here's what it looks like in practice:

It was designed for a GameMaker Community Jam, which is hosted on forum software. That informed what kinds of features are available and how they're put together (including an "export to a voting post" option). Not all of these apply to an itch.io-hosted jam, but they can easily be skipped. Unfortunately, it doesn't have itch.io integration or similar bells and whistles. It would be really handy if you could authenticate somehow and send comments and reviews from the app, but at the moment I don't know if an API for that even exists.

Still, I think with a few quick features (like opening the game's itch.io page) it could make the playing and reviewing process much smoother, leading to more ratings and feedback being given. Plus, because the games are presented in a randomised order (unless you specifically search for individual entries), some participants may get a bunch of reviews without having to beg for them (which is an aspect of larger jams I find pretty bothersome).
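A randomised presentation order like the one described above can be sketched in a few lines of Python. This is a minimal illustration under my own assumptions. The `presentation_order` helper and the idea of seeding by a voter id are hypothetical, not necessarily how the Jam Player does it. Seeding per voter keeps each person's order stable between sessions, while different voters start from different entries.

```python
import random

def presentation_order(entries: list[str], voter_id: str) -> list[str]:
    """Return a per-voter shuffled copy of the entry list (hypothetical helper)."""
    rng = random.Random(voter_id)  # seeding by voter id keeps this voter's order stable across sessions
    order = list(entries)          # shuffle a copy so the canonical entry list stays untouched
    rng.shuffle(order)
    return order


entries = ["Entry A", "Entry B", "Entry C", "Entry D"]
print(presentation_order(entries, "voter-1"))
print(presentation_order(entries, "voter-2"))
```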

If it turns out the number and downloaded volume of entries is reasonable, I'm willing to download them the day after the jam ends and put together the ZIP with the Jam Player included. It might not work for Linux-only or Mac-only entries, but I think it will still cover most of them. What do you all think?

By the way, here's an ongoing vote with the Jam Player integrated, if someone wants to try it out: https://forum.gamemaker.io/index.php?threads/the-pickled-gmc-jam-56-voting-topic...

Might be good to fix this:

- Start: Friday, July 11, 2025 @ 18:00 UTC

- Submission Deadline: Wednesday, July 18, 2025 @ 23:59 UTC

- Voting Ends: Wednesday, July 25, 2025 @ 23:59 UTC

Because July 18 and July 25 are very much not Wednesdays. (I hope both are meant to be Fridays, for a 7-day jam, rather than Wednesdays, which would make it shorter.)
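For anyone who wants to double-check the weekdays, Python's standard `datetime` module can do it in a few lines. This is just a quick sanity check and not part of any jam tooling:

```python
from datetime import date

# Dates as listed in the quoted jam schedule
schedule = {
    "Start": date(2025, 7, 11),
    "Submission Deadline": date(2025, 7, 18),
    "Voting Ends": date(2025, 7, 25),
}

for label, day in schedule.items():
    # %A is the full weekday name (English under the default C locale)
    print(f"{label}: {day:%A, %B %d, %Y}")
```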

Also also, I'd suggest a voting period of at least two weeks. Even if only half of the currently declared people submitted an entry, that would make for over 40 entries to play in a week, which is tricky if you don't have much free time. And even if 2 weeks won't be enough for some people, it should still lead to more complete voting from individuals, which means more feedback for everyone and somewhat fairer results.

I got some Undertale vibes, especially from the overworld (might be the bright path that resembles a similar puzzle from UT), and the battle backgrounds really make me think of Earthbound (even if I haven't actually played it).

Balancing dodging against inputting the correct elements might be good as a general concept, but I feel the execution here doesn't quite work. It might have something to do with enemy attacks being kinda wobbly and hard to read direction-wise, to the point I couldn't quite react in time. Meanwhile, quickly making elemental attacks was effective enough that I didn't really need to dodge in the first place. Undertale's system of separate attack/action sections and dodging sections works better, in that the player can focus on one thing at a time.

Other than that, the game was pretty short and sweet. The overall story reminded me of Homunculipse; if you've played that game, you may notice why. Nicely done, overall. ^^

I completed the game, and now the blob of non-matter is sleeping soundly... didn't it forget about Polwan, though?

I won't dwell on the jump delay and lack of audio, as I'm sure you've already heard about them plenty of times. One thing I wonder about is whether elemental input could be simplified somewhat. One idea would be using the same key to apply all elements, just picking whichever is applicable at the moment. But of course, it would cause trouble with overlapping element-dependent items.

Another idea that comes to mind is an approach like the one in Fury of the Furries. There, if you stood still on the ground, you could enter an ability-switching mode and then exit with whatever ability you needed at the moment. Then you could let the player use abilities freely (rather than at designated spots) and have them figure out what works where. Of course, it'd likely require redesigning gameplay elements, though that may be for the better; the current objects were mostly "railroady" in their use, even the multi-element ones like cauldrons.

I think the game does a good job presenting its setting. There's the story in the description, but I also saw all these posters, graffiti and such. I think focusing on the setting and storytelling, as well as making creative bosses themed after specific kinds of alchemy, could work really well for this game.

Overall, I enjoyed the demo, though I feel the gameplay elements and/or level design could be reworked to allow a greater variety of interactions (rather than boiling down to "I see wind, I use air, I see pipe, I use water, I see wood, I use fire").

Thanks a lot for the review, and I'm glad you enjoyed it so much!

Just so you know, you should be able to open the save after completing the last level. If you avoid the crash (don't leave the last puzzle room; goto towerentrance instead!), you may even see the sky change to a purplish night tint in the relevant areas. ^^

I don't think I'll change the graphics much for the immediate post-jam version - maybe some tweaks here and there or additional elements. I'd focus on deploying the softlock/crash fix, including some quality-of-life changes, making a few new levels, adding the missing sound effects and hopefully including the tracks for other levels of progression. I might make some kind of remastered version later on, with a somewhat larger scope and hopefully refreshed graphics. We'll see...

Nice concept, although I would prefer if the light-side form had its own abilities that the shadow form doesn't have, and the shadow form then had to fulfil its own conditions for a transformation. I think in the long run, it could open up opportunities for some interesting gameplay choices - which heart-pieces to collect, when to perform the transformation and the like.

Other than that, there wasn't much content to the game, so I can't quite tell how much potential the intended mechanics have. I did like the audiovisuals; I especially adore the main character's design. ^^

I take it the game has two levels? At least when I completed the second one, I got back to the menu.

Anyway, it has been pretty fun walking around and trying to get the enemies into the shadow of the attack blocks (or the shadow shield). It took me a bit to figure out how things work, but once I did, it was pretty nice. That said, casting the Solidify/Illuminate spells felt a bit clunky, so I preferred to walk around and lure the ghosts into block shadows instead.

Nicely done, overall. ^^

The general concept is fine, but I think the game would benefit a lot from some quality-of-life tweaks. In particular, adding an extra highlight to hovered items would help a lot (it might be limited to a certain distance from the candle). Also, I had trouble grabbing the books for some reason, which took away some of my precious candle time. Finally, I'd much prefer if the colour options were integrated into the game UI, rather than hidden away in the settings.

Unless you deliberately aim for a meta-screwy gameplay, the interaction options available to the player should be properly discoverable (and if you do aim for meta-screwy gameplay, it doesn't count until you need to edit a save file somewhere, I suppose 🙃).

I feel the game needs to provide a few more hints than it currently does. In particular, the numbers on the books were an anti-hint, since they didn't really matter at all, and it wasn't clear to me that I needed to spell out the name of the person in the portrait. On that note, did you know that "Son Winter" is one of the valid anagrams?

I really like the art, and the voicelines were a nice touch too. I feel that if the mechanics were polished a bit - and maybe candles didn't burn out at all (some could be used to measure hours) - the game could become quite approachable for casual players, who, going by the GDD, seem to be one of the intended audiences.

The core concept here is neat. I settled on a handful of collection range upgrades + basic attack upgrades + ring of fire + some magma fireballs for extra clearing. Ring of fire in particular is probably responsible for some 99% of enemy deaths.

One annoyance is that you can't figure out the additional elements other than by trial and lots of error, and you lose your elements in the process. The reason Doodle God works is that you aren't really punished if you don't find a correct combination (and it also had hints). I would prefer if you didn't lose the elements, or if any combination you had already tried became disabled. On that note, a UX nitpick: the RMB "Create" button no longer works for complex elements that have run out. Either way, I found Steam/Cloud/Snow/Mud/Magma and didn't notice any other element combinations, despite seemingly trying all of them (Snow in particular seemed like a dead end).

Also, while there were quite a few upgrades, many of them boiled down to "stack an attack performed every X seconds". Aside from the general damage + fire rate upgrade and collecting radius upgrade, I'd like to see more upgrades directly affecting the player character and main attack, e.g. health restoration or chaining.

One more thing: it would be nice if triggering the upgrade screen also caused some knockback near the player, so that the player doesn't end up returning to the main game too abruptly.

The artstyle is neat and mostly clear regarding what happens in the game (though at some point the enemies swarm so much that the player doesn't have many strategic decisions left). The music is fitting. At some point the game lagged, though I think that's a given once you advance far enough in this genre (especially with web builds).

Pretty nice overall, but I feel the element upgrades system could use some extra UX love.

I like the concept here, and managed to complete all 3 puzzles; admittedly, I had to rewind a few times to take a closer look, but eventually figured things out. I also like how later levels would have you find the right spot for the spotlight.

One annoyance was picking up items and then dropping them. Often the Drop command wouldn't appear, and after a bit of mouse juggling it would appear at a position that left the item in a weird spot. I feel like my playthrough would have been significantly shorter and smoother if not for this oddity.

The graphics were fine; too bad there was no audio. Neat entry, overall.

I lost to the cellar chaos boss, but I got a completion message anyway? I'll take it, the celestial dog already gave me a run for my money. ^^'

That's quite a lot of content. I know quite well that once you get past implementing the core mechanics, adding levels/items/enemies/etc. becomes relatively fast, but still, it's quite a lot - especially with all those enemy portraits and shadows and other graphics. The tutorial might have had a tad too much text at once. It would be nice if player input was permitted in the meantime, or if some explanations (like matching element affinities) were held back until they became truly relevant. Still, there were quite a lot of mechanics, and it was interesting having to balance skills so that they target the affinity but also don't defeat the enemy too early.

A little nitpick: I'd prefer if I could speed up the dialogue typewriter by clicking once to reveal the whole line, and then clicking again to advance. In the first place, the typewriter effect feels pretty redundant when you interact with pre-written text rather than people speaking. ;) But those are details.
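The click-to-complete, click-to-advance behaviour suggested above could look roughly like this. It's an engine-agnostic Python sketch. The `Typewriter` class and its `tick`/`click` methods are hypothetical names, and `tick` is assumed to run once per frame.

```python
class Typewriter:
    """Text box where the first click reveals the rest of the line and the next click advances (sketch)."""

    def __init__(self, lines: list[str], chars_per_tick: int = 1):
        self.lines = lines
        self.index = 0    # which line is currently shown
        self.visible = 0  # how many characters of that line are revealed
        self.chars_per_tick = chars_per_tick

    @property
    def finished(self) -> bool:
        return self.index >= len(self.lines)

    def tick(self) -> None:
        # Called once per frame: reveal a few more characters of the current line
        if not self.finished:
            line = self.lines[self.index]
            self.visible = min(self.visible + self.chars_per_tick, len(line))

    def click(self) -> None:
        if self.finished:
            return
        if self.visible < len(self.lines[self.index]):
            self.visible = len(self.lines[self.index])  # first click: complete the line instantly
        else:
            self.index += 1                             # second click: move on to the next line
            self.visible = 0

    def shown_text(self) -> str:
        return "" if self.finished else self.lines[self.index][: self.visible]
```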

Pretty nicely done, overall; some mechanics could be polished here and there, but the game has a nice amount of depth and content to it. ^^

I think the concept is fine, though I find it hard to predict how the shadow's element changes. Sometimes it follows whatever element I use, and sometimes it gets stuck on whichever element it had earlier. Other than that, the variety of spells was nice, but in practice I opted for a single-element build. That was partly because I couldn't tell how the shadow's elements worked, and partly because it was too much thinking on top of trying to target the enemies following me.

The gameplay felt a bit clunky. Part of that may be my laptop not being a speed demon, so the framerate was pretty limited? But I also felt like the second area in particular was really cramped, and on top of that I'd sometimes get stuck in a sneaky corner. Sometimes I also wouldn't see enemies that were right next to me, and there didn't seem to be any auditory feedback for their melee attacks. I feel like switching the game to a third-person perspective could alleviate some of these issues, as I'd be able to see nearby walls and melee attackers.

Still, I enjoyed casting spells here and there, and the variety of upgrades was nice. I won't judge the assets, because by your own admission they were mostly external ones. Overall, it's pretty nicely put together; I just wish the game (especially the second area) was a bit more spacious, to give better opportunities to outmaneuver the enemies.

The gameplay has an interesting general premise, though I feel it could have worked better if throw-towards-mouse behaviour was removed altogether in favour of throw-towards-player-direction. As it is, I just ended up placing the mouse in the middle of the cauldron and ignoring it for the rest of the game.

I liked the variety of potions here, although some potions' purpose was unclear (what is "Potion of Supplementary Potions"?) and I'd sometimes get a repeated one (e.g. "Potion of Feline Vision"). It would be nice if all 25 potions were shuffled, and then, if the player manages to brew a 26th, they complete the game. Also, the potion name could have a brief description below it explaining how it actually benefits the player.
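A "shuffle everything once" potion order is essentially a shuffle bag. Below is a minimal sketch of that idea. The potion names are placeholders, and `potion_sequence`/`next_reward` are hypothetical helpers, not code from the game.

```python
import random

def potion_sequence(potions, seed=None):
    """Shuffle every potion exactly once, so no potion repeats within a run."""
    rng = random.Random(seed)
    bag = list(potions)
    rng.shuffle(bag)
    return bag

def next_reward(sequence, brewed_count):
    """Reward for the next brew; brewed_count is how many brews were already completed."""
    if brewed_count < len(sequence):
        return sequence[brewed_count]
    return "GAME COMPLETE"  # the brew after the last potion (the 26th for 25 potions) ends the game


potions = [f"Potion #{i}" for i in range(1, 26)]  # placeholder names for the 25 potions
run = potion_sequence(potions, seed=7)
print(next_reward(run, 0), "...", next_reward(run, 25))
```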

The balancing between light and darkness was also nice, though I wish the ingredients spawned a liiittle closer, within the ring of light (as in, half-shadow rather than complete shadow?). That, or maybe log spawning and ingredient spawning could have their own sound effects, so I would know when to add some wood to the fire to search for a fresh ingredient?

It took a while for a new type of enemy to appear; for a moment there I thought the hands of darkness would be the only enemy I'd encounter. I eventually survived long enough to see the shooting enemies. They feel kind of overpowered, especially since they seem to start shooting as soon as they spawn. ^^'

Props for having an option to change controls - I ended up going for an arrow keys + Z/X/C setup, so that one hand takes care of movement while the other handles interactions. Too bad the settings aren't kept between games. A local record of the best time would also be nice.

Pretty nice game overall, though it could use some tweaking here and there to make it work better.

That's a funky game! I managed to complete all 30 levels on the second try (the first try was me figuring out which part was what).

The gameplay was fairly simple, and I figured the easiest way was to remember the nouns (since they're different for each ingredient), so the "phrase" to remember is significantly shorter. I really like the take on the theme here - having to perform alchemy in the shadows, integrating both theme components into the gameplay.

The graphics were pretty nice; one nitpick would be the white outline below the main character, making it seem like their body was cut in half. Music was pretty fitting, even if occasional alchemist noises distracted me a bit. ^^'

Nicely done, overall!

I quite enjoyed the concept here; it took me a few tries to figure the game out. Some little bits I didn't quite realise at the start were that you can pick up two drinks at once, what the condition for the clear bar is (it's not having any leftover drinks, by the way), and that the brew bonuses from previous rounds are actually permanent (I originally thought they were for one day only). After I figured this out, things got smoother as I optimised my gameplay more and more.

I feel like the brewing ingredients could use some balancing. For example, for the $10 herbs that give higher tips, you need to receive $2000 worth of base tips ($2010 total) for them to pay for themselves. Also, the species-specific bonuses increasing DoT were priced only barely more efficiently for their specific species, and they didn't affect other species. So in the end, I kept spending my money on Transylvania GNS. It would take over 10 levels before the money bonuses paid for themselves, and most problems didn't come from serving speed but from the bar being occupied.
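The break-even point is simply the ingredient's cost divided by the tip bonus rate. The $2000 figure implies a bonus of 0.5% ($10 / $2000). That rate is inferred from the numbers above rather than taken from the game, and `break_even_base_tips` is a hypothetical helper. The bonus is expressed in basis points so the arithmetic stays exact.

```python
def break_even_base_tips(ingredient_cost: float, tip_bonus_bp: int) -> float:
    """Base tips needed before a tip-boosting ingredient pays for itself.

    tip_bonus_bp is the bonus in basis points (1 bp = 0.01%), so 50 means +0.5% tips.
    """
    return ingredient_cost * 10_000 / tip_bonus_bp


base = break_even_base_tips(10, 50)  # $10 herbs, assuming a +0.5% tip bonus
total = base + base * 50 / 10_000    # base tips plus the extra earned from the bonus
print(base, total)
```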

When it comes to the bartending phase, I'd suggest giving the annoyance bar a different background from the drunkenness bar, so it's easier to tell whether someone is increasingly annoyed or increasingly drunk. Also, I noticed that the drunkenness bar is drawn higher than the annoyance bar. I feel it should be the other way round: knowing that the customer is getting more annoyed is more crucial for the player, so it's best if annoyance is what sticks out. Auditory feedback (like an annoyed grunt or growl or something) when a customer is halfway annoyed would also help.

Also, it would be nice if a customer's annoyance paused while they're heading to the next free position (rather than while they're wandering around and waiting for a spot to free up). Otherwise, an unlucky player might end up in a situation where a customer enters, gets a little annoyed, and then goes to the furthest possible spot. By the time you have any chance to give them the drink, they're already furious.
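Both bar suggestions fit in a small update step: pausing annoyance while a customer walks to a free spot, and a cue at the halfway mark. This is a sketch with hypothetical names (`Customer`, `update_annoyance`) and an assumed 0-1 annoyance scale, not the game's actual code.

```python
from dataclasses import dataclass

@dataclass
class Customer:
    annoyance: float = 0.0         # 0.0 = calm, 1.0 = furious (assumed scale)
    walking_to_spot: bool = False  # True while heading to a newly freed bar position

def update_annoyance(c: Customer, dt: float, rate: float = 0.1) -> bool:
    """Advance annoyance at `rate` per second over dt seconds.

    Returns True on the update where annoyance crosses the halfway mark.
    """
    if c.walking_to_spot:
        return False  # annoyance is paused while the customer is on the move
    before = c.annoyance
    c.annoyance = min(1.0, c.annoyance + rate * dt)
    return before < 0.5 <= c.annoyance  # a good moment to play an annoyed grunt


c = Customer(walking_to_spot=True)
update_annoyance(c, 5.0)      # paused, so annoyance stays at 0.0
c.walking_to_spot = False
if update_annoyance(c, 5.0):  # 0.0 -> 0.5 crosses the halfway mark
    print("grr")
```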

There are quite a few suggestions here. That's not because the game is bad, but because it could be made even better with a few tweaks. The game has generally been enjoyable, the artwork was nice and detailed, and the music fit as well. Well done! ^^

Thank you for your response (and, uh, for other responses so far too ^^).

I understand the point about the design goal of the system, namely that it's primarily meant to compare entries against one another. My point about agency likely stems from the emphasized suggestion to "participate in rating games" and the general encouragement to participate. It's a very valid message, but it also made it sound like it's primarily the participant's responsibility to get their game above the median. Closer to reality, it's a combination of the participant's involvement and factors outside their control.

I'd like to point out there are two key aspects to the Jam experience:

- "global" aspect - what kind of games were created and whether top-ranked entries deserve their spots (since it's the top places people are mostly excited about)

- "individual" aspect - how one's entry performed, both in terms of feedback and ranking

The median measure seems focused on improving the global aspect - making sure that ratings are fairer.

Except it's a finicky measure, because:

- You mention a 6-vote entry ranking above a 200-vote entry, which I presume refers to my 80% (rounded-up) measure. But this implies the median is 7, and a 7-vote entry ranking above a 200-vote entry doesn't seem like a massive improvement.

- In a recent (non-itch) Jam I participated in, there were 25 entries, with votes from 19 entrants plus 4 more people. Most of them ranked nearly all entries (people couldn't rank their own entry). The median-and-above entries got 20-22 votes, while the below-median entries mostly got 18-19 votes (two entries got 14 and 16 votes). Also, one of the 19-vote entries placed 5th out of 25, making it a relevant contender.

With the strict median measure, an entry getting 19 votes would have its score adjusted, while the most-voted entry with 22 votes would not. That means that, depending on the situation, 19 is deemed too unreliable compared to 22, while in another Jam 7 seems reliable enough compared to 200. Now, even with my proposal it would be a 16-22 spread vs a 6-200 spread. Still, it goes to show that the median system adds extra noise when all entries are voted on almost evenly, potentially near the top-ranking entries too. The difference is that the raw median semi-randomly punishes 11 out of 25 entries, while with my adjustment only 1 of 25 entries qualifies for score adjustment - that's 10 fewer!

I guess the problem of entries with extreme vote counts can be tackled in two ways (maybe even with both measures at once):

- Promote high-ranking (e.g. top 5%) low-voted entries, so that more people will see them, and they will either prove their worth or get knocked off their high horse. People don't even need to be specifically aware that these are near-top entries (especially since the temporary score isn't revealed). What matters is that they'll play, rate and verify them.

It's sort of "unfair" to poorer-quality entries, but the odds are already stacked against them, and it can improve the quality of the top rankings by whittling down the number of undeserved all-5-star outliers. And let's face it - who really minds that a 6-vote entry with all 2s ranks above a 200-vote entry with mostly 2s and some 1s?

- More work in this one, but with great potential to improve the jam experience - streamline the voting process.

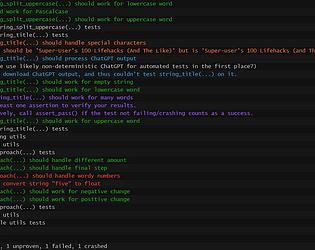

In that Jam I mentioned, we have a tool called "Jam Player". It's packaged with the ZIP of all games, and from there you can browse the entries, run their executables, write comments, sort entries etc. As the creator of the Jam Player I might be blowing my own horn, but before it existed, lots of voters played only a fraction of the games. Ever since the Jam Player was introduced, the vast majority of voters play all or nearly all entries, even when the number of entries reaches 50 or so. With 80 entries, the split between complete-played and partially-played votes was more even, but still in favour of complete-played.

I imagine a similar tool for an integrated voting process could work for itch.io. Obviously, there are lots of technical challenges in going from a ZIP-embedded app for a local jam to a tool handling potentially very large Jams. But with itch.io hosting all the Jam games, it might be feasible (compare that with Ludum Dare and its free links). With such a player app, the same people would play more entries, making the vote distributions more even and thus more reliable (say, something like 16/20 vs 220 instead of 6/7 vs 200).

Perhaps I should write up a thread on the itch.io Jam Player proposal...
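The first measure (promoting high-ranking, low-voted entries) could be sketched as a simple filter. This rests on a few assumptions: each entry exposes its current raw score and vote count, "top 5%" is taken by raw score, and the `Entry`/`entries_to_promote` names are hypothetical. It is not an existing itch.io feature.

```python
import math
from dataclasses import dataclass
from statistics import median

@dataclass
class Entry:
    title: str
    score: float  # current raw average score (hidden from voters during the jam)
    votes: int

def entries_to_promote(entries: list[Entry], top_percent: int = 5) -> list[Entry]:
    """Entries in the top `top_percent`% by raw score that have fewer votes than the median."""
    med = median(e.votes for e in entries)
    ranked = sorted(entries, key=lambda e: e.score, reverse=True)
    top_n = max(1, math.ceil(len(ranked) * top_percent / 100))  # always consider at least the top entry
    return [e for e in ranked[:top_n] if e.votes < med]
```

Nothing here tells voters why an entry is shown to them, which matches the idea that they just need to play, rate and verify it.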

The 80% median seeks to improve the individual aspect - making it easier to avoid the disappointment of having one's own entry score-adjusted despite one's efforts.

If someone cares about not getting their score adjusted and isn't a self-entitled buffoon, they'll do their best to participate and make their entry known. If someone doesn't care, then they won't really mind whether their entry gets score adjusted or not. The question is, how many people care and how many don't.

If fewer than 50% of people care, they'll likely end up in the higher-voted half of entries. Thus, there's no score adjustment for them, the lower-voted half doesn't care, and everything is good.

However, if more than 50% of people care, some of them will inevitably land in the score-adjusted lower half. E.g. if 70% of people cared about score adjustment, then roughly 20% would get score-adjusted despite their efforts not to. The score adjustment might not even be that much numerically, but it can still have a psychological impact, like "I failed to participate enough" or "I was wronged by bad luck". I'm pretty sure it would sour the Jam experience, which goes against the notion of "the jam is the best experience possible for as many people as possible". Given that 60-70% of Ludum Dare entries end up above the 20-ratings threshold, and that 19 of 25 entrants voted in the Jam I mentioned, I'd expect at least half of the participants in a typical jam to care.

Do note that in the example Jam from earlier, 9 of the 19 voting entrants would get score-adjusted under the 100% median system, despite playing and ranking all or nearly all entries. Most of them gave quality feedback, too; you can hardly participate more than that. Now, I don't know about you, but I'd be quite salty indeed if I lost a rank or several to score adjustment despite playing, ranking and reviewing all entries, just because someone didn't have time to play my game and its vote count fell below the median.

With the 80% median system, all voting entrants would pass at the cost of a 16 vs 22 variance, which isn't much worse than a 20 vs 22 variance (the least-voted entrant didn't vote).

To sum it up:

- if the vote count variance is outrageous in the first place (like 6/7 vs 200), then sticking to a strict median won't help much

- if the vote count variance is relatively tame (like 18 vs 22), then using a strict median adds more noise than it removes

- provided that someone cares about score adjustment and actively participates to avoid it, the very fact of being score-adjusted can be souring/discouraging, even if the adjustment amount isn't all that much

- rather than adhering to a strict median, the vote variance problem may be better solved by promoting high-ranked low-voted entries (so that they won't be so low-voted anymore) and by increasing the number of votes per person through a smoother voting process (like the Jam Player app; this one is ambitious, though)

- with more votes per person, and thus a more even distribution of votes, we should be able to afford some leeway in the form of the 80% median system

Also, thanks for the links to the historical Jams. Is there some JSON-like API that could fetch past Jam results (entry, score, adjusted score, number of times the entry was voted on) for easier computer processing? Scraping all this information from webpages might be quite time-consuming and transfer-inefficient.

I want to emphasize that avoiding the score adjustment is not a design goal of this system. The point of the adjustment is to allow entries to be relatively ranked in the bottom half by minimizing the randomness factor by scaling down scores with lower levels of confidence.

It is not, but I believe enforcing the score adjustment isn't a design goal of this system, either.

The problem with the current system is that even if everyone puts in at least close-to-median effort, they still might get their score adjusted semi-randomly, with some entries getting one or two ratings below a median of, say, 20. It's just like how rolling a 6-sided die 60 times doesn't mean every number will appear exactly 10 times. This can lead to a somewhat ironic situation, where the system designed to minimise the randomness factor introduces another randomness factor (i.e. which entries end up with an adjusted score and which don't). After all, using the median means that - excluding entries with exactly the median number of votes - the lower-voted half of entries will get their scores lowered no matter what.
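The die analogy is easy to try out: even a perfectly fair die rarely gives exactly ten of each face over 60 rolls. Here is a throwaway sketch using Python's `random` module. The seed is arbitrary and only makes the run reproducible.

```python
import random
from collections import Counter

rng = random.Random(2025)  # arbitrary fixed seed for a reproducible run
rolls = Counter(rng.randint(1, 6) for _ in range(60))

for face in range(1, 7):
    # the expected count per face is 60 / 6 = 10, but actual counts typically scatter around it
    print(face, rolls[face])
```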

Also, while the median increasing by 1 might not be significant with a median of 100 votes, the score adjustment might be more significant with a median of 20. And since the median depends on how many games people can play within the voting time (as opposed to the number of entries), I'd wager a median of something like 10-20 across 200 entries wouldn't be all that unusual. With medians this low, the randomness factor of score adjustment becomes particularly prominent - possibly even more so than the few-votes variance it's designed to minimise.
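To put numbers on this, assume the adjustment has the form raw × sqrt(min(votes, median) / median). That is how I understand itch.io's documented scaling, but treat the exact formula as an assumption. Under it, being one vote short costs noticeably more at low medians. `adjusted_score` is a hypothetical helper.

```python
import math

def adjusted_score(raw: float, votes: int, median_votes: float) -> float:
    """Scale a raw score down when it has fewer votes than the median.

    Assumed form: raw * sqrt(min(votes, median) / median); at or above the median the score is unchanged.
    """
    return raw * math.sqrt(min(votes, median_votes) / median_votes)


for med in (100, 20, 10):
    kept = adjusted_score(1.0, med - 1, med)  # an entry exactly one vote short of the median
    print(f"median {med}: one vote short keeps {kept:.1%} of the raw score")
```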

Another randomness factor comes from the indirect relationship between giving and receiving. Some people might get lucky and receive 100% reciprocal votes, while others might often give their feedback to people who aren't interested in voting at all. I'm not sure whether itch.io displays entries with a higher "coolness rating" (i.e. how much feedback the author gave vs how many votes their entry received) more prominently. That would definitely strengthen the cause-effect link in the giving-receiving relationship.

On the other hand, I imagine public voting would add some extra randomness to giving-receiving, because there's no way to vote on a public voter's entry in hopes of receiving a reciprocal vote. I suppose public voting shifts relevance away from feedback-giving towards self-promotion (the higher the proportion of public voters, the more self-promotion matters compared to feedback-giving). I'm not calling for removing public voting altogether. Rather, I'm pointing out another reason why voting for other entries might not always be the most effective or reliable method of getting past the median threshold.

I do not advocate for 100% of entries avoiding score adjustment most of the time. I do, however, believe that if I take the time to cast a median number of votes, I should reliably be able to avoid score adjustment (say, 95%+ of the time). Thus, as part of the number-checking for previous Jams, it might be worth finding out how votes given correlate with votes received. In particular, what fraction of the median would I be guaranteed to receive 95%+ of the time if I voted on a median number of entries? This could give a more fitting median multiplier than the gut-feeling 80% I initially proposed (assuming my proposal would be implemented in the first place).
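The check proposed above could be computed from past results roughly like this. It assumes per-entrant records of (votes given, votes received), which I don't know to be available in that form. The data shape and the `guaranteed_fraction` name are hypothetical. "Guaranteed 95% of the time" is read as the nearest-rank 5th percentile of votes received among entrants who voted on at least the median number of entries.

```python
import math

def guaranteed_fraction(records, median_votes, confidence=0.95):
    """Fraction of the median that median-level voters received with the given confidence.

    records: iterable of (votes_given, votes_received) pairs, one per entrant (hypothetical shape).
    """
    received = sorted(r for given, r in records if given >= median_votes)
    if not received:
        raise ValueError("no entrant voted on at least the median number of entries")
    # nearest-rank lower quantile: at least `confidence` of these entrants received this much or more
    k = math.floor((1 - confidence) * len(received))
    return received[k] / median_votes


# Toy data: 20 median-level voters who received 11..30 votes, plus one light voter who is ignored
toy = [(20, r) for r in range(11, 31)] + [(5, 1)]
print(guaranteed_fraction(toy, 20))
```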

My fix isn't so much meant to ensure that no one gets their entry punished, but rather that everyone has a reasonable chance of avoiding the punishment with proper effort.

The current system is somewhat volatile in that increasing the median by 1 means previously median-ranked games become punished. So people are sort of encouraged to get their game's vote count significantly above the median, which itself eventually increases the median. Also, voting on/rating other entries isn't 100% efficient: the score depends on the game's own ratings, not the author's votes, and not everyone returns the favour. So it further compels people to push the median up. It might help the number of votes, but it can make the jam more stressful/frustrating for participants (and perhaps make them seek shortcuts by leaving lower-quality votes).

My fix is meant to stabilise the system. People might aim for the 80% threshold (or maybe 90%), but then they still end up on that shaky ground. However, those who get their votes to the median level are in a comfortable position: their entries can still absorb an extra 25% of median growth (e.g. the median increasing from 16 to 20), and they don't need to rev up the median (potentially punishing other entries in the process) to make sure they're on the safe side.
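The 16 → 20 figure follows from the rounded-up threshold. A minimal sketch, assuming the threshold is ceil(80% of median) as proposed; the function names are hypothetical. Since ceil(m * p / 100) <= v exactly when m <= 100 * v / p, the largest median an entry can tolerate has a simple closed form.

```python
def threshold(median: int, percent: int = 80) -> int:
    """No-penalty threshold: percent% of the median, rounded up (integer arithmetic avoids float error)."""
    return (median * percent + 99) // 100


def max_safe_median(votes: int, percent: int = 80) -> int:
    """Largest median at which an entry with `votes` votes still meets the threshold."""
    # ceil(m * percent / 100) <= votes  <=>  m <= floor(votes * 100 / percent)
    return votes * 100 // percent
```

With `percent=100` (the current rule) an entry at the median has no margin at all: `max_safe_median(16, 100)` is just 16.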

If we add to that:

- clear information on the game's page about the current median, the threshold, and what they mean for the entry

- a search mode listing entries voted below the median (not the threshold, because the median is safer), sorted by the author's coolness (to add extra cause-and-effect between voting for other entries and getting one's own entry voted on)

then everyone should be able to safely avoid punishment by putting in roughly a median-sized effort. And given how active some voters can get (note that a voter can single-handedly increase the median by 1 by voting for all entries), a median-sized effort is by no means insignificant.
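The suggested search mode could look something like the sketch below. Everything here is hypothetical: the `Entry` fields are not itch.io's actual data model, and "coolness" is assumed to be the author's votes given minus their entry's votes received, following the rough description earlier.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Entry:
    # Hypothetical fields, not itch.io's real data model.
    title: str
    votes_received: int
    author_votes_given: int

    @property
    def coolness(self) -> int:
        # ASSUMPTION: coolness = feedback given minus votes received.
        return self.author_votes_given - self.votes_received


def below_median_by_coolness(entries):
    """Entries with fewer votes than the median, authors with the highest coolness first."""
    med = median(e.votes_received for e in entries)
    below = [e for e in entries if e.votes_received < med]
    return sorted(below, key=lambda e: e.coolness, reverse=True)
```

Filtering on the median rather than the threshold matches the point above: it surfaces entries before they're actually in danger.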

How about keeping the median as a point of reference, but making the no-penalty threshold slightly lower?

For example, 80% of the median (rounded up) as opposed to 100% of the median. A game getting 8 votes shouldn't have much higher variance than a game with 10 votes; likewise a game with 80 votes compared to one with 100 votes (compare that to e.g. 2 votes vs 10 votes). Getting a number of votes below that threshold decreases the rating like now, but with 80% of the median as the point of reference.
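A minimal sketch of the proposal. It assumes the penalty keeps the shape I believe itch.io currently uses, raw × sqrt(min(votes, ref) / ref), and only swaps the reference point from the median to the threshold; the exact current formula is an assumption, and the function names are hypothetical.

```python
import math


def threshold(median: int, percent: int = 80) -> int:
    """No-penalty threshold: percent% of the median, rounded up, in integer arithmetic."""
    return (median * percent + 99) // 100


def adjusted_score(raw: float, votes: int, median: int, percent: int = 80) -> float:
    """
    ASSUMPTION: penalty shape sqrt(min(votes, ref) / ref), with the threshold `ref`
    as the reference point. percent=100 reproduces a median-based rule.
    """
    ref = threshold(median, percent)
    if ref == 0:
        return raw  # no votes cast anywhere yet; nothing to compare against
    return raw * math.sqrt(min(votes, ref) / ref)
```

With a median of 10, an entry with 8 votes keeps its raw score under the 80% rule, but is penalised under the 100% rule.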

With that approach, it's perfectly feasible for 100% of entries to avoid the penalty, as long as the lower half of entries (by votes received) stays within 80%-100% of the median. As it is now, an entry must either be in the top half of most-voted entries or get exactly the median number of votes in order to avoid the penalty.

Also, showing the median and no-penalty threshold during voting, along with how many votes each entry has, would allow participants and voters to make an informed decision about how many votes need to be gathered/cast to keep themselves/others out of the penalty zone without overcommitting to the jam.
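Such a display is cheap to compute. A minimal sketch, assuming the threshold is 80% of the median rounded up and that an even number of entries gives a half-integer median (as Python's `statistics.median` does); the function name and dictionary input are hypothetical.

```python
import math
from fractions import Fraction
from statistics import median


def voting_dashboard(votes_by_entry: dict[str, int], percent: int = 80):
    """
    Return the current median, the no-penalty threshold (percent% of the
    median, rounded up) and how many more votes each entry needs to reach it.
    """
    med = median(votes_by_entry.values())  # may be x.5 for an even number of entries
    # Fraction keeps the multiplication exact, so ceil() can't be thrown off by float error.
    thr = math.ceil(Fraction(med) * Fraction(percent, 100))
    shortfall = {name: max(0, thr - v) for name, v in votes_by_entry.items()}
    return med, thr, shortfall
```

An entry's shortfall is what its author (or generous voters) would need to cover; zero means it's currently out of the penalty zone.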

By still using the median as the point of reference, the system keeps its scalability. By lowering the threshold to 80%, the system doesn't penalise nearly all entries in the lower half of most-voted entries, and isn't so sensitive to the median changing by just 1 vote. Finally, by keeping the threshold around 80% rather than 50% or 20% of the median, we can still keep the rating variance comparable between the least-voted non-penalised entry and the median-voted entry.
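A rough back-of-the-envelope check, assuming individual ratings are independent with a common spread $\sigma$: the standard error of an entry's average rating scales as $\sigma/\sqrt{n}$, so an entry at fraction $p$ of the median $m$ compares to a median-voted entry as follows.

$$
\mathrm{SE}(n) = \frac{\sigma}{\sqrt{n}}, \qquad
\frac{\mathrm{SE}(p\,m)}{\mathrm{SE}(m)} = \sqrt{\frac{m}{p\,m}} = \frac{1}{\sqrt{p}}
$$

$$
p = 0.8:\ \tfrac{1}{\sqrt{0.8}} = \sqrt{1.25} \approx 1.118, \qquad
p = 0.5:\ \sqrt{2} \approx 1.414, \qquad
p = 0.2:\ \sqrt{5} \approx 2.236
$$

So under that assumption, the least-voted non-penalised entry's average is only about 12% noisier than the median entry's at an 80% threshold, versus about 41% at 50% and more than double at 20%.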

Thoughts?

I liked the concept and overall presentation, though I feel a few more graphical effects could be sprinkled here and there. Also, maybe go for higher-res graphics for the llama and sparkles, considering the squares are smoothly rotated anyway (note: I prefer hi-res graphics to pixel art in general, given similar quality).

Going through one tunnel after another was pretty fun for a while, but the game never seemed to end, while not offering any extra variation compared to, say, wave 5 (just going faster with sharper "turns"). I eventually stopped at wave 17. If you were to expand upon that idea, I recommend adding extra gameplay elements to keep things fresh.

Like others, I strongly recommend adding at least some form of audio; background music doesn't take long to add if you know where to look, and it can improve the experience by leaps and bounds.

Other than that, there's a decent platforming prototype going on, but very little content (at least the game wasn't packed with lots of levels featuring way too little variety). I think there's some room for improvement as far as game feel goes; maybe add some knockback and/or have the player actually move during attacks, so that the combat doesn't feel "stiff"?

So, the core mechanic is just a basic paint-the-largest-area thing. However, there are loads and loads of amusing events to keep the game interesting, though I'd prefer if large-scale events occurred only once at the beginning of a point, or at least not just before its end. As it is now, a nicely painted area can go down the drain seconds before the point ends, and then whoever is lucky enough to paint the most within those seconds gets the point.

Other than that, I really enjoyed the general aesthetic and variety of powerups. Took me 3 attempts to win on the default settings.

Tricky to learn, but I eventually figured out how to catch creatures on my own. Interesting concept, though there is room for polish in the gameplay (e.g. allowing the same symbol to be used in quick succession) and general communication (the fish-catching animation after going back to the overworld was quite inconsistent, so I wasn't even sure whether I'd caught the fish or not).

I liked the general atmosphere and the variety of environments; even if not directly relevant to the gameplay, it really added life to the game. One potentially nice addition: have the background change between zones gradually (e.g. using merge_color) rather than abruptly. Overall, I managed to find 3 clues and all four fish and completed the game. Nicely done. ^^
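The idea behind a gradual transition is just a linear blend whose amount ramps from 0 to 1 over a number of frames. Below is a language-neutral sketch in Python using RGB tuples; GameMaker's own `merge_color(col1, col2, amount)` does the blending step on GameMaker colour values, so in-game you'd only need the ramp. The 60-frame duration and function names are hypothetical.

```python
def merge_color(col1, col2, amount):
    """
    Linear blend of two RGB tuples: amount=0 gives col1, amount=1 gives col2.
    Conceptually similar to GameMaker's merge_color, but on tuples.
    """
    return tuple(round(a + (b - a) * amount) for a, b in zip(col1, col2))


def background_at(frame, change_frame, old_col, new_col, duration=60):
    """Background colour `frame` steps into a zone change that started at `change_frame`."""
    # Clamp progress to [0, 1] so the blend holds at old_col before and new_col after the transition.
    t = min(1.0, max(0.0, (frame - change_frame) / duration))
    return merge_color(old_col, new_col, t)
```

Storing the frame of the zone change (rather than blending from the current colour each step) keeps the transition linear and lets it finish exactly after `duration` frames.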

Nice little arcade game, fun to play for a few sessions. I haven't quite seen this exact gameplay premise anywhere else, and all types of powerups were a nice and helpful addition (with the Super Stacker powerup being particularly satisfying). It might not have as much *depth* as, say, "Neon of the dark Realm", but it easily makes up for it with a *high* level of polish. Well done. ^^

Oh, and I strongly recommend learning how to set up an online hi-scores system. It really enhances games like these.

Generally, the source code is only required to verify that the game was actually made in GM:S 2, and it doesn't need to be made public. Quoting this post:

> QUESTION: Can you clarify the situation regarding providing source code please @rmanthorp? You said you were sure it wouldn't be public, but haven't actually confirmed this, nor - if it's NOT public - what we'll have to do to let YYG see it.

> ANSWER: Yes. Not public. Anything you don't want to share don't share and when it comes to judging/confirmation we will reach out privately if we require proof of GMS2.

Since the main purpose of the source is verification that the game was made in GM:S 2 - and considering the fact that it doesn't need to be made public in the first place - I think you should be fine.

To be on the safe side, you might want to replace all the paid sound assets with silence (removing sound assets altogether could break things) and explain the situation to YoYo Games (while keeping the original to yourself). I'm sure they'll understand.
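If the paid sounds are WAV files, swapping them for silence of the same format and length is easy to script, so the project keeps the same asset names and durations. A minimal sketch using Python's standard `wave` module; it only handles uncompressed PCM WAV, so compressed formats would need a different tool, and the function name is hypothetical.

```python
import wave


def write_silent_copy(src_path: str, dst_path: str) -> None:
    """
    Write a WAV file with the same format and length as `src_path`, but silent.
    Only handles uncompressed PCM WAV.
    """
    with wave.open(src_path, "rb") as src:
        params = src.getparams()
    # PCM silence: 8-bit WAV samples are unsigned (silence = 0x80),
    # wider samples are signed (silence = 0).
    silent_byte = b"\x80" if params.sampwidth == 1 else b"\x00"
    frame_size = params.sampwidth * params.nchannels
    with wave.open(dst_path, "wb") as dst:
        dst.setparams(params)
        dst.writeframes(silent_byte * (frame_size * params.nframes))
```

Keep the originals somewhere outside the submitted project, and write the silent copies over the files the project actually references.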

I suggest checking out the topic on GameMaker Community forums, especially this post: https://forum.yoyogames.com/index.php?threads/amaze-me-game-jam.86376/post-51671...

Relevant bit:

> I had brought this up but I think we ultimately wanted the rules light as a bit of testing ground for how we are going to be running future events. [...] With the theme in mind you are welcome to toy away with ideas or even get started. Likewise if you can re-use previous assets to fit the theme you are welcome to do so! Obviously don't get stealing things - maybe we should make that clear...

While it doesn't directly state Marketplace assets are allowed, it does mention "previous assets" which are roughly in the same category (resources made before the Jam, whether by the participant or someone else).

Also, elsewhere in that thread I summarised a few points: https://forum.yoyogames.com/index.php?threads/amaze-me-game-jam.86376/post-51673...

In particular this:

> 2. Using pre-existing assets (be it graphics, audio or code frameworks) is perfectly acceptable, as long as there's no stealing (no unlicensed use).

Ross Manthorp (representing the organizers) gave this post a like and didn't add any reply disagreeing with me, so it's reasonable to think he agreed with what I wrote.

Hope this helps. ^^