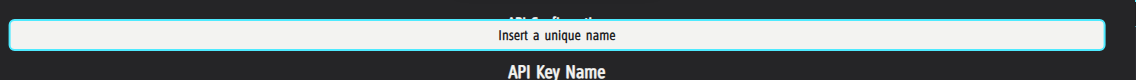

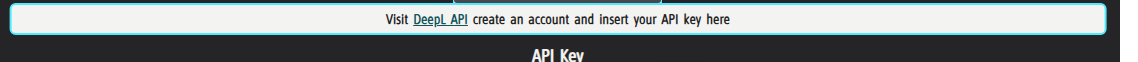

Well, I went ahead and named the key in the “API Key Name” field and pasted the key into the “API Key” field. Then I switched to another DeepL config, and surprisingly the same key showed up there, even though I had erased everything earlier. I kept jumping between the configs, and the pattern held: any change I made in one was reflected in the other. If I deleted the key in one, the other one lost it too.

That persisted until I first switched from a DeepL config to a Custom API config, and then went to the other DeepL config. From that point on, they behaved separately. Now, if I delete the key in one and immediately jump to another DeepL config (without going via Custom API), the other one still keeps its key. I hope it’s really working independently now, and not secretly using the same key under the hood, but we’ll see over time.

By the way, the UI request you made is spot on. It’s exactly what I was looking for. Thank you! :)