I'm not sure, but it may have been because the configs had not yet been updated with the new config entries. Please do let me know if you happen to run into any more bugs like that. I'm glad it's working well now though! :)

0.5.3-alpha.3 is out now.

LLAMA uses parallel requests by default again. This should increase the speed of translations by around 40% when capturing more than a few different lines of text.

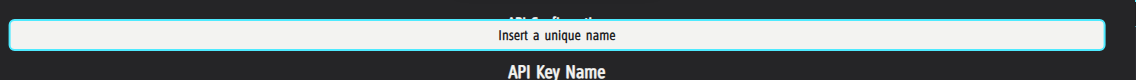

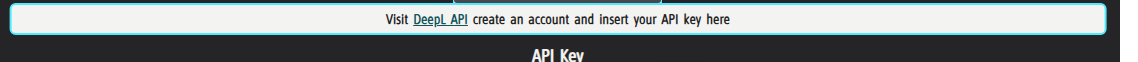

Custom API now has an option to enable parallel processing, with user-configurable rate limits. With this, I managed to get a batch of ~30 texts to translate 100x faster.
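
For anyone curious what capped parallel requests look like in general, here is a minimal Python sketch. It is not the app's actual code: `translate_all`, `fake_translate`, and `max_parallel` are hypothetical names, and the limit here is a simple cap on requests in flight rather than a true requests-per-minute rate limit.

```python
import asyncio


async def translate_all(texts, translate_one, max_parallel=4):
    """Translate texts concurrently, with at most max_parallel requests in flight."""
    semaphore = asyncio.Semaphore(max_parallel)

    async def worker(text):
        # Wait for a free slot before sending the request.
        async with semaphore:
            return await translate_one(text)

    # gather() returns results in the same order as the input texts.
    return await asyncio.gather(*(worker(t) for t in texts))


async def fake_translate(text):
    # Hypothetical stand-in for a real API call.
    await asyncio.sleep(0.01)
    return text.upper()


results = asyncio.run(
    translate_all(["a", "b", "c"], fake_translate, max_parallel=2)
)
```

The speedup comes from overlapping the waiting time of each request, so it grows with the number of texts until the cap or the API's own limits kick in.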

Both now have a proper way to cancel translation requests mid-inference.
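
As a rough illustration of cancelling a request mid-flight, here is a small Python sketch using `asyncio` task cancellation. It is only an assumption about how such a feature could work, not the app's implementation, and `slow_translate` is a hypothetical stand-in for a long-running inference call.

```python
import asyncio


async def slow_translate(text):
    # Hypothetical stand-in for a long-running inference request.
    await asyncio.sleep(10)
    return text.upper()


async def main():
    task = asyncio.create_task(slow_translate("hello"))
    await asyncio.sleep(0.01)  # give the request time to start
    task.cancel()              # ask the in-flight request to stop
    try:
        await task
    except asyncio.CancelledError:
        return "cancelled"
    return "finished"


outcome = asyncio.run(main())
```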

Let me know if you find any issues with it! :)

Thank you ⭐