Interesting! I would have assumed this was an improper request body, like a missing n_predict or end tokens that never trigger. In my experience, leaving out n_predict on some models makes them keep generating until the request times out. I've never used LM Studio, but maybe it handles this automatically behind the scenes?
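
For reference, here's roughly what I mean by a "proper" body. This is a minimal sketch assuming a llama.cpp-style `/completion` endpoint that takes `prompt`, `n_predict` and `stop` fields. LM Studio's OpenAI-compatible API uses different names (e.g. `max_tokens`), so treat the field names as an assumption. `build_completion_body` is a hypothetical helper, and the stop strings are placeholders that depend on the model's prompt template.

```python
import json
from typing import Optional, Sequence


def build_completion_body(
    prompt: str, n_predict: int = 256, stop: Optional[Sequence[str]] = None
) -> dict:
    """Build a request body for a llama.cpp-style /completion endpoint (assumed).

    n_predict caps the number of generated tokens so the model cannot keep
    going until the request times out; stop lists strings that end generation early.
    """
    if n_predict <= 0:
        raise ValueError("n_predict must be positive to bound generation")
    return {
        "prompt": prompt,
        "n_predict": n_predict,
        "stop": list(stop) if stop else [],
    }


# Hypothetical stop strings; the right ones depend on the model's template.
body = build_completion_body(
    "Translate to English: こんにちは\nEnglish:",
    n_predict=128,
    stop=["\n", "</s>"],
)
print(json.dumps(body, ensure_ascii=False))
```

The explicit cap is the important part: even if the end token never matches, generation stops after `n_predict` tokens instead of hanging.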

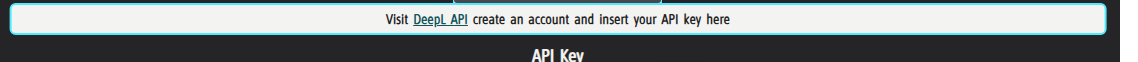

I'm considering adding another LLM translator backend that runs on GPU only and produces better translations. I'm not sure which one to go with, though, and it needs to be relatively small.

No worries, glad it's faster for you! I'm wondering if I could somehow add a batching option too. It would depend heavily on the API, but there may be a good way to implement it.
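
One API-agnostic way batching could work is to pack several lines into one numbered prompt and split the reply back apart by number. This is just a sketch of that idea, not something the project does today: `chunk`, `build_batch_prompt` and `parse_batch_reply` are hypothetical helpers, and it assumes the model keeps the numbering intact. If it doesn't, the parser returns `None` so the caller can fall back to one request per line.

```python
import re
from typing import Iterator, List, Optional


def chunk(items: List[str], size: int) -> Iterator[List[str]]:
    """Yield consecutive slices of at most `size` items."""
    if size < 1:
        raise ValueError("size must be >= 1")
    for start in range(0, len(items), size):
        yield items[start:start + size]


def build_batch_prompt(lines: List[str]) -> str:
    """Number each line so the model's answer can be split back apart."""
    numbered = "\n".join(f"{i}. {line}" for i, line in enumerate(lines, 1))
    return "Translate each numbered line to English, keeping the numbering:\n" + numbered


_NUMBERED = re.compile(r"^\s*(\d+)\.\s*(.*)$")


def parse_batch_reply(reply: str, expected: int) -> Optional[List[str]]:
    """Return translations in input order, or None if the numbering doesn't line up."""
    found = {}
    for line in reply.splitlines():
        m = _NUMBERED.match(line)
        if m:
            found[int(m.group(1))] = m.group(2).strip()
    if sorted(found) != list(range(1, expected + 1)):
        return None  # caller can fall back to translating line by line
    return [found[i] for i in range(1, expected + 1)]


lines = ["こんにちは", "ありがとう", "さようなら"]
batches = list(chunk(lines, 2))  # [['こんにちは', 'ありがとう'], ['さようなら']]
print(build_batch_prompt(batches[0]))
print(parse_batch_reply("1. Hello\n2. Thank you", expected=2))  # ['Hello', 'Thank you']
```

Numbering keeps it independent of any server-side batching feature, at the cost of relying on the model to follow the format.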

Thank you ༼ つ ◕_◕ ༽つ