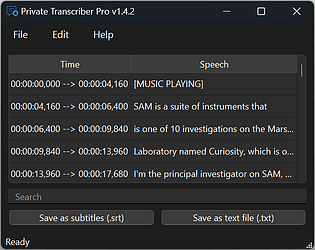

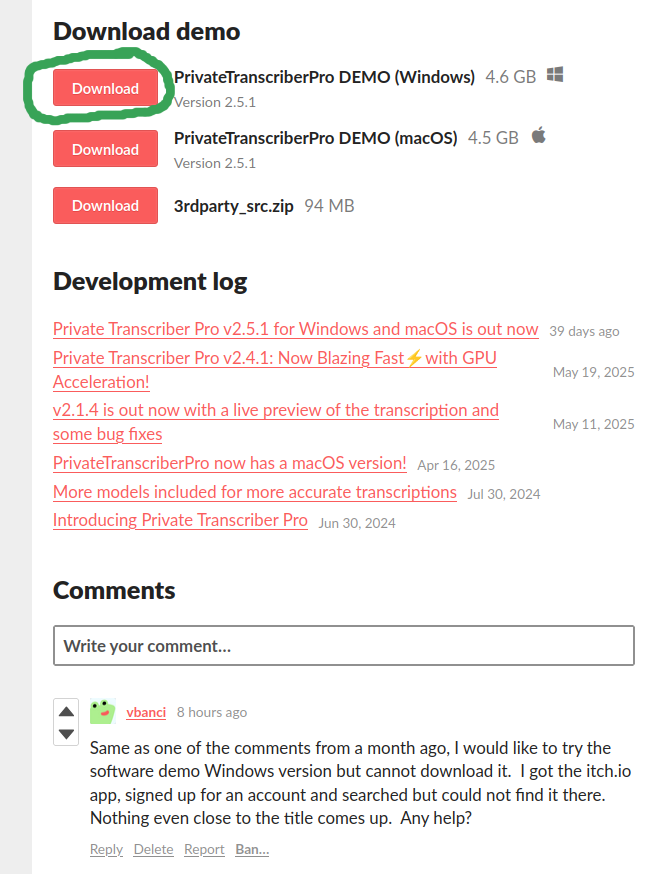

Hey, the demo works exactly the same way as the full version, just with some limitations.

If you tried the demo and it didn't work, then you shouldn't have bought it.

Also, the software does work, as hundreds of happy paying customers can attest.

You can absolutely request a refund through itch.io, but please be honest.