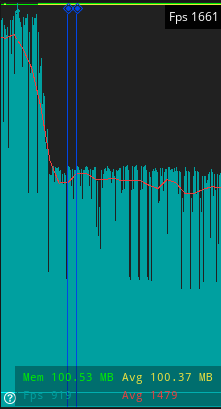

Question about optimization. I've tried using emissive particles, and they seem to be pretty inefficient, even in the example provided. If I have 7 le_light_fire at 1080p, my frame rate dips below 51 FPS. For context, I can run modern games (e.g., Baldur's Gate 3) on the highest settings with no problem on this same rig.

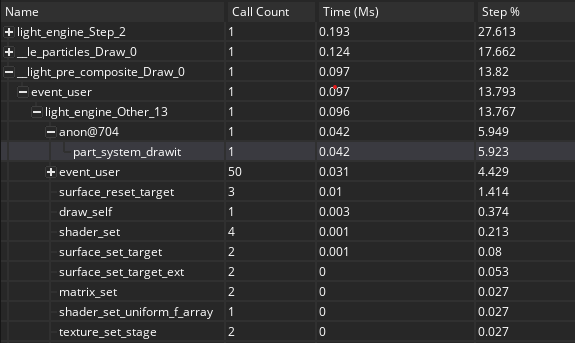

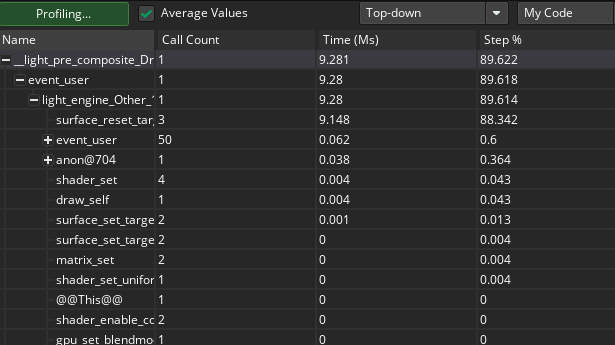

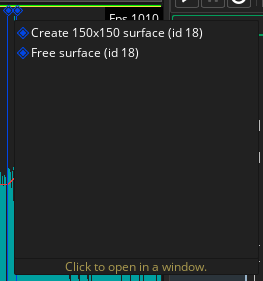

When I ran the debugger, it showed that 15% of the step was consumed by light_pre_composite, and it looks like surface reset target during light engine other 13 was the largest single cost within that (3%). Is there a way to do particles/lighting that doesn't consume so many resources? Everything else runs perfectly.