The particles rely on GM's built-in particle system, so performance may be limited by how efficiently it is implemented internally. Unfortunately, GM still uses a very old graphics API implementation, and yes, you will find that relatively simple things cost more than they do in newer games like the ones you mentioned.

To troubleshoot, please answer:

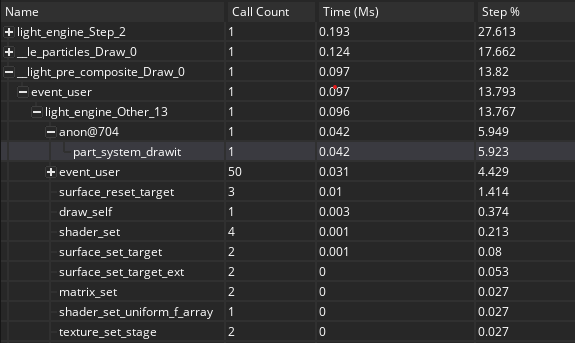

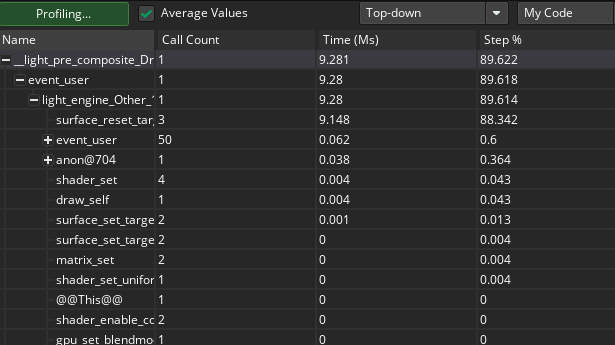

- What is the actual time in milliseconds that you can see?

- Have you run the same test compiled with YYC? (You'd need to output your own timing or FPS values.)

- What is your GPU utilization actually at when the FPS is that low? (Task Manager > Performance > GPU)
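For the YYC test, here is a rough sketch of how you could output your own numbers. It assumes a dedicated controller object, and all the variable names (`frame_ms_sum`, `fps_real_sum`, `frame_count`, `avg_frame_ms`, `avg_fps_real`) are made up for illustration. It only uses the built-ins `delta_time`, `fps_real`, `get_timer()`, `show_debug_message()` and `draw_text()`. The three parts go in the Create, Step and Draw GUI events of that object.

```gml
/// Create event: accumulators for a rolling average (hypothetical names)
frame_ms_sum = 0;
fps_real_sum = 0;
frame_count  = 0;
avg_frame_ms = 0;
avg_fps_real = 0;

/// Step event: average over 60 frames so the numbers are readable
frame_ms_sum += delta_time / 1000; // delta_time is in microseconds
fps_real_sum += fps_real;          // FPS the game could reach if uncapped
frame_count  += 1;

if (frame_count >= 60)
{
    avg_frame_ms = frame_ms_sum / frame_count;
    avg_fps_real = fps_real_sum / frame_count;
    show_debug_message("avg frame: " + string(avg_frame_ms) + " ms, avg fps_real: " + string(avg_fps_real));

    frame_ms_sum = 0;
    fps_real_sum = 0;
    frame_count  = 0;
}

/// Draw GUI event: show the averages on screen, plus the cost of one section
var _t0 = get_timer(); // microseconds since the game started

// ... the drawing code you want to measure goes here ...

var _section_ms = (get_timer() - _t0) / 1000;

draw_text(8, 8,  "frame: " + string(avg_frame_ms) + " ms");
draw_text(8, 28, "fps_real: " + string(avg_fps_real));
draw_text(8, 48, "section: " + string(_section_ms) + " ms");
```

Keep in mind that `get_timer()` around draw calls only measures the CPU time spent submitting them, not how long the GPU takes to finish, which is why the GPU utilization figure is still worth checking separately.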

surface_reset_target() is a pipeline change, but if that is the most costly thing you are seeing, that is actually good news, because it is not a costly call.

15% of the step is fine (but we really need to know the milliseconds), and it is normal for rendering to take the largest share of the time. The question is where the other 85% is going. GM did recently change some things about particles, and there could be a lot of data going between the CPU and GPU, which would be slow if done poorly.