Problem: The internal Japanese-English translation model produces poor-quality translations. DeepL's free tier is limited, and its Pro tier is very expensive.

Possible Solution: Allow users to send translation requests to an arbitrary API endpoint, and have GameTranslate process the response and overlay it onto the game window in automatic mode. Most people would probably use this to route requests to a local web server, but in principle it could also connect to a remote endpoint, provided its performance is acceptable.

I would like to be able to specify:

- An endpoint URL

- Metadata (request headers, etc.)

- A request body template containing a placeholder token (e.g. `%text%`) that is replaced with the text captured by GameTranslate

- The JSON path to the field in the response object that contains the translated text

- Optionally, a way to filter unwanted text out of the response
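To make these settings concrete, here is a minimal Python sketch of how one request could be handled. It assumes a JSON request body, a simplified dotted path syntax (not full JSONPath), and a regular expression as the optional filter. All function names are hypothetical and are not part of GameTranslate. One detail worth noting: the captured text is JSON-escaped before it replaces `%text%`, so quotes or line breaks in game dialogue cannot break the request body.

```python
import json
import re
import urllib.request
from typing import Optional

# Hypothetical helpers illustrating the proposed settings; not a GameTranslate API.

def build_body(template: str, text: str) -> str:
    """Replace the %text% placeholder with the captured text, JSON-escaped."""
    # json.dumps adds surrounding quotes; strip them because the template
    # is assumed to already wrap %text% in a quoted string literal.
    escaped = json.dumps(text, ensure_ascii=False)[1:-1]
    return template.replace("%text%", escaped)

def extract_path(obj, path: str):
    """Follow a simplified dotted path such as 'choices.0.message.content'."""
    for key in path.split("."):
        # Numeric segments index into lists; other segments index into objects.
        obj = obj[int(key)] if isinstance(obj, list) else obj[key]
    return obj

def apply_filter(text: str, pattern: Optional[str]) -> str:
    """Optionally delete every regex match from the translated text."""
    if pattern:
        text = re.sub(pattern, "", text, flags=re.DOTALL)
    return text.strip()

def translate(url: str, headers: dict, template: str, response_path: str,
              text: str, filter_pattern: Optional[str] = None) -> str:
    """POST the filled-in template and return the filtered translation."""
    body = build_body(template, text).encode("utf-8")
    req = urllib.request.Request(url, data=body, headers=headers, method="POST")
    with urllib.request.urlopen(req) as resp:
        payload = json.load(resp)
    return apply_filter(str(extract_path(payload, response_path)), filter_pattern)
```

Keeping the template as raw text, rather than a structured object, means the same mechanism works for any JSON API shape the user points it at.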

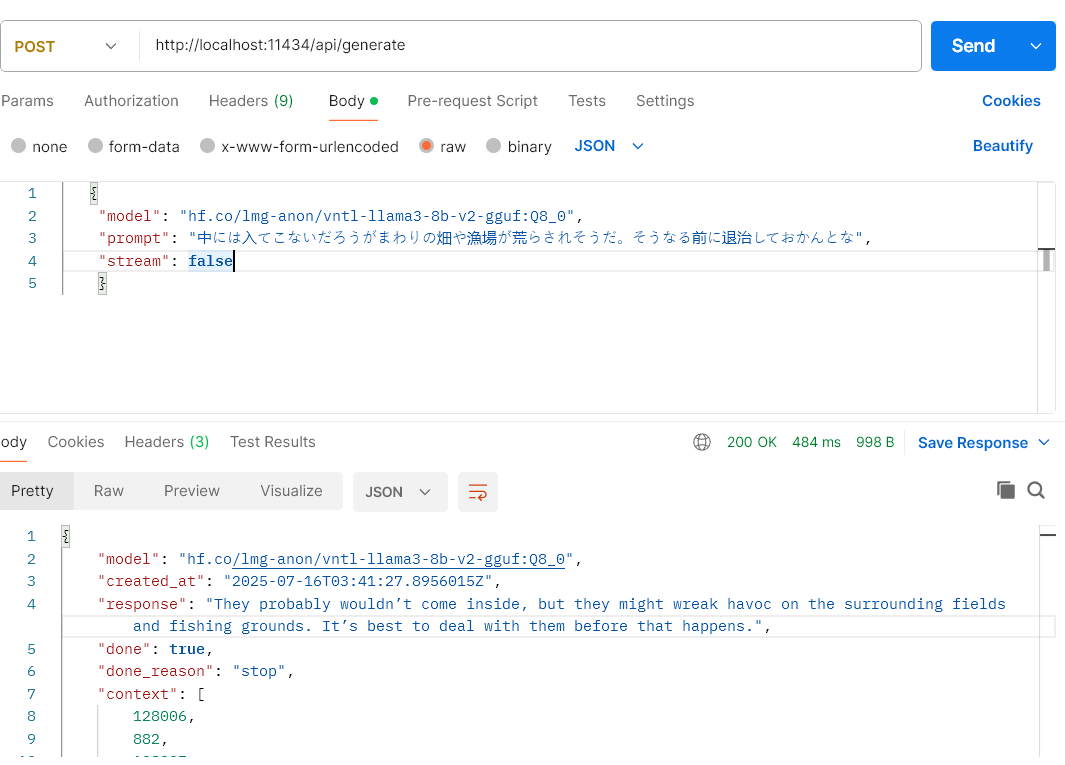

Example: The screenshot above shows an API call to the `hf.co/lmg-anon/vntl-llama3-8b-v2-gguf:Q8_0` language model. I would like to pipe its `response` field into GameTranslate.
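The `hf.co/...:Q8_0` model name appears to be the form Ollama uses for GGUF models pulled from Hugging Face. Assuming that is the server here, the proposed settings would map roughly to the URL `http://localhost:11434/api/generate` (Ollama's default port), a body of `{"model": ..., "prompt": "%text%", "stream": false}`, and the response path `response`. The Python sketch below makes that call by hand. The URL, port, and raw-prompt format are assumptions, and the helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: the model is served by a local Ollama instance on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL = "hf.co/lmg-anon/vntl-llama3-8b-v2-gguf:Q8_0"

def make_payload(text: str) -> dict:
    # Simplification: the captured line is sent as the raw prompt; any
    # model-specific prompt format is not reproduced here.
    # "stream": False asks Ollama for one JSON object instead of streamed chunks.
    return {"model": MODEL, "prompt": text, "stream": False}

def read_translation(reply: dict) -> str:
    # A non-streaming /api/generate reply carries the generated text in "response".
    return reply["response"].strip()

def translate(text: str) -> str:
    req = urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(make_payload(text), ensure_ascii=False).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return read_translation(json.load(resp))
```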

This software is pretty rad so far. I look forward to watching it evolve!