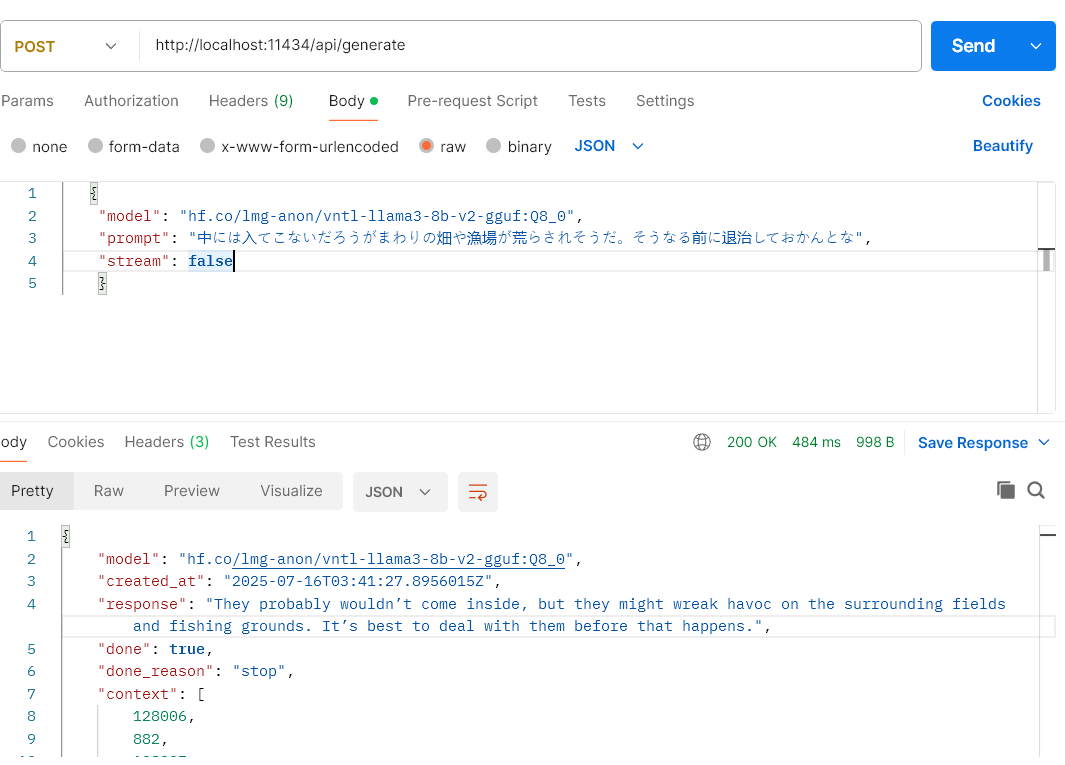

The results are surprising. Increasing the image scale factor makes no noticeable difference, but reducing it clearly improves the OCR results. A scale factor of 0.25 seems to work best.
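The post doesn't show how the scale factor is applied. The sketch below assumes it means resizing the input image before it is passed to the OCR engine. It uses Pillow for the resize, and `downscale_for_ocr` is a hypothetical helper. It is not part of PaddleOCR and does not reproduce PaddleOCR's own internal preprocessing.

```python
from PIL import Image


def downscale_for_ocr(img: Image.Image, factor: float = 0.25) -> Image.Image:
    """Resize an image by `factor` before handing it to an OCR engine.

    Hypothetical helper: factor < 1 shrinks the image (0.25 keeps a quarter
    of each side), factor > 1 enlarges it.
    """
    if factor <= 0:
        raise ValueError("factor must be positive")
    # Scale both sides, keeping at least 1 pixel in each dimension.
    new_size = (
        max(1, round(img.width * factor)),
        max(1, round(img.height * factor)),
    )
    # LANCZOS resampling tends to keep glyph edges clean when shrinking.
    return img.resize(new_size, Image.LANCZOS)
```

For example, a full 3840×2160 screenshot scaled by 0.25 becomes 960×540. That brings large on-screen text down to a glyph height closer to ordinary screen resolutions. The result can be converted with `numpy.asarray(...)` and passed to whichever PaddleOCR call you already use.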

I'm running all these tests on a 4K display. Could it be that PaddleOCR struggles with text that is very large in absolute pixel size?