I have been discussing this with a colleague and very good friend, a neuroscientist specializing in vision science. You will gain a massive improvement in a person's susceptibility and vision stability by making the random "dot" aspect ratio longer in the vertical, so it becomes a random "strip" autostereogram (e.g. 1 pixel wide x 5 pixels high). There may also be ways to optimize the "randomness" using the irrational "Phi" in whatever algorithm you are using. We have discussed ways to make the image full colour with alternating and mixed RGB between both images, though frame rate will be limiting. Looking forward so much to seeing this develop.

From what I can see you are using a 3x3 block as a pixel. If you shift the 3x3 block each frame by 2 pixels in both x and y you can achieve an effective "screen resolution". FYI, 2/3 is a ratio of Fibonacci numbers. But as mentioned, you will benefit from an aspect ratio longer in the vertical, perhaps a 2Hx5V block with the pixel shifting 3H and 8V each frame (again, this is using Fibonacci numbers).
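To check how much of a block a given per-frame shift actually covers, here is a minimal sketch. It assumes the shift is applied to the block origin and wraps modulo the block size; `block_offsets` is a hypothetical helper, not anything from the project.

```python
def block_offsets(block_w, block_h, shift_x, shift_y):
    """Return the distinct (x, y) block-origin offsets visited over successive frames."""
    seen = []
    x = y = 0
    # Step the origin once per frame until the pattern repeats.
    while (x, y) not in seen:
        seen.append((x, y))
        x = (x + shift_x) % block_w
        y = (y + shift_y) % block_h
    return seen


if __name__ == "__main__":
    for args in [(3, 3, 2, 2), (2, 5, 3, 8)]:
        offsets = block_offsets(*args)
        total = args[0] * args[1]
        print(args, "visits", len(offsets), "of", total, "positions:", offsets)
```

Under these assumptions the 3x3 block shifted by 2 in both axes only visits 3 of its 9 positions, because x and y move in lockstep, while the 2x5 block shifted by 3H and 8V visits all 10 before repeating.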

I can see you are using 4(?) colours in your random colour. If you use 3 (RGB) you should be able to implement full colour. Just split your actual colour "randomly" (phi) across both images in a "randomly" cycling manner, giving an average of the actual colour.

Full colour and full screen resolution are the easier bit.

Infinite depth is possible when each frame consists of part of an image from each depth.

At the moment you are displaying 16(?) depths on each frame, so display 16 shifting depths. Shift each of those depths by a fraction of 0.618 (phi) of the space between depths, but only render part of each depth, in a "random" distributed cycle across the screen. Each 2x5 pixel block on one frame on either side of the screen then has a partner (or at most a partner in the frame before or after it), but each adjacent 2x5 block will likely represent a different depth, and each pixel on the screen is likely changing on each frame. This should give you your "infinite" depth autostereogram (limited only by the screen resolution).
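One possible reading of the depth-shifting idea, as a rough sketch: keep 16 evenly spaced depth planes, and on every frame slide all of them by a fraction of one plane spacing that advances by 0.618 per frame. The function name, the 16-plane default and the wrap-around are assumptions made for illustration.

```python
import math

PHI_FRACTION = (math.sqrt(5) - 1) / 2  # ~0.618, the value the post calls phi


def depth_planes(frame, num_planes=16):
    """Depth values in [0, 1) for one frame: evenly spaced planes plus a per-frame offset."""
    spacing = 1.0 / num_planes
    # Offset is a fraction of one plane spacing; it wraps back into [0, 1).
    offset = (frame * PHI_FRACTION) % 1.0
    return [(k + offset) * spacing for k in range(num_planes)]


if __name__ == "__main__":
    for frame in range(4):
        print(frame, [round(d, 4) for d in depth_planes(frame)[:3]])
```

Because 0.618... is irrational, the offsets never repeat exactly, so over many frames the planes sweep through the gaps between the original 16 depths. Which screen blocks get which plane on a given frame is left out of this sketch.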

Phi is irrational and will be impossible to work with exactly, so using ratios of the rational Fibonacci numbers will make this possible:

0, 1, 1, 2, 3, 5, 8, 13, 21, 34, 55, 89, 144, 233, 377, 610, 987, 1597, 2584, ...
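As a quick illustration of why Fibonacci numbers can stand in for phi, the ratios of consecutive terms converge to 0.618... very quickly. This is a small sketch; the function names are made up for the example.

```python
from fractions import Fraction


def fibonacci(n):
    """First n Fibonacci numbers, starting 0, 1, 1, 2, ..."""
    seq = [0, 1]
    while len(seq) < n:
        seq.append(seq[-1] + seq[-2])
    return seq[:n]


def phi_approximations(n):
    """First n ratios F(k)/F(k+1) of consecutive Fibonacci numbers, as exact fractions."""
    fib = fibonacci(n + 2)[1:]  # drop the leading 0 to avoid 0/1
    return [Fraction(a, b) for a, b in zip(fib, fib[1:])]


if __name__ == "__main__":
    for ratio in phi_approximations(10):
        print(ratio, float(ratio))
```

The 2/3 ratio mentioned earlier in the thread shows up as the third entry, and later entries such as 5/8 or 8/13 are closer approximations that are still small enough to use as pixel counts.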

The stereogram essentially consists of 2 images constructed of noise that has been shifted. Distribute the RGB colour across each image and across frames (across space and time). So say you want a pixel to be purple: the left and right corresponding 2x5 pixel groups might be a collection of red and blue (#FF0000 and #0000FF) in a random distribution across the screen and across sequential frames.
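A minimal sketch of the "split the colour so the average is the real colour" idea, assuming plain per-channel arithmetic averaging of 8-bit values between the left and right pixel groups. `split_channel` and `split_colour` are hypothetical names, and the uniform random choice is a stand-in for whatever distribution ends up being used.

```python
import random


def split_channel(value, rng):
    """Split one 0..255 channel into (left, right) with left + right == 2 * value."""
    lo = max(0, 2 * value - 255)  # keeps right <= 255
    hi = min(255, 2 * value)      # keeps right >= 0
    left = rng.randint(lo, hi)
    return left, 2 * value - left


def split_colour(rgb, rng=random):
    """Split an (r, g, b) colour into a left and a right colour that average to it."""
    pairs = [split_channel(c, rng) for c in rgb]
    left = tuple(p[0] for p in pairs)
    right = tuple(p[1] for p in pairs)
    return left, right


if __name__ == "__main__":
    left, right = split_colour((128, 0, 128))
    print(left, right)
```

Under plain averaging, pure red and pure blue (#FF0000 and #0000FF) average to a half-brightness purple rather than #FF00FF, so very saturated, bright targets leave little room to randomise.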

The big improvement will come if you can manage to implement the depth shifting, which I feel comes hand in hand with the pixel shifting. This will give you full screen resolution and infinite depth, and it negates aliasing.

Might sound hard to believe, but I had this very idea about 12 months ago, so I've had some time to think about it. We were going to use idtech4 BFG and integrate other C++ physics simulators to develop and train A.I., but our principal programmer had R.L. issues so we never got around to really starting. I hope you appreciate what you are about to unleash: turning every 2D screen into a 3D puppet show. The games will be one thing, but the applications in real-time medical imaging... well, let's just say you're a pioneer, sir, and it's a real honor to greet you.

So DoomLoader basically ported DOOM to Unity. What I'm doing is running DoomLoader in the background (so it's still a 3D game, technically), but I overlay a UI texture across the screen. I have a script that takes the 3D camera feed, turns it into a render texture (just an array of the pixels that the camera would normally display to the player), applies the algorithm from the paper, and then feeds that to the UI texture as the end display.

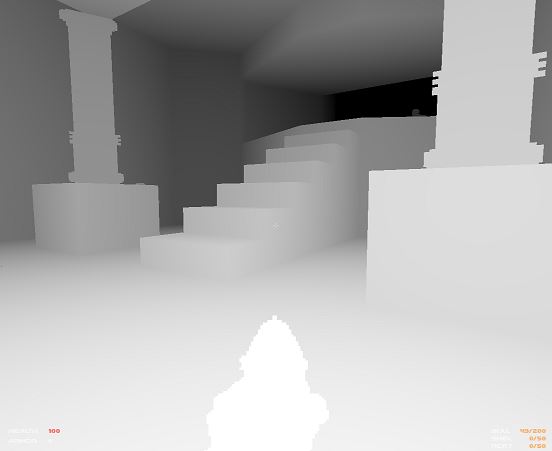
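For anyone who wants to see roughly what the "applies the algorithm" step does to one row of the render texture, here is a simplified Python sketch of a constraint-linking random-dot stereogram row. It is not the Unity script. It leaves out hidden-surface removal, outputs black/white pixels only, and the constants (72 DPI, 2.5 inch eye separation, mu = 1/3) are assumed values rather than the project's settings.

```python
import random

DPI = 72                  # assumed output resolution
E = int(2.5 * DPI + 0.5)  # eye separation in pixels, assuming 2.5 inches
MU = 1 / 3                # depth of field


def separation(z):
    """Stereo separation in pixels for depth z in [0, 1], rounded half up."""
    return int((1 - MU * z) * E / (2 - MU * z) + 0.5)


def stereogram_row(depth_row, rng=random):
    """Turn one row of depths into one row of black/white pixels (0 or 1)."""
    width = len(depth_row)
    same = list(range(width))  # same[x] = pixel to the right that x must match
    for x, z in enumerate(depth_row):
        s = separation(z)
        left = x - s // 2
        right = left + s
        if left < 0 or right >= width:
            continue
        # Merge the new left/right constraint with links that already exist.
        k = same[left]
        while k != left and k != right:
            if k < right:
                left = k
            else:
                left, right = right, k
            k = same[left]
        same[left] = right
    pixels = [0] * width
    # Colour from right to left so every linked pixel copies a finished one.
    for x in reversed(range(width)):
        pixels[x] = rng.randint(0, 1) if same[x] == x else pixels[same[x]]
    return pixels


if __name__ == "__main__":
    row = stereogram_row([0.0] * 200 + [0.5] * 100 + [0.0] * 200)
    print("".join("#" if p else "." for p in row[:120]))
```

A flat depth row produces a pattern that repeats every `separation(z)` pixels, and changes in depth show up as changes in that repeat distance.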

The key is that the algorithm needs a depth map. So I made a couple shaders that almost all the objects are now using, which ignore the DOOM textures and instead make all objects greyscale, depending on their distance from the player. So underneath the stereogram, the game is just playing, and every frame looks like a depth map. I'll post an image of that below, with a high "render distance" setting.
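The distance-to-grey mapping can be sketched like this. It is Python rather than shader code, the real shaders are not shown in the thread, and the choice that nearer objects are brighter is an assumption, as are the function names.

```python
def distance_to_grey(distance, render_distance):
    """Map a distance to an 8-bit grey level; nearer is brighter (an assumption)."""
    # Clamp so anything beyond the render distance becomes black.
    fraction = min(max(distance / render_distance, 0.0), 1.0)
    z = 1.0 - fraction
    return int(z * 255 + 0.5)


def grey_to_z(grey):
    """Recover the z value in [0, 1] that the stereogram step reads from a grey level."""
    return grey / 255.0


if __name__ == "__main__":
    for d in (0, 25, 50, 100, 250):
        g = distance_to_grey(d, render_distance=100)
        print(d, g, round(grey_to_z(g), 3))
```

A larger `render_distance` spreads the same 256 grey levels over more of the scene, so distant geometry stays distinguishable at the cost of coarser steps up close.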

The rounding that I've mentioned is in reference to the paper, specifically line 27 if you look at the code in Figure 3 here. The equation is `s = round((1-mu*z) * E/(2-mu*z))`, which will make more sense if you read the rest.

Okay, I understand, so it converts to 4-bit greyscale, thus 16 depths. So it needs to convert to 8-bit, thus 256 depths, but grab every 16th layer on one frame, then shift forward one step and grab every 16th +1 depth on the next, 16th +2 on the next, and so on. That seems relatively simple. That means each of the 256 depths will be displayed about 4 times every second. That sounds perfect. Could implement Fibonacci later but keep it simple for now, I suppose.
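The interleaving proposed here can be sketched as follows, assuming 256 depth layers and a stride of 16 as in the post. `layers_for_frame` is a hypothetical helper.

```python
def layers_for_frame(frame, total_layers=256, stride=16):
    """Depth layers shown on a given frame: every stride-th layer, offset by the frame number."""
    offset = frame % stride
    return list(range(offset, total_layers, stride))


if __name__ == "__main__":
    for frame in range(3):
        print(frame, layers_for_frame(frame)[:4], "...")
    covered = set()
    for frame in range(16):
        covered.update(layers_for_frame(frame))
    print("layers covered after 16 frames:", len(covered))
```

A full cycle takes 16 frames, so the "about 4 times every second" figure corresponds to roughly 64 frames per second.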

It's not quite that simple. I'm already using full 32-bit textures, so 8 bits for the grey. Greyness corresponds to z in the algorithm (z is a value between 0 and 1, representing distance from the player). The issue isn't the granularity of the z values, but the fact that two different z values will round to the same separation value in the algorithm.

This is just a standard way to round to the nearest int, which needs to be done because pixels come in wholes: you can't have a separation of 60.5 pixels, for example.
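To make the collapse concrete, here is a small sketch that pushes all 256 grey levels through the separation formula and counts how many land on each whole-pixel separation. The constants (72 DPI, E as 2.5 inches in pixels, mu = 1/3) and the round-half-up rule are assumptions, not the project's actual settings.

```python
from collections import Counter

DPI = 72                  # assumed output resolution
E = int(2.5 * DPI + 0.5)  # eye separation in pixels, assuming 2.5 inches
MU = 1 / 3                # depth of field


def separation(z):
    """s = round((1 - mu*z) * E / (2 - mu*z)), rounding half up."""
    return int((1 - MU * z) * E / (2 - MU * z) + 0.5)


def separation_histogram(levels=256):
    """Count how many evenly spaced z levels map to each separation value."""
    return Counter(separation(i / (levels - 1)) for i in range(levels))


if __name__ == "__main__":
    hist = separation_histogram()
    print("distinct separations:", len(hist))
    for s in sorted(hist):
        print(s, hist[s])
```

With these assumed constants the separations run from 90 pixels at z = 0 down to 72 pixels at z = 1, so 256 grey levels end up on only 19 distinct layers.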

So the only things I can change that would affect the layers are DPI and mu (depth of field). There are combinations of these that give a better spread of separation, but they often end up messing up the stereogram.
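A quick way to explore that trade-off is to count the distinct separation values for a few DPI and mu combinations. This sketch only counts layers and says nothing about whether the result is still comfortable to view. The eye separation of 2.5 inches and the specific values tried are assumptions.

```python
def separation(z, dpi, mu):
    """Rounded stereo separation in pixels for depth z, given DPI and depth of field mu."""
    eye = int(2.5 * dpi + 0.5)  # eye separation in pixels, assuming 2.5 inches
    return int((1 - mu * z) * eye / (2 - mu * z) + 0.5)


def distinct_layers(dpi, mu, levels=256):
    """Number of different separations produced by evenly spaced z levels."""
    return len({separation(i / (levels - 1), dpi, mu) for i in range(levels)})


if __name__ == "__main__":
    for dpi in (72, 96, 144):
        for mu in (1 / 3, 1 / 2):
            print(f"dpi={dpi} mu={mu:.3f} layers={distinct_layers(dpi, mu)}")
```

Raising either value widens the range of separations and so increases the layer count, which matches the "better spread" observation. The side effects on the stereogram itself would have to be judged by eye.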