Out of curiosity, does it also do NSFW stuff reliably? Haven't tried it myself yet.

MaliBogic997

Recent community posts

One solution is to adjust the rules to narrow down what you want from the AI, but what works is different for everyone. Besides, some AI models only avoid the exact example you gave, if you gave one at all. For example, if you set a rule that the AI can't use "and another, and another, and another...", it may still use "and again, and again, and again...". I had the same issue with one AI model a long time ago. No matter what I set in the rules, it kept doing its own thing.

It sounds like a memory issue. LM Studio has a built-in failsafe that triggers when the memory available to the language model becomes too small for the amount of data it needs to store. When that happens, it trims out some of the data to make room for the new stuff. By default, LM Studio trims out the middle, keeping the start (typically the world rules and entities) and the end (at least the most recent chat message) intact. Everything in between gets thrown out, which could explain why the AI thinks you are back at the start.

If that's the case, try increasing the allocated memory so that the model can remember more. In LM Studio, when loading the model, change the "Context Length" to a higher value. Maybe try doubling it for now.

You will have to apply the same number in-game under the "Max memory" setting so that Formamorph knows how much memory it has available.
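To illustrate the "trim the middle" behaviour described above, here is a minimal Python sketch. It is not LM Studio's actual code: it assumes the context is a list of chat messages, that the first `keep_start` messages hold the world rules and entities, and it uses a rough word count as a stand-in for real token counting. The function name and its parameters are hypothetical.

```python
def truncate_middle(messages, max_tokens, keep_start=1):
    """Drop messages from the middle until the estimated size fits max_tokens.

    Hypothetical illustration only: token cost is approximated by word count.
    """
    def cost(msg):
        # Rough stand-in for a tokenizer: one "token" per whitespace-separated word.
        return len(msg["content"].split())

    head = messages[:keep_start]  # world rules / entities at the start
    tail = messages[keep_start:]  # everything after, oldest first (a copy)
    # Remove the oldest non-head message until the total fits,
    # but always keep at least the most recent message.
    while len(tail) > 1 and sum(map(cost, head + tail)) > max_tokens:
        tail.pop(0)
    return head + tail


history = [
    {"role": "system", "content": "World rules: it is always cold."},
    {"role": "user", "content": "I enter the forest."},
    {"role": "assistant", "content": "Snow crunches under your feet."},
    {"role": "user", "content": "I follow the tracks."},
]
print([m["content"] for m in truncate_middle(history, max_tokens=12)])
# ['World rules: it is always cold.', 'I follow the tracks.']
```

The middle of the story is what disappears, so the model only "sees" the rules and the latest message, which matches the symptom of the AI acting as if you were back at the start. A larger context length simply raises `max_tokens` here, so fewer messages get dropped.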

Okay, the game seems to be sending an "OPTIONS" request, which isn't what should be happening. It should be "POST". At least now we can confirm that LM Studio isn't the one acting up. Formamorph itself isn't sending the request correctly, which is leading to these problems.

Something you could try is to reinstall the game. That might help clear it up. Before you do that though, please "download" any custom worlds that you have and want to keep. I'm fairly certain they will be deleted from the main menu when you uninstall the game.

I've gotta be honest here: I don't know of a "messages" field in LM Studio. I just double-checked, and as of LM Studio version 0.3.15 there is no messages field. You can add a system prompt, but it is by no means mandatory (and I'm pretty sure it does nothing for Formamorph).

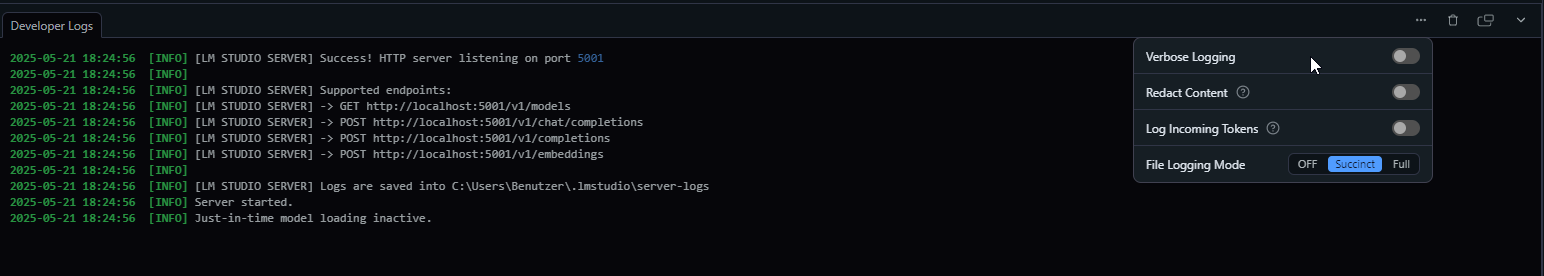

It seems like Formamorph isn't sending the data correctly, so the AI doesn't know how to interpret it. Within LM Studio, can you please turn on Verbose Logging and try another prompt? Maybe that will shed some light on where it's going wrong.

The system prompt can be used to describe the world rules to the AI. For example: "It is always cold."

The dictionary, as far as I can tell, is a way to explain words to the AI that it normally wouldn't be familiar with. For example, if you want to create your own race for a character with a custom name, you can set the name as the "key" and then explain what the race looks like and what they do in the "value".
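I don't know how the game actually uses the dictionary internally, so this is only a guess at how such a key/value lookup could work: find which keys appear in the current text and collect their explanations so they can be passed to the AI. The function name, the whole-word case-insensitive matching, and the example race are all hypothetical.

```python
import re


def build_dictionary_notes(text, dictionary):
    """Return explanations for dictionary keys that appear in text (hypothetical)."""
    notes = []
    for key, value in dictionary.items():
        # Whole-word, case-insensitive match on the key.
        if re.search(rf"\b{re.escape(key)}\b", text, flags=re.IGNORECASE):
            notes.append(f"{key}: {value}")
    return "\n".join(notes)


dictionary = {
    "Glimmerkin": "A small, fox-like race with glowing fur that lives in caves.",
}
print(build_dictionary_notes("A glimmerkin watches you from the rocks.", dictionary))
# Glimmerkin: A small, fox-like race with glowing fur that lives in caves.
```

The point of the key/value split is that the short key is what shows up in the story, while the longer value carries the description the AI would otherwise have to invent.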

I'm not sure where you're getting that warning, but if you simply want longer responses, turn up the "Max Output Tokens" in the game's endpoint settings. Additionally, instruct the AI to add more detail wherever possible. Also, please make sure that the "Single-Paragraph Event" setting (found under the Gameplay settings in-game) is disabled.

It could very well be the "One Paragraph" option inside of the game! If that is enabled, then the AI will be forced to deliver much shorter answers.

However, if the option is disabled and you are still getting unusually short responses, you can try changing the instructions to make the AI add a lot of detail. Here is one I built a while ago:

---

You are an AI for a game narrative. Given the current game world information, within two paragraphs in plaintext and separated from each other using new lines, explain the world or any currently happening event in detail to the player. The key point here is that it should feel less like a game and more like something that is actually happening. Because of this, make no mentions of this being a game or any rules that are set in place.

---

Based on some Google searches, it is most likely a false positive. HEUR (heuristic) detections come from a feature of your antivirus that analyzes a program's code and, if the code doesn't meet certain criteria, flags it as malware to be on the safe side. This is mostly used to catch adware or PUPs (Potentially Unwanted Programs). However, it can produce false positives, since not all code that gets flagged this way is actually malicious. I also doubt the dev would suddenly make it malicious, especially since they have now made the source code available.

You're also more likely to run into this detection if you use Avira as your antivirus software. It seems to be more common with them.

Sources that I found:

https://www.reddit.com/r/antivirus/comments/1dde6hm/got_a_heur_virus_on_my_lapto...

https://support.avira.com/hc/en-us/articles/360000819265-What-is-a-HEUR-virus-wa...

If the port is 1234, the URL should look like this:

http://localhost:1234/v1/chat/completions
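To check whether LM Studio answers correctly without the game in between, you can send a POST request to that URL yourself. Below is a minimal Python sketch using only the standard library. It assumes the server is running on port 1234 with its OpenAI-compatible endpoint; "local-model" is a placeholder for whatever model identifier you have loaded. The `messages` list and `max_tokens` are fields of the request body itself (not settings in LM Studio's interface); that `max_tokens` corresponds to the game's "Max Output Tokens" setting is an assumption. A script like this sends the POST directly, with no OPTIONS request beforehand.

```python
import json
import urllib.request

URL = "http://localhost:1234/v1/chat/completions"


def build_request(prompt, model="local-model", max_tokens=512):
    """Build (but don't send) a POST request to the chat completions endpoint."""
    body = {
        "model": model,  # placeholder: use the identifier of your loaded model
        "messages": [
            {"role": "system", "content": "It is always cold."},
            {"role": "user", "content": prompt},
        ],
        "max_tokens": max_tokens,  # upper limit on the length of the reply
    }
    return urllib.request.Request(
        URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(prompt):
    """Send the request and return the model's reply text."""
    with urllib.request.urlopen(build_request(prompt), timeout=120) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]


# With LM Studio's server running:
# print(send("Describe the forest I just entered."))
```

If this returns a normal reply, LM Studio and the model are fine, and the problem is on the game's side.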

No worries, your English is good! And yeah, now that you mention it like that, the ability of the AI to create custom entities based on the situation was pretty neat.

Right now, my impression is that the AI doesn't actually manage the entity list itself. Instead, the game checks whether the response contains an entity's name and, if so, adds that entity to the list. An update that adds something like a fourth prompt to bring back the old functionality would be really nice.

I guess the only workaround right now is to tell the AI to "name" entities, to give them unique traits, or to just make some up if the situation allows it. They probably won't show up in the entity list, but at least they can be built into the story.
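Here is a rough sketch of the name-matching behaviour I suspect the game uses. It is a guess, not Formamorph's actual code; the function name and the whole-word, case-insensitive matching are assumptions.

```python
import re


def detect_entities(response, known_entities, current_list):
    """Add every known entity whose name appears in the response (hypothetical)."""
    updated = list(current_list)
    for name in known_entities:
        mentioned = re.search(rf"\b{re.escape(name)}\b", response, flags=re.IGNORECASE)
        if mentioned and name not in updated:
            updated.append(name)
    return updated


known = ["Green Slime", "Kobold"]
print(detect_entities("A kobold peeks out from behind a rock.", known, []))
# ['Kobold']
```

An entity the AI invents on the spot, such as a wolf it names "Ash", never matches anything in `known_entities`, which would explain why made-up entities don't show up in the list.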

While I would also like an update with some added functionality, the entities can't exactly be "fixed" with an update. The AI model you use, along with the entity descriptions, determines how the entities behave. If you want the entities to behave differently, open the world settings and edit the prompts to match what you want. You can also add your own entities!

If the prompts are good but the AI is not responding well or just not behaving as it should, try a different AI model. The results vary a lot depending on which model you use, so you will have to try out a few of them to see which suits you best.

I haven't tried it myself, but sometimes you need to add extra emphasis to a rule for the AI to prioritize it. Example:

- Player is always the species X

> Might lead to the AI forgetting and assuming that you are a human or something else

- Player is **ALWAYS** the species X

> Signals to the AI that this information takes priority, so it considers it whenever it generates a response and keeps treating you as a different species

Typically, when I want an entity to act in a specific way, I just describe its behaviour in the entity's AI prompt. It is a hassle if you have to write a lot, but unfortunately you have to be specific; otherwise, the AI will make its own decisions, which might not be what you imagined. For example, in my own Veilwood version, I have tentacles that come out of the ground. I had to specify that they are tethered to the ground and can't extend or grow, because the AI assumed they could reach me on their own.

Can you post screenshots of the server config and the in-game config here? Also, try turning on Verbose Logging in LM Studio so that you get more detailed information on what the AI is doing.

To turn on Verbose Logging, open the three-dot menu next to the Developer Logs and toggle it on. Any prompts sent afterwards will show up there.

I personally tend not to use the 3D model because I make worlds where my characters are male or not even human (please add the option to load custom models in the desktop app, FieryLion 🙏), but here is the "world rule" I found in the default slime world:

- The player's stomach will grow overtime if she gets invaded by slime

I think it's enough to just tell the AI to increase the size of the stomach. There must be something running in the background, which we can't see or change, that processes such changes accordingly. I'm afraid this is a case of trial and error.

The world seems really good, well done! Just a small hint: when you create a new entity, you need to make sure that it can appear at the locations where you want it to. Each location has a checklist of all existing entities, where you can choose which ones can show up there. Currently, only the green slime is set to naturally appear in your world. :V
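The per-location checklist can be thought of as a mapping from each location to the entities allowed to appear there. This Python sketch, with hypothetical location and entity names (and not the game's real data format), shows why a new entity never appears until it is ticked for at least one location.

```python
# Hypothetical world: which entities are ticked in each location's checklist.
spawn_table = {
    "Swamp": {"Green Slime"},
    "Cave": {"Green Slime"},
}


def spawnable(location):
    """Entities allowed to appear at a location, sorted for stable output."""
    return sorted(spawn_table.get(location, set()))


def unused_entities(all_entities):
    """Entities that exist in the world but are not ticked for any location."""
    allowed = set().union(*spawn_table.values())
    return sorted(set(all_entities) - allowed)


entities = ["Green Slime", "Blue Slime", "Mimic"]
print(unused_entities(entities))  # ['Blue Slime', 'Mimic']

spawn_table["Cave"].add("Mimic")  # tick "Mimic" in the Cave's checklist
print(spawnable("Cave"))          # ['Green Slime', 'Mimic']
```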

Will do, thanks! I found the "uncensored" version of the Qwen2.5 7B Instruct model to work well while still offering the same feel as the original.

In fact, I might list a few more that work. After all, as long as it runs via LM Studio and it's an Instruct model, it should work fine with the game. Whether it will output explicit content has to be checked for each model, though.

The short answer is:

1. This is already kind of possible through the stats tab if you instruct the AI to only increase it when a slime enters the player (for example)

2. You can absolutely add your own stats! You must tell the AI to change the model's size if you wish for something like uterus size to be reflected on the 3D model, but it's doable!

The long answer, though, is that it is difficult to make stats like this. It requires a hell of a lot of instructions for the AI to remember.

I, for example, have a world or two with a stat called "Sperm inside of vagina". I think it's self-explanatory to us humans when that should change. However, the AI has a mind of its own on that topic.

For whatever reason, it randomly increases the stat during any sexual encounter, no matter what the actual action is. I have added a lot of rules to the stats prompt as well as the world settings specifying that only an orgasm can increase the stat, which has helped control it a lot, but it still does its own thing. In the latest example, it mostly behaved well before climax, only occasionally increasing the stat by a little (but whatever), but after the first one, it went absolutely haywire. With every prompt, it increased the stat by ungodly amounts, no matter what changes I made to the stats prompt.

It takes a lot of tinkering to get it into a workable state, but it is possible. That said, the suggestion is still good! It would be nice to have different ways to represent stats in-game. Maybe something like a counter for coins, entities, or really any unit that is difficult to represent with a limited stat bar.

I gave it some thought, and honestly, I don't think there is a way to force it, at least in the current state of the game. It's possible that the AI starts truncating its memory after a while and that the truncation is cutting into the prompts, since I'm not sure whether it gets the entire ruleset with every prompt. Honestly, we need an in-game switch that forces the AI to remember the rules (or constantly reminds it of them) so that stuff like this doesn't happen.

To be honest, I don't think the model necessarily needs to be an "Instruct" model anymore. The Instruct part was a necessity before 1.1.0 because the game used a JSON format to control everything, but now that the game can just use plain text, any model should do the trick. The trickiest part would be the stats, and even that is doable. I'll try using a regular model for the game and maybe update the guide so that any generic text model can be used.

Glad to hear that it works better with localhost! I doubt it was your internet speed, though; keep in mind that a lot of people use those hosted models. Even the paid ones have their fair share of work.

If you are using LM Studio at its default settings, it will automatically throw out excess memory once it nears the limit. By default, the AI throws out the middle part, keeping the start (with the world rules) and the most recent prompts in memory.

For the weird sentence endings, maybe try adding something like this to your prompt? I got this from ChatGPT, and it sounds like it should work.

> Only describe events, actions, and dialogue that are relevant to the player's current situation. Maintain an open-ended format.

As for the kobolds, something like this in the AI section of their entity entry should do the trick:

> The kobolds always address everybody in third person. Even when they talk about another of their kind, they use third person only.

As far as I can tell, the world rules are pretty important to the AI. Normally, when I specify something there, it keeps enforcing it. For example, I have a world where the player can cast hypnosis on an entity to control it however they wish. In the world rules, I stated that the effect is permanent. And lo and behold, not even my override word could undo the hypnosis! The AI's answer was pretty much confusion, and the entity remained hypnotized.

No worries! I'm glad I could help. :V

And I think I see what is going on. The AI first sees that the player's "Lust" stat can increase or decrease slowly over time, depending on the event taking place. Afterwards, you specify that the event must be sexual in some way to affect the stat.

Since the AI first sees the line saying that the stat can drift depending on the event taking place, that is most likely what it primarily goes by. Everything after that is just extra detail that, in the current order, isn't really considered by the AI.

I think something like this might fit better:

---

Player's Lust stat is a special stat that can only increase if the player is in a sexual or erotic encounter. Examples include the player masturbating or actively engaging in sex with an entity. This stat can rise by up to 25 per prompt. The maximum value of this stat cannot be raised or lowered. It is always fixed at 150 and cannot be changed.

If no sexual or otherwise erotic events are taking place, the player's Lust stat will passively decrease over time. It can decrease by at most 20 per prompt.

---
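The rules in that prompt boil down to simple arithmetic, so writing them out as code is a quick way to check that they are unambiguous before handing them to the AI. The game itself relies on the AI to apply them, not on code like this. The numbers (25, 20, 150) come from the prompt above; the minimum of 0 and the function names are assumptions.

```python
MAX_LUST = 150     # fixed maximum from the prompt
MAX_INCREASE = 25  # largest rise allowed per prompt
MAX_DECREASE = 20  # largest passive drop allowed per prompt
MIN_LUST = 0       # assumption: the stat can't go below zero


def clamp(value, low, high):
    """Limit value to the range [low, high]."""
    return max(low, min(value, high))


def next_lust(current, requested_change, erotic_event):
    """Apply one prompt's change to the Lust stat under the rules above."""
    if erotic_event:
        # Only increases are allowed, capped at +25 per prompt.
        change = clamp(requested_change, 0, MAX_INCREASE)
    else:
        # Only passive decreases are allowed, capped at -20 per prompt.
        change = clamp(requested_change, -MAX_DECREASE, 0)
    return clamp(current + change, MIN_LUST, MAX_LUST)


print(next_lust(140, 40, erotic_event=True))   # 150 (fixed maximum)
print(next_lust(50, 40, erotic_event=True))    # 75 (capped at +25)
print(next_lust(50, 10, erotic_event=False))   # 50 (no increase outside erotic events)
print(next_lust(50, -35, erotic_event=False))  # 30 (capped at -20)
```

Each of those four cases is a situation the prompt should answer clearly; if you can't tell from the wording what the AI should do in one of them, that part of the prompt is worth tightening.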

A fair warning: the AI is very finicky when it comes to managing stats. I myself have a world with a "Cum Meter" stat, which represents pretty much what you think. While I haven't loaded that world with the new game version yet, I previously kept having the issue that, even with strict instructions that only orgasms inside could raise the stat, the AI would raise it anyway at the slightest hint of a sexual encounter. ¯\_(ツ)_/¯

So yeah, give it a try and see how it works. If that fails, we might want to use a bullet list, similar to the world rules, to specify exactly how the stat works.

That's strange. What should help is instructing the AI that it can't include any choices that lead to resetting or returning to a past encounter. Try adding this to your choices prompt; the best location is probably between "...given their current stats and location" and "Write the choices in a...":

The choices cannot instruct the player to reset any current encounters or to go back to parts of an encounter that have already happened. They must instead be focused on the current encounter and anything that may be happening around the player.