Sorry, we're having LLM provider issues. Should be resolved now?

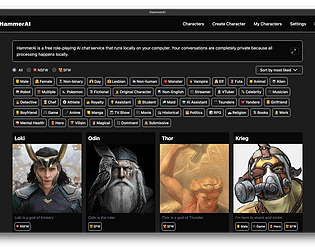

HammerAI


Recent community posts

Thank you so much! This is really so incredibly useful. I just changed the default samplers, and will look at the rest.

If you ever do learn to code, I'd be happy to hire you! Alternatively, I'd happily pay you to help improve the prompts in the app and how I set up different parts of image generation. You wouldn't need to code; we could just get on a call and look through different parts of the app together. You have so much more expertise here than I do, and I think it could really help all HammerAI users! Feel free to DM me on Discord (hammer_ai) if you're interested.

Mmm, perhaps, though I'm not sure. I'm already at about break-even (or losing some money) with HammerAI, and because I'm a solo dev building this, I can't afford to lose as much money as I think I would if I made image gen free :( Sorry about that. But we are working on local image gen (i.e. on your computer), so that might be free. Also, you can currently run ComfyUI locally and have HammerAI call that server, which is free.
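For anyone curious what "HammerAI calls your local ComfyUI server" roughly involves, here is a minimal sketch of queueing a workflow on ComfyUI's HTTP API. It assumes ComfyUI is running on its default address (`http://127.0.0.1:8188`) and that you have a workflow exported in ComfyUI's API format. This is not HammerAI's actual code; `build_prompt_request` and `queue_prompt` are hypothetical helper names for illustration.

```python
import json
import urllib.request
import uuid
from typing import Optional

# Assumption: ComfyUI is running locally on its default port.
COMFYUI_URL = "http://127.0.0.1:8188"


def build_prompt_request(workflow: dict, base_url: str = COMFYUI_URL,
                         client_id: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a POST /prompt request for a ComfyUI workflow.

    `workflow` is a graph exported from ComfyUI in API format:
    node id -> {"class_type": ..., "inputs": {...}}.
    """
    payload = {
        "prompt": workflow,
        # Any unique string works; it lets a client match progress events to itself.
        "client_id": client_id or str(uuid.uuid4()),
    }
    return urllib.request.Request(
        url=f"{base_url}/prompt",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def queue_prompt(workflow: dict, base_url: str = COMFYUI_URL) -> dict:
    """Send the workflow to the local server and return its JSON reply."""
    req = build_prompt_request(workflow, base_url)
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Everything stays on your machine, which is why this route costs nothing beyond your own GPU time. The reply from `/prompt` should include a `prompt_id` you can use to look up the finished images afterwards.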

> honestly not seeing any changes between running default ollama, and ollama with the following environmental parameters:

Hmm, I wonder if maybe the env vars are not being picked up? Tbh I wasn't quite sure how to test it. If you have a good idea then lmk!
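One way to test whether env vars are being picked up is to spawn a small probe process with exactly the environment you would hand to `ollama serve` and check what it reports. A minimal sketch follows. `OLLAMA_FLASH_ATTENTION` and `OLLAMA_KV_CACHE_TYPE` are real Ollama settings but are only examples here, since the variables from the original report aren't listed; `env_seen_by_child` is a hypothetical helper name.

```python
from __future__ import annotations

import json
import os
import subprocess
import sys

# Example variables only; swap in whatever you actually set.
EXTRA_ENV = {
    "OLLAMA_FLASH_ATTENTION": "1",
    "OLLAMA_KV_CACHE_TYPE": "q8_0",
}


def env_seen_by_child(extra_env: dict[str, str], names: list[str]) -> dict[str, str | None]:
    """Spawn a child with os.environ plus extra_env and report what it sees.

    Merging matters: passing only extra_env as `env` would replace the whole
    environment (PATH etc.) instead of adding to it.
    """
    env = {**os.environ, **extra_env}
    # The probe prints a JSON object {name: value or null} for each requested name.
    probe = ("import json, os, sys; "
             "print(json.dumps({n: os.environ.get(n) for n in sys.argv[1:]}))")
    result = subprocess.run(
        [sys.executable, "-c", probe, *names],
        env=env, capture_output=True, text=True, check=True,
    )
    return json.loads(result.stdout)


if __name__ == "__main__":
    print(env_seen_by_child(EXTRA_ENV, list(EXTRA_ENV)))
```

If the probe sees the variables but Ollama still behaves the same, one possibility worth ruling out is that the app is talking to an Ollama server that was already running (for example a system service started without those variables) rather than the one launched with the new environment.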

> Also, SD WebUI forge is kinda dead.

Yeah, that's true. Working on adding ComfyUI next! Any other local image gen tools you think I should add?

Okay interesting. I think the new update (which is in beta right now at https://github.com/hammer-ai/hammerai/releases/tag/v0.0.206) might help.

The website runs on Linux servers plus RunPod, but it uses a different codepath than the desktop app, so work on the website doesn't really carry over to the Electron app.

Glad to hear it's better! I really need to get this update out to users, but there's one known bug I have to fix before I can launch it.

I added Linux AMD ROCm support just in case I had any users there; I wanted to make sure it was awesome for them! Glad to hear that day came sooner than I expected.

Will definitely add some more models, I'm pretty behind. Any specific suggestions? Would love to hear what the best stuff is nowadays.

I will learn more about IQ quants and KV cache offloading. Is that suggestion for the local LLMs, or the cloud-hosted ones?
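As a rough guide to why KV cache placement matters for local LLMs, the cache grows linearly with context length: one K and one V tensor per layer, per token. A minimal sketch of that arithmetic follows. The model shape is an assumption (roughly Llama-3-8B-like: 32 layers, 8 KV heads under grouped-query attention, head dim 128), and 34/32 bytes per element reflects llama.cpp's q8_0 layout (32 int8 values plus one fp16 scale per block).

```python
def kv_cache_bytes(n_layers: int, n_ctx: int, n_kv_heads: int,
                   head_dim: int, bytes_per_elem: float = 2.0) -> float:
    """Approximate KV cache size in bytes.

    Factor 2 = one K and one V tensor; default 2.0 bytes/elem = fp16.
    """
    return 2 * n_layers * n_ctx * n_kv_heads * head_dim * bytes_per_elem


GIB = 2 ** 30

# Assumed Llama-3-8B-like shape at an 8K context.
fp16 = kv_cache_bytes(32, 8192, 8, 128)
q8_0 = kv_cache_bytes(32, 8192, 8, 128, bytes_per_elem=34 / 32)

print(f"fp16 cache: {fp16 / GIB:.3f} GiB")  # fp16 cache: 1.000 GiB
print(f"q8_0 cache: {q8_0 / GIB:.3f} GiB")  # q8_0 cache: 0.531 GiB
```

Doubling the context doubles these numbers, so on smaller GPUs the choice between keeping the cache in VRAM, quantizing it, or offloading it to system RAM can decide whether a model fits at all.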

Anyways, happy it's better. If you want to keep talking, I'm hammer_ai on Discord; it would be fun to chat more about finetunes to add or any other suggestions you have.

Nice! I do have this if you'd like, but no need, your nice words are enough! https://www.patreon.com/HammerAI

Thanks for the feedback! So right now our Ollama version is actually really old. Does it work better if you use this beta version? It updates Ollama and should be MUCH faster: https://github.com/hammer-ai/hammerai/releases/tag/v0.0.206
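If you want to confirm which Ollama version is actually answering (for example, before and after installing the beta), Ollama's local API exposes `GET /api/version` on its default port 11434. A minimal sketch, assuming the default address; the helper names and the minimum version in the usage note are hypothetical placeholders, not the version the beta ships.

```python
import json
import urllib.request


def parse_version(v: str) -> tuple:
    """Turn '0.5.7', 'v0.6.0' or '0.6.0-rc0' into a comparable (major, minor, patch) tuple.

    Pre-release suffixes are dropped, so '0.6.0-rc0' compares equal to '0.6.0'.
    """
    core = v.strip().lstrip("v").split("-", 1)[0]
    parts = [int(p) for p in core.split(".")]
    parts += [0] * (3 - len(parts))  # pad '0.5' -> (0, 5, 0)
    return tuple(parts[:3])


def is_at_least(version: str, minimum: str) -> bool:
    """Numeric comparison, so '0.10.0' correctly counts as newer than '0.9.9'."""
    return parse_version(version) >= parse_version(minimum)


def ollama_version(base_url: str = "http://127.0.0.1:11434") -> str:
    """Ask the running Ollama server for its version string."""
    with urllib.request.urlopen(f"{base_url}/api/version", timeout=5) as resp:
        return json.load(resp)["version"]
```

Usage would look like `is_at_least(ollama_version(), "0.5.0")`, with `"0.5.0"` standing in for whatever minimum you care about. Comparing numeric tuples instead of raw strings avoids the classic bug where `"0.10.0" < "0.9.9"` as text.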

Oh, so I just use the default Electron Forge makers: https://www.electronforge.io/config/makers/flatpak

I can look into putting it on flathub, but I don't have a linux machine, so just haven't actually tested any of the Linux apps myself. Sorry about that. Anything I need to fix with them?

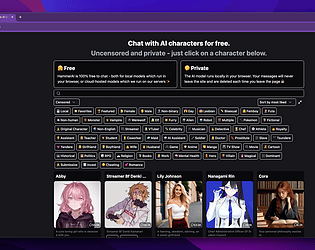

Uncensored! You can see the content policy here: https://www.hammerai.com/terms

Hi! Sorry about that, usually that's because the character you're chatting with wasn't written very well. If you try with one of these is it any better? https://www.hammerai.com/characters?tag=Featured

In terms of the paywall: it is 100% free to chat with the cloud-hosted LLM Smart Lemon Cookie 7B, or with any local LLM! But I am a solo dev building this, so I made saving chats a paid feature, sorry about that. If it makes you feel better, I spend all the money I make paying other contractors to help me build it. And I have a 100% no-questions-asked refund policy if you end up not being happy with it. Again, sorry for the issues.

Sorry, yes, there are paid options! But it is 100% free for unlimited chats with the Smart Lemon Cookie 7B cloud-hosted LLM, or with any locally hosted LLM. You do have to pay for saving chats, better cloud-hosted models, or image generation.

For some context, I'm just one person building this as a side project, and I use all the money I make to pay for GPU server rentals and to pay coders to help me out.

Sorry if the app's not for you, I totally get it. That said, if you want to try out the paid features of the app, I offer a 100% refund policy, no questions asked.

Yes, it is safe! But you don't need to take my word for it, you can also ask in the Discord, or maybe read this review from someone on Reddit? They said:

> All in all it is one of the best options for a locally installed AI chatbot to use privately. Using wireshark, iftop, and other tools I didn't notice any unnecessary calls or shady traffic. Which is awesome. However, please be aware you lose some of that privacy as you need to log in to discord to access basic docs for the app.

https://www.reddit.com/r/HammerAI/comments/1i2a9tp/60ish_day_review/

PS. I'm working on adding docs to the site to help address their privacy concerns (they don't like Discord)