Thanks for understanding, hope the key helps a little.

GRisk

Recent community posts

I just did a test and you are right. I was under the impression that anyone could message me on Patreon, but it seems that only Patrons can. The worst part is that there is no option for me to open private messages to everyone, which sucks.

Well, there is also my email in any case: griskai.yt@gmail.com

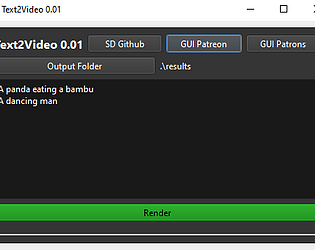

I think your question was when 0.5 would appear here? For now I don't have an answer. I will keep developing it on Patreon, and at some point it may appear here.

This license is copied from the Stable Diffusion team. This line is just about the AI model.

Images generated by the model are covered by the second line of the license. You can use the generated images as you like, no credit needed. If you have more questions about the license, you need to talk with the Stable Diffusion team.