Your answers are great, and I'm grateful for them no matter how long they are.

Your test on the timers clarified the situation. I'm actually using timers for things like jumping (interpolating between different gravities) and as an optimization (checking a condition only every 0.2 seconds, for example).
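To show what I mean by the "check every 0.2 seconds" optimization, here is a rough sketch in Python rather than in engine events. The class name `IntervalCheck` and the `dt` parameter (seconds since the last frame) are names I made up for illustration, so take it as an assumption about how this could look, not as how the standard timers work internally.

```python
class IntervalCheck:
    """Signals at most once per `interval` seconds of accumulated frame time."""

    def __init__(self, interval: float) -> None:
        self.interval = interval
        self.elapsed = 0.0

    def update(self, dt: float) -> bool:
        """Call once per frame with the frame's delta time (hypothetical `dt`)."""
        self.elapsed += dt
        if self.elapsed >= self.interval:
            # Keep the remainder instead of resetting to 0,
            # so the rhythm of the checks does not slowly drift.
            self.elapsed -= self.interval
            return True
        return False
```

In the game loop, I would call `update(dt)` every frame and run the expensive condition only when it returns `True`.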

This already alerts me about another project where musical synchronization is important: there I need to create my own timers, fed by the frame rate, to correct possible desynchronization. Until now I was entrusting this job to the standard timers.
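For the music project, this is roughly what I imagine by a timer "fed by the frame rate", again as a Python sketch. Everything here is my own assumption: the names `FrameTimer`, `max_drift` and `audio_position` are invented, and I'm assuming the engine can tell me the current playback position of the music in seconds.

```python
from typing import Optional


class FrameTimer:
    """Accumulates frame delta times and snaps back to the audio clock on drift."""

    def __init__(self, max_drift: float = 0.05) -> None:
        self.time = 0.0
        self.max_drift = max_drift  # tolerated gap (seconds) before resyncing

    def update(self, dt: float, audio_position: Optional[float] = None) -> float:
        # Advance by the time the last frame took.
        self.time += dt
        # If a playback position is available and we drifted too far, resync.
        if audio_position is not None:
            if abs(self.time - audio_position) > self.max_drift:
                self.time = audio_position
        return self.time
```

The idea is that the game logic reads `time` from this timer, and the music stays the reference whenever the two disagree by more than `max_drift`.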

About Debug mode, I still need to take a better look at it. For now, I use it only to read logs (to correct the flow of actions). It was a good reminder.

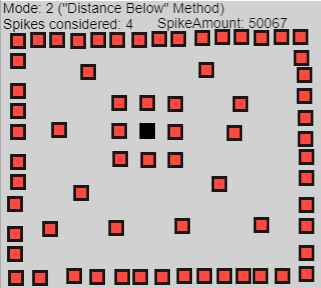

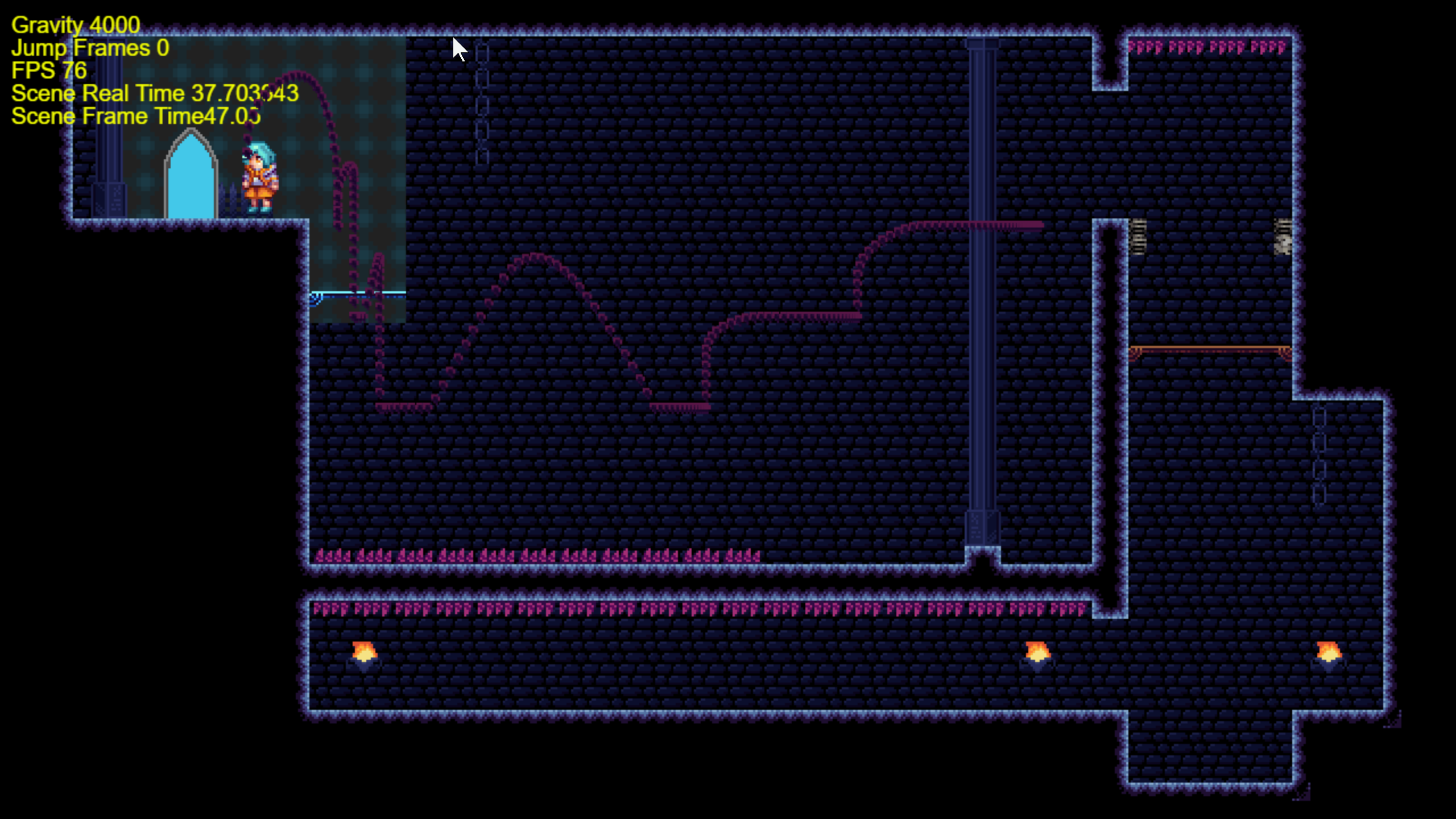

My strategy for the spikes was to select only the closest one (for this one I had to deactivate the prices, because it was generating bugs) and to turn them into tiles (they were animated sprites).

Does selecting objects with the condition "the distance between objects is less than" have a big impact on performance compared to your selection box? Anyway, your strategy looks good and I'll try to apply it. I'm wondering whether an even better optimization would be to sectorize the objects on the screen one step before checking the imaginary box. For example, I could create 4 variations of the spike object, each one placed in a specific corner (quarter) of the screen. Then, instead of checking "The X position of Spike >= Player.X() - 32" for all the spikes every so often, I would check only those in the quarter where the player currently is. But maybe it's too much work for little performance gain.
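To make the sector idea concrete, here is a small Python sketch of it. It is not engine code: `Spike`, `quadrant`, `build_sectors` and the other names are hypothetical, and I don't know how the engine implements its distance condition internally, so the `within_distance` function is only my guess at what such a check costs (a squared distance instead of two simple comparisons).

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Spike:
    x: float
    y: float


def in_box(s: Spike, px: float, py: float, half_size: float) -> bool:
    """Imaginary box check: two cheap comparisons per axis."""
    return abs(s.x - px) <= half_size and abs(s.y - py) <= half_size


def within_distance(s: Spike, px: float, py: float, radius: float) -> bool:
    """Distance check, comparing squared values to avoid a square root."""
    dx, dy = s.x - px, s.y - py
    return dx * dx + dy * dy <= radius * radius


def quadrant(x: float, y: float, width: float, height: float) -> int:
    """Quarter of the screen: 0 top-left, 1 top-right, 2 bottom-left, 3 bottom-right."""
    col = 0 if x < width / 2 else 1
    row = 0 if y < height / 2 else 1
    return row * 2 + col


def build_sectors(spikes: List[Spike], width: float, height: float) -> Dict[int, List[Spike]]:
    """Done once: sort every spike into its quarter of the screen."""
    sectors: Dict[int, List[Spike]] = {0: [], 1: [], 2: [], 3: []}
    for s in spikes:
        sectors[quadrant(s.x, s.y, width, height)].append(s)
    return sectors


def spikes_near_player(sectors: Dict[int, List[Spike]], px: float, py: float,
                       width: float, height: float, half_size: float) -> List[Spike]:
    """Box-check only the spikes that share the player's quarter."""
    candidates = sectors[quadrant(px, py, width, height)]
    return [s for s in candidates if in_box(s, px, py, half_size)]
```

One weakness I can already see: when the player stands right at the border between two quarters, spikes just across the border are skipped, so the neighboring quarter would need to be checked too. That is part of why I suspect the gain may not be worth the extra work.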

We're having a great conversation here, but it's okay if you can't continue it :)

(I wish I could contribute some useful knowledge for you too, but I don't have the knowledge for that, sorry haha)