Physics Guided Deep Learning Interpretation's itch.io page

Results

| Criteria | Rank | Score* | Raw Score |
| --- | --- | --- | --- |
| Overall | #6 | 2.598 | 3.000 |
| Generality | #6 | 2.598 | 3.000 |
| Novelty | #6 | 2.309 | 2.667 |
| Topic | #6 | 2.309 | 2.667 |
| Reproducibility | #6 | 3.175 | 3.667 |

Ranked from 3 ratings. Score is adjusted from raw score by the median number of ratings per game in the jam.

Judge feedback

Judge feedback is anonymous and shown in a random order.

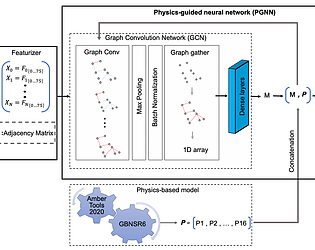

- This is a neat example of the application of a feature importance technique applied to what looks (from my non-physicist perspective) like an important problem. However, beyond the use of an XAI technique, it doesn't seem like the aim of this project is really to measure and monitor the safety of large-scale machine learning models. I'm also unclear how having access to the features in this particular application helps with safety - looking at figure 4, it seems unclear that the particular features involved give us any real insight. Nonetheless I don't mean to discourage this work, as it seems potentially interesting and valuable! Just a little off topic for this particular jam.

- Overall: The main motivation seems to be that the specific architecture the authors proposed is more likely to be interpretable and physics-based. However, the authors need to compare its interpretability against the baseline of just using a GCN, and its accuracy against the baseline of just using the physics model. Scalable oversight: the motivation (interpretability) seems to be only loosely related to scalable oversight. Novelty: the idea of combining physics models and deep models seems interesting. I’m not an expert in GCNs, so I can’t comment on this. Generality: I’m not an expert in GCNs, so I can’t comment on this. Reproducibility: seems pretty easily reproducible.

What are the full names of your participants?

Ali Risheh, Mohammad Rasouli

What is your team name?

Keley
