This jam is now over. It ran from 2023-02-10 17:00:00 to 2023-02-13 03:45:00.

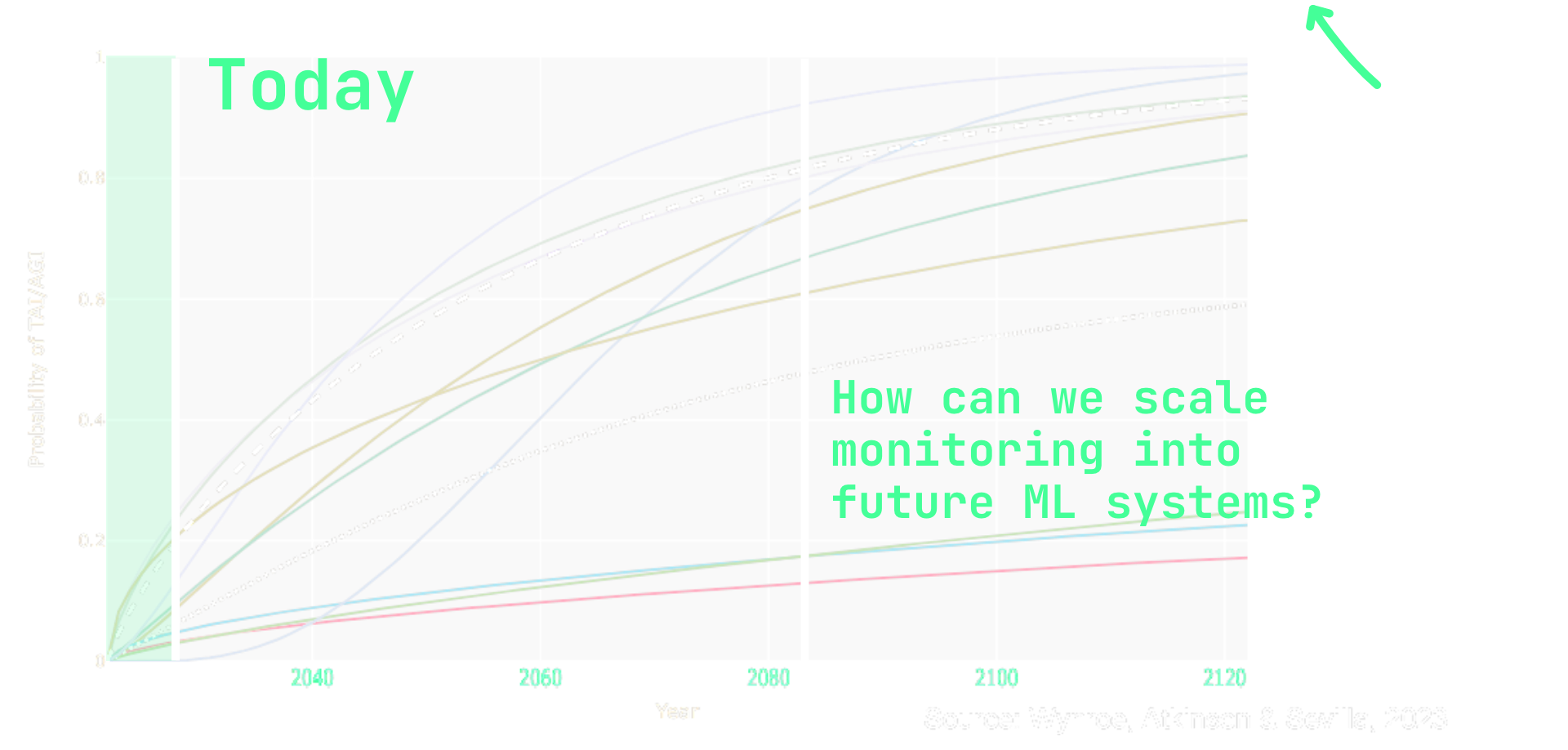

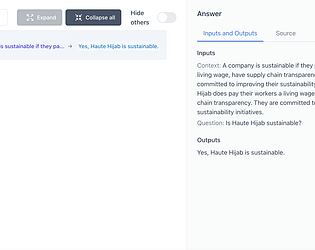

Join us for the fifth Alignment Jam, where we spend 48 hours of intense research on how to measure and monitor the safety of large-scale machine learning models. Work on safety benchmarks, models that detect faults in other models, self-monitoring systems, and much more!

🏆$2,000 on the line

Measuring and monitoring safety

To make sure large machine learning models do what we want them to do, people have to monitor their safety. But it is very hard for just one person to monitor all the outputs of ChatGPT...

The objective of this hackathon is to research scalable solutions to this problem!

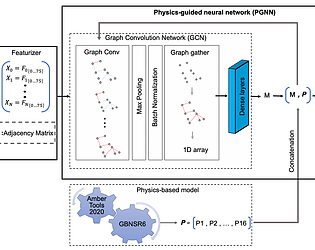

- Can we create good benchmarks that run independently of human oversight?

- Can we train AI models themselves to find faults in other models?

- Can we create ways for one human to monitor a much larger amount of data?

- Can we reduce the misgeneralization of the original model using some novel method?

These are all very interesting questions, and we're excited to see your answers during these 48 hours!

Reading group

Join the Discord above to be part of the reading group, where we read up on scalable oversight research! The current pieces are:

- Measuring Progress on Scalable Oversight for Large Language Models

- Goal Misgeneralization in Deep Reinforcement Learning

- SafeBench competition example ideas

Where can I join?

Schedule

Local groups

If you are part of a local machine learning or AI safety group, you are very welcome to set up a local in-person site to work on this hackathon together! We will have several across the world (list upcoming) and hope to increase the number of local sites. Sign up to run a jam site here.

You will work in groups of 2-6 people within our hackathon GatherTown and in the in-person event hubs.

Judging criteria

Everyone who submits a project will review each other's projects on the following criteria, from one to five stars. Informed by these reviews, the judges will then select the top 4 projects as winners!

| Criterion | Question |
| --- | --- |
| Overall | How good are your arguments for how this result informs alignment and our understanding of neural networks? How informative are the results for the field of ML and AI safety in general? |
| Scalable oversight | How informative is it for the field of benchmarks and scalable oversight? Have you come up with a new method or found completely new results? |
| Novelty | Are the results new, and are they surprising compared to what we expect? |
| Generality | Do your research results show that your hypothesis generalizes? E.g. if you expect language models to overvalue evidence in the prompt compared to evidence in their training data, do you test more than just one or two different prompts and do a proper interpretability analysis of the network? |
| Reproducibility | Are we able to easily reproduce the research, and do we expect the results to reproduce? A high score here might reflect a high Generality score and a well-documented GitHub repository that reruns all experiments. |

Inspiration

Inspiring resources for scalable oversight and ML safety:

- This lecture explains how future machine learning and AI systems might look and how we might predict emergent behaviour from large systems, something that is increasingly important in the context of scalable oversight: YouTube video link

- Watch Cambridge professor David Krueger's talk on ML safety: YouTube video link

- Watch the Center for AI Safety's Dan Hendrycks' short (10m) lecture on transparency in machine learning: YouTube video link

- Watch this lecture on Trojan neural networks, a way to study when neural networks diverge from our expectations: YouTube video link


Submissions(7)
