Deriving a Finite State Machine from First Principles

—

This is the second post in a series documenting the development of Your Big Year, a bird watching video game being made in Godot. While the first post explored the concept, today I’ll dive into the implementation and how a seemingly simple camera feature led me to fundamental programming patterns.

—

Conceptually, the system started out as binoculars. I was drawing on the real-life experience of bird watching to inform the game design, even going so far as to include a “shake” mechanic that grew stronger the longer you stayed in “binocular mode”. I also hadn’t yet worked out how to confirm a positive bird ID in a way that felt true to the actual bird watching experience (this ends up being a pretty tricky problem to solve; detective games are difficult to implement well!).

What I had initially considered was a timer that would register how long a bird stayed in frame while you looked through the binoculars. If you kept it in frame long enough, that bird got “counted” for your life list. I knew that for my AI implementation to be successful, the birds’ movement and speed would have to be very, well, birdlike, which is to say: birds are fast! They were going to be tough to keep in frame. That could be something to gamify.

But ultimately I decided this didn’t reward the parts of the experience that were supposed to be rewarding. What’s fun about actual bird watching is meeting a bird, learning its personality, observing what it gets up to in its habitat, and then IDing that bird by connecting your experience to information you find in your field guide. Using information learned in-game (or on a hike) to land on “the right answer” makes you feel clever.

This “getting a closer look” mechanic should inform the identification process, not do it for you. A more natural iteration was simply making it a camera. The camera should be able to zoom in on a bird, capture a screenshot of what’s on the screen (snap a picture), and save that screenshot to a photo album. You should have access to the album during the hike, but also after the hike, during the identification process. This keeps the challenge of trying to keep a bird in frame while taking a picture, but now, if you succeed, you’re rewarded with evidence that helps you land a correct ID.

As an added structural benefit, the camera system doesn’t need to know anything about the birds programmatically, or vice versa. The camera system is only responsible for being an authentic camera experience and producing an image for the game to use.

Provided snippets are in GDScript.
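In Godot, one way to keep that separation is for the camera to announce finished photos through a signal and let some other node (an album, say) decide what to do with them. A minimal sketch, assuming Godot 4; the `photo_taken` signal and the `snap()` and `add_photo()` names are hypothetical, not taken from the game’s code:

```gdscript
# photo_camera.gd: knows how to take pictures, knows nothing about birds.
extends Node2D

# Hypothetical signal: any node that cares about photos can connect to it.
signal photo_taken(image: Image)

func snap() -> void:
	# Grab whatever is currently rendered in this node's viewport.
	var image := get_viewport().get_texture().get_image()
	photo_taken.emit(image)

# Wiring happens outside the camera, e.g. in a level script:
#     $PhotoCamera.photo_taken.connect($PhotoAlbum.add_photo)
```

Because the camera only emits an `Image`, nothing in the camera script has to reference birds or the album.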

So now that I have a better understanding of the experience I want to create, what steps make up an authentic-feeling “photo shoot”? Even in a 2D context, I had plenty of examples of a nearly identical “shooting” mechanic to learn from in the FPS (first-person shooter) genre: you aim with your cursor, right-click to aim down sights at your cursor’s location, and left-click to shoot.

Okay. Got it.

How do I explain this to the computer?

The first instruction was simple:

```gdscript
# When the player presses the zoom button:
camera.zoom = Vector2(ZOOM_LEVEL, ZOOM_LEVEL)
camera.position = cursor_position
```
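For context, here is a minimal sketch of how the aim-and-shoot scheme could be wired to the mouse in Godot 4. It assumes right-click is held to aim (as in most shooters) and uses raw mouse buttons so no Input Map setup is needed; `enter_camera_mode()`, `exit_camera_mode()` and `take_photo()` are hypothetical stand-ins for the game’s own functions:

```gdscript
extends Node2D

var in_camera_mode := false

func _unhandled_input(event: InputEvent) -> void:
	if event is InputEventMouseButton:
		# Holding right-click "aims down sights" (zooms in); releasing lowers the camera.
		if event.button_index == MOUSE_BUTTON_RIGHT:
			if event.pressed:
				enter_camera_mode()
			else:
				exit_camera_mode()
		# Left-click only takes a picture while zoomed in.
		elif event.button_index == MOUSE_BUTTON_LEFT and event.pressed and in_camera_mode:
			take_photo()

func enter_camera_mode() -> void:
	in_camera_mode = true
	print("zoom in")  # placeholder for the zoom code

func exit_camera_mode() -> void:
	in_camera_mode = false
	print("zoom out")  # placeholder

func take_photo() -> void:
	print("snap")  # placeholder
```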

This immediate snap to the zoomed view felt jarring. Real cameras don’t work that way; there’s a moment of adjustment as you raise the camera and focus. This led me to implement a smooth transition:

```gdscript
func transition_to_camera_mode() -> void:
	# Tweeners run one after another by default; run zoom and pan together
	var tween = create_tween().set_parallel(true)
	# Smoothly zoom in
	tween.tween_property(camera, "zoom",
		Vector2(ZOOM_LEVEL, ZOOM_LEVEL), ZOOM_IN_DURATION)
	# Move camera to cursor position
	tween.tween_property(camera, "position",
		cursor_position - player.position, ZOOM_IN_DURATION)
```

The animation felt better, but playtesting revealed an unexpected issue: using the camera repeatedly with identical zoom-in and zoom-out durations induced motion sickness. This led to an important UX insight: while cameras do take time to raise and focus, lowering them is usually quick. By giving zoom-in and zoom-out different durations, and making ZOOM_OUT_DURATION nearly instantaneous, I kept the authentic feel of raising a camera while eliminating the queasiness from repeated use.
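The asymmetry can be sketched as two transitions sharing one helper. The duration values below are hypothetical (the post only says zoom-out should be nearly instantaneous), as are the `Camera2D` child name and the walk-mode defaults of `Vector2.ONE` zoom and zero offset:

```gdscript
extends Node2D

# Hypothetical values chosen for illustration.
const ZOOM_LEVEL := 3.0
const ZOOM_IN_DURATION := 0.4
const ZOOM_OUT_DURATION := 0.05

# Assumes a Camera2D child named "Camera2D".
@onready var camera: Camera2D = $Camera2D

var _tween: Tween

func _start_tween() -> Tween:
	# Stop any transition still in progress so two tweens don't fight.
	if _tween and _tween.is_valid():
		_tween.kill()
	_tween = create_tween().set_parallel(true)
	return _tween

func transition_to_camera_mode(target_offset: Vector2) -> void:
	# Raising the camera: slower, so it reads as lifting and focusing.
	var tween := _start_tween()
	tween.tween_property(camera, "zoom", Vector2(ZOOM_LEVEL, ZOOM_LEVEL), ZOOM_IN_DURATION)
	tween.tween_property(camera, "position", target_offset, ZOOM_IN_DURATION)

func transition_to_walk_mode() -> void:
	# Lowering the camera: nearly instant, back to the default view.
	var tween := _start_tween()
	tween.tween_property(camera, "zoom", Vector2.ONE, ZOOM_OUT_DURATION)
	tween.tween_property(camera, "position", Vector2.ZERO, ZOOM_OUT_DURATION)
```

Killing the previous tween matters once the two durations differ: a quick zoom-out can start while a slower zoom-in is still running.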

With smooth camera movement in place, the next step was implementing the core function: capturing and saving bird photos for later identification:

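```gdscript
func take_photo() -> void:
	# Capture the current viewport
	var image = get_viewport().get_texture().get_image()
	# Save it to a file (save_png fails if the folder doesn't exist yet)
	DirAccess.make_dir_recursive_absolute("user://photos")
	image.save_png("user://photos/bird_photo.png")
```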
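The version above overwrites `bird_photo.png` on every shot. An album needs one file per photo. Here is a minimal sketch, assuming a timestamp-plus-counter naming scheme (my choice, not necessarily the game’s) and a hypothetical `save_photo()` helper:

```gdscript
extends Node

const PHOTO_DIR := "user://photos"

# Disambiguates photos taken within the same second.
var _photo_count := 0

func save_photo(image: Image) -> String:
	# save_png() fails if the folder is missing, so create it first.
	DirAccess.make_dir_recursive_absolute(PHOTO_DIR)
	# The timestamp looks like 2024-05-01T14:03:22; colons aren't valid
	# in Windows filenames, so swap them out.
	var stamp := Time.get_datetime_string_from_system().replace(":", "-")
	_photo_count += 1
	var path := "%s/photo_%s_%03d.png" % [PHOTO_DIR, stamp, _photo_count]
	var err := image.save_png(path)
	if err != OK:
		push_error("Could not save photo to %s (error %d)" % [path, err])
		return ""
	return path
```

Returning the path, or an empty string on failure, gives the album something to index without the camera knowing how the album works.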

But real photography isn’t just point-and-click. I needed visual feedback: a flash effect, a camera sound, maybe a slight pause. And I couldn’t let players spam the photo button. The simple function grew:

```gdscript
func take_photo() -> void:
	if not can_take_photo:
		return
	# Start the cooldown immediately, so presses during the await below
	# can't slip past the check and take extra photos
	can_take_photo = false
	cooldown_timer.start()
	# Play camera effects
	camera_sound.play()
	photo_flash.show()
	# Wait for effects
	await get_tree().create_timer(0.1).timeout
	# Take the photo
	var image = get_viewport().get_texture().get_image()
```
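The cooldown only works if something sets `can_take_photo` back to `true`. Here is a minimal sketch of that wiring, assuming `cooldown_timer` is a `Timer` child node; the node name `CooldownTimer` and the `PHOTO_COOLDOWN` value are hypothetical:

```gdscript
extends Node2D

const PHOTO_COOLDOWN := 0.75  # seconds between shots; hypothetical value

var can_take_photo := true

@onready var cooldown_timer: Timer = $CooldownTimer

func _ready() -> void:
	# Fire once per start() instead of repeating.
	cooldown_timer.one_shot = true
	cooldown_timer.wait_time = PHOTO_COOLDOWN
	cooldown_timer.timeout.connect(_on_cooldown_timer_timeout)

func _on_cooldown_timer_timeout() -> void:
	# Cooldown over: the next press of the shutter is allowed again.
	can_take_photo = true
```

You can get the same result in the editor by enabling One Shot on the Timer and connecting its `timeout` signal there.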

My straightforward camera feature was becoming increasingly complex, with multiple conditions and checks governing when and how photos could be taken. Take a look at the current code:

```gdscript
func take_photo() -> void:
	# We check multiple state conditions
	# (parentheses let the condition span several lines)
	if (current_state != CameraState.CAMERA_MODE
			or not can_take_photo
			or is_processing_photo):
		return
	# Then we manually update various state flags
	is_processing_photo = true
	can_take_photo = false
	current_state = CameraState.TAKING_PHOTO
```

Every time I need to change what the camera is doing, I’m juggling multiple what-the-camera-is-doing variables. It’s like having several light switches that all need to be flipped in exactly the right order before you can even turn on a lamp. This complexity emerged because each new feature needed its own “flag” to track what’s happening:

- `current_state` for the main camera mode
- `is_transitioning` for handling animations (but only when switching modes)
- `can_take_photo` for cooldown management (except during transitions)
- `is_processing_photo` for the photo-taking sequence (unless the album is open)
- `is_album_open` for UI state (which affects everything else)

As I wrestled with managing these camera-specific flags, I started to see a pattern emerging. Instead of toggling individual flags, I realized the camera system should transition through well-defined states.
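Concretely, one enum can replace the pile of booleans, and a single table can list which moves between states are legal. Here is a minimal sketch in plain GDScript (no nodes required). `CAMERA_MODE` and `TAKING_PHOTO` come from the snippets above; the other state names and the transition table are my own assumptions. The cooldown would live inside `TAKING_PHOTO`, which only returns to `CAMERA_MODE` when the timer finishes:

```gdscript
extends RefCounted

enum CameraState { WALKING, TRANSITIONING_IN, CAMERA_MODE, TAKING_PHOTO, TRANSITIONING_OUT, ALBUM_OPEN }

# Which states each state may move to. Anything not listed is illegal.
const ALLOWED := {
	CameraState.WALKING: [CameraState.TRANSITIONING_IN, CameraState.ALBUM_OPEN],
	CameraState.TRANSITIONING_IN: [CameraState.CAMERA_MODE],
	CameraState.CAMERA_MODE: [CameraState.TAKING_PHOTO, CameraState.TRANSITIONING_OUT],
	CameraState.TAKING_PHOTO: [CameraState.CAMERA_MODE],
	CameraState.TRANSITIONING_OUT: [CameraState.WALKING],
	CameraState.ALBUM_OPEN: [CameraState.WALKING],
}

var current_state: CameraState = CameraState.WALKING

func can_transition_to(next: CameraState) -> bool:
	return next in ALLOWED[current_state]

# Returns false (and changes nothing) if the move isn't allowed.
func transition_to(next: CameraState) -> bool:
	if not can_transition_to(next):
		return false
	current_state = next
	return true
```

Questions like “can I take a photo right now?” become a single lookup instead of four flag checks.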

The most revealing sign comes from the transition functions:

```gdscript
func transition_to_camera_mode() -> void:
	if is_transitioning or is_album_open:
		return
	is_transitioning = true
	current_state = CameraState.CAMERA_MODE
	_prepare_camera_view()
	var tween = create_tween()
	# … handle zoom and movement …
	await tween.finished
	is_transitioning = false
	camera_mode_changed.emit(true)
```

I’ve naturally created functions that manage moving between states, complete with entry and exit conditions. The pattern was forming on its own out of my practical needs; I just didn’t have the formal structure to express it clearly.
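Pulling those entry and exit steps into one place could look like the sketch below. The `change_state`, `_enter_state` and `_exit_state` names and the `state_changed` signal are my own, loosely following the enter/exit actions in Nystrom’s State chapter. The `print` calls stand in for the real camera work:

```gdscript
extends Node

enum CameraState { WALKING, CAMERA_MODE, TAKING_PHOTO, ALBUM_OPEN }

signal state_changed(previous_state, new_state)

var current_state: CameraState = CameraState.WALKING

# The single place where the camera's state ever changes.
func change_state(next: CameraState) -> void:
	if next == current_state:
		return
	var previous := current_state
	_exit_state(previous)
	current_state = next
	_enter_state(next)
	state_changed.emit(previous, next)

func _exit_state(state: CameraState) -> void:
	match state:
		CameraState.CAMERA_MODE:
			print("lower camera: quick zoom out")
		CameraState.ALBUM_OPEN:
			print("hide album UI")

func _enter_state(state: CameraState) -> void:
	match state:
		CameraState.CAMERA_MODE:
			print("raise camera: zoom toward cursor")
		CameraState.TAKING_PHOTO:
			print("flash, shutter sound, capture")
		CameraState.ALBUM_OPEN:
			print("show album UI")
```

Because every change goes through `change_state`, no state can be entered without its setup running, and no state can be left without its teardown running.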

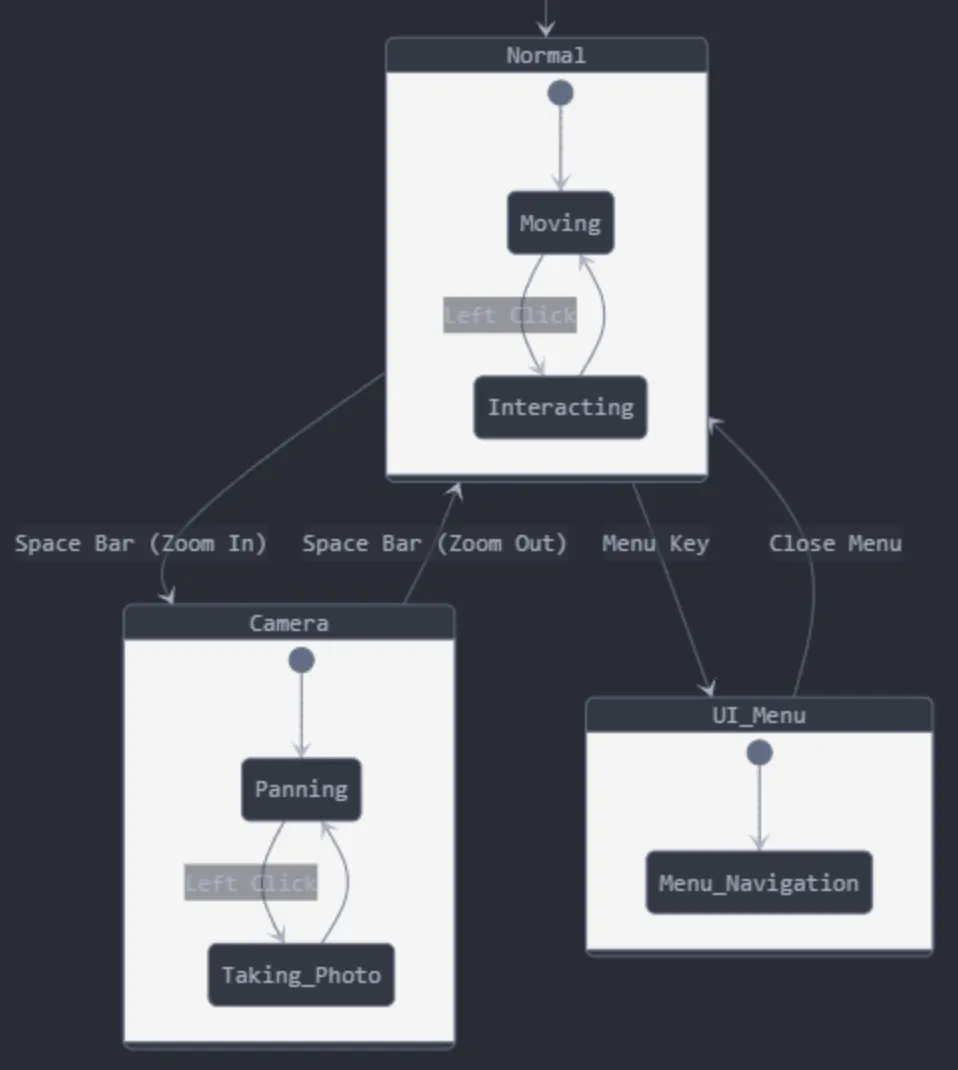

This realization changes how I see the entire system. Instead of a collection of boolean flags and if-else statements, I can envision a clear set of states, each with its own entry and exit conditions and its own interpretation of the player’s input.

This architectural choice is worth highlighting: treating UI interactions as full game states rather than separate overlays. While this might seem unconventional at first, it elegantly solves a core challenge in Your Big Year: the same controls (WASD) need to mean fundamentally different things depending on what the player is doing, whether that’s moving through the environment, panning a camera, navigating a photo album, or selecting menu options.

By modeling these as distinct states in the player’s state machine, I mirror the focused, contemplative nature of real bird watching. Just as a birder is fully engaged in walking, observing through optics, or reviewing their field notes, the player transitions cleanly between these different modes of interaction. This approach also simplifies my input handling: rather than maintaining complex logic about how to interpret WASD in different contexts, each state encapsulates its own clear interpretation of those controls.
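In Nystrom’s State pattern, each state becomes its own object, so the question “what does WASD do right now?” is answered by whichever state object is active. Here is a minimal sketch, assuming Godot 4 and four hypothetical Input Map actions (`move_left`, `move_right`, `move_up`, `move_down`) bound to WASD. The class names and speeds are illustrative, and the code that switches between states is left out:

```gdscript
extends Node2D

# Base state: same hooks everywhere, but each state reads input its own way.
class PlayerState:
	func enter(_host: Node2D) -> void:
		pass
	func exit(_host: Node2D) -> void:
		pass
	func update(_host: Node2D, _delta: float) -> void:
		pass

class WalkingState extends PlayerState:
	const SPEED := 200.0  # pixels per second; hypothetical
	func update(host: Node2D, delta: float) -> void:
		# WASD moves the birder through the environment.
		var dir := Input.get_vector("move_left", "move_right", "move_up", "move_down")
		host.position += dir * SPEED * delta

class CameraModeState extends PlayerState:
	const PAN_SPEED := 150.0  # hypothetical
	func update(host: Node2D, delta: float) -> void:
		# The same keys now pan the zoomed-in view instead of walking.
		var dir := Input.get_vector("move_left", "move_right", "move_up", "move_down")
		var cam := host.get_viewport().get_camera_2d()
		if cam:
			cam.offset += dir * PAN_SPEED * delta

class AlbumState extends PlayerState:
	func update(_host: Node2D, _delta: float) -> void:
		# A/D flip through photos: one step per key press, not per frame.
		if Input.is_action_just_pressed("move_left"):
			print("previous photo")
		elif Input.is_action_just_pressed("move_right"):
			print("next photo")

var state: PlayerState = WalkingState.new()

func change_state(next: PlayerState) -> void:
	state.exit(self)
	state = next
	state.enter(self)

func _process(delta: float) -> void:
	# Delegate: whichever state is active decides what WASD means.
	state.update(self, delta)
```

Adding a new mode means adding a class rather than another flag, and the existing states don’t have to change.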

While this pattern might not suit an action game where players need to simultaneously move and interact with UI elements, it’s particularly appropriate for a simulation of an activity where different tasks tend to be distinct and focused. The architecture emerges naturally from the experience I’m trying to create!

Article referenced: Game Programming Patterns, “State Pattern”, by Robert Nystrom
