Let's say you are making a rhythm game, something like Friday Night Funkin'.

You have a sick track ready to go; you just need to make a square slide across the screen and line up with a square hole when the beat hits.

Simple, right? How hard could that be?

Let's say there is a beat 2 seconds into the song that you want the square to be synced with.

We can read the current time from the audio player and lerp the square from its starting position to the hole's position. Since the beat is 2 seconds in, we can compute a normalized interpolation time, t, with the formula t = time / 2.

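```csharp
// Store these once (e.g. as fields set in Start()). Re-reading transform.position
// every frame would make the square lerp from wherever it currently is.
Vector3 startPos = transform.position;
Vector3 endPos = target.transform.position;

// In Update(): normalized progress toward the beat at 2 seconds.
// Vector3.Lerp clamps t to [0, 1], so the square stops at the hole.
float t = audioSource.time / 2f;
transform.position = Vector3.Lerp(startPos, endPos, t);
```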

Easy!

Actually, this is a terrible solution.

As you can see, the top box (which is using AudioSource.time) appears to be stuttering a lot. What's up with that?

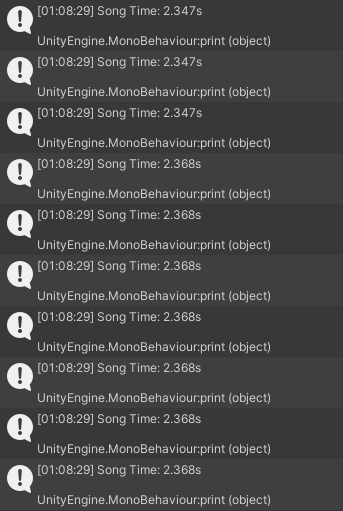

The issue with AudioSource.time is that it doesn't update every frame. Based on my testing, it updates at intervals of roughly 21 to 22 ms. There are settings in Unity you can change to shorten this interval, but Unity's own documentation says you should not mess with those settings.
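If you want to reproduce that measurement, one way is to sample the clock every frame and record how much real time passes between changes. Below is a minimal sketch in plain C# so it runs outside Unity; `UpdateIntervalProbe` and `Sample` are hypothetical names. In Unity you might call `probe.Sample(Time.realtimeSinceStartup, audioSource.time)` from `Update()`. Because the clock is only observed once per frame, the measured intervals are quantized to frame times.

```csharp
using System.Collections.Generic;

// Hypothetical helper: measures how much real time passes between changes
// of a coarse clock such as AudioSource.time. Resolution is limited to the
// frame rate, since the clock can only be observed once per frame.
public class UpdateIntervalProbe
{
    bool hasSample, hasChange;
    double lastAudioTime, lastChangeRealTime;

    // Real-time gaps (seconds) between consecutive observed clock changes.
    public List<double> Intervals { get; } = new List<double>();

    // Call once per frame with the current real time and the reported audio time.
    public void Sample(double realTime, double audioTime)
    {
        if (!hasSample)
        {
            // First observation: nothing to compare against yet.
            lastAudioTime = audioTime;
            hasSample = true;
            return;
        }
        if (audioTime == lastAudioTime) return; // The clock didn't tick this frame.

        // Only measure between two real changes, never from the first observation.
        if (hasChange) Intervals.Add(realTime - lastChangeRealTime);

        lastAudioTime = audioTime;
        lastChangeRealTime = realTime;
        hasChange = true;
    }
}
```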

What are some other options?

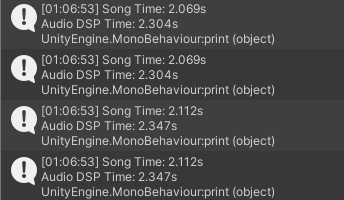

A lot of other places will tell you to use AudioSettings.dspTime. All you need to do is store the DSP time when the song starts, then subtract it from the current DSP time whenever you need to know how far into the song you are.
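As a minimal sketch of that approach (the class and member names are hypothetical), the clock source is injected as a delegate so the logic runs as plain C#. In Unity you would presumably construct it with `() => AudioSettings.dspTime` and call `MarkStart()` next to `audioSource.Play()`.

```csharp
using System;

// Hypothetical wrapper: song position = current DSP time - DSP time at start.
public class DspSongClock
{
    readonly Func<double> now; // e.g. () => AudioSettings.dspTime in Unity
    double startTime;

    public DspSongClock(Func<double> now) => this.now = now;

    // Call right next to audioSource.Play() to remember the start time.
    public void MarkStart() => startTime = now();

    // Seconds elapsed since MarkStart(), according to the supplied clock.
    public double SongTime => now() - startTime;
}
```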

The problem with this approach is that the moment you call AudioSource.Play() and the moment the audio actually starts are different. It also has the exact same issue of only updating every ~21 ms.

What if we use Stopwatch?

C#'s Stopwatch class has insane precision, down to the microsecond. That's far better than the ~21 ms we get from the audio player.

It has the same problem of not starting at exactly the same time as the audio, but that isn't the biggest issue. You can add an offset to the reported time so the two line up, but Stopwatch time and song time are measured in different ways. Song time is based on the number of samples read into the audio buffer, while Stopwatch counts the system's timer ticks. Over time, the two clocks drift apart, and the result is horribly out of sync.
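Here is a rough sketch of the Stopwatch version with a manual offset. `System.Diagnostics.Stopwatch`, `Restart()` and `Elapsed` are standard .NET; the class name and the `offsetSeconds` parameter are hypothetical, and the offset value is something you would tune by ear (for example, a negative value if the audio starts after `Play()` is called).

```csharp
using System.Diagnostics;

// Hypothetical Stopwatch-based song clock with a hand-tuned start offset.
public class StopwatchSongClock
{
    readonly Stopwatch stopwatch = new Stopwatch();
    readonly double offsetSeconds; // added to the elapsed time; tuned manually

    public StopwatchSongClock(double offsetSeconds) => this.offsetSeconds = offsetSeconds;

    // Call right next to audioSource.Play().
    public void Start() => stopwatch.Restart();

    // High-resolution elapsed time plus offset. This counts system timer ticks,
    // not audio samples, so it slowly drifts away from the actual playback position.
    public double SongTime => stopwatch.Elapsed.TotalSeconds + offsetSeconds;
}
```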

In my tests, Stopwatch drifted away from the playback time by upwards of 30 ms over the span of 50 seconds. If you only use short songs and have generous timing windows, this might not be that bad.
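To put that number in perspective, here is the rate mismatch it implies. The extension to a 3-minute song assumes the drift keeps growing linearly, which is an assumption rather than something measured here:

$$
\frac{30\ \text{ms}}{50\ \text{s}} = 0.6\ \text{ms/s} = 600\ \text{ppm}
\qquad\Rightarrow\qquad
0.6\ \text{ms/s} \times 180\ \text{s} \approx 108\ \text{ms}
$$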

We can do better.

My solution to the interpolation problem is to keep track of time independently of AudioSource.time. Then, whenever AudioSource.time updates, I store the variance: the difference between the reported time and my smoothed time.

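```csharp
// Run whenever AudioSource.time changes (realTime = audioSource.time).
variance = realTime - smoothedTime;

// A variance greater than 30 ms is very bad and too large to smooth out.
// We just take the L: snap to the reported time and accept one visible jitter.
if (Mathf.Abs(variance) > 0.03f)
{
    smoothedTime = realTime;
    variance = 0;
}
```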

We can't apply the variance instantly. That would skip time, making any animations based on the smoothed time inconsistent and ugly. The way I've gotten around this is to adjust the smoothed time gradually, a little each frame, so that the synchronization is virtually imperceptible.

```csharp
const float CLOSE_DRIFT_SPEED = 0.05f; // 50 ms of correction per second.
const float FAR_DRIFT_SPEED = 0.1f;    // 100 ms of correction per second.

// Runs every frame: advance the smoothed clock by the frame time.
smoothedTime += Time.deltaTime;

// Use the faster correction once the error reaches 10 ms.
float speed = Mathf.Abs(variance) < 0.01f ? CLOSE_DRIFT_SPEED : FAR_DRIFT_SPEED;
float maxStep = Time.deltaTime * speed;

// Move toward the reported time, but never past it (avoids overshooting
// and oscillating around it when the remaining variance is tiny).
float correction = Mathf.Clamp(variance, -maxStep, maxStep);

variance -= correction;
smoothedTime += correction;
```
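For reference, here is the same idea gathered into one self-contained class with the Unity types stripped out, so it runs as plain C#. It uses the thresholds from the snippets above (snap beyond 30 ms, 10 ms close/far split, 50 and 100 ms of correction per second). The class and method names are hypothetical, and this is a restatement for illustration, not the exact code from the repository linked below. In Unity you would call `Tick(Time.deltaTime)` every `Update()` and `OnReportedTime(audioSource.time)` whenever `audioSource.time` differs from the last value you saw.

```csharp
using System;

// Hypothetical plain-C# version of the smoothed song clock.
public class SmoothedSongClock
{
    const double SnapThreshold = 0.03;   // Beyond 30 ms, jump instead of smoothing.
    const double CloseThreshold = 0.01;  // Below 10 ms, use the slower correction.
    const double CloseDriftSpeed = 0.05; // 50 ms of correction per second.
    const double FarDriftSpeed = 0.1;    // 100 ms of correction per second.

    double variance; // Reported time minus smoothed time, still to be corrected.

    public double SmoothedTime { get; private set; }

    // Call when the coarse clock (e.g. AudioSource.time) reports a new value.
    public void OnReportedTime(double reportedTime)
    {
        variance = reportedTime - SmoothedTime;
        if (Math.Abs(variance) > SnapThreshold)
        {
            SmoothedTime = reportedTime;
            variance = 0;
        }
    }

    // Call once per frame with the frame's delta time in seconds.
    public void Tick(double deltaTime)
    {
        SmoothedTime += deltaTime;

        double speed = Math.Abs(variance) < CloseThreshold ? CloseDriftSpeed : FarDriftSpeed;
        double maxStep = deltaTime * speed;

        // Nudge toward the reported time without overshooting it.
        double correction = Math.Clamp(variance, -maxStep, maxStep);
        variance -= correction;
        SmoothedTime += correction;
    }
}
```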

This is what the time drift looks like using my method.

It works surprisingly well! Look how consistent the time drift is!

You must be thinking, "This isn't consistent at all! How is this any better?" Well, I've been calling AudioSource.time the real time, but that isn't actually true. Like I said earlier, Unity keeps track of the playback position using the number of samples sent to the audio buffer. That would be reasonably accurate if samples were sent at a consistent rate.

This is what Unity is reporting the change in time to be whenever AudioSource.time is updated.

This is how much time actually passed between the AudioSource.time updates.

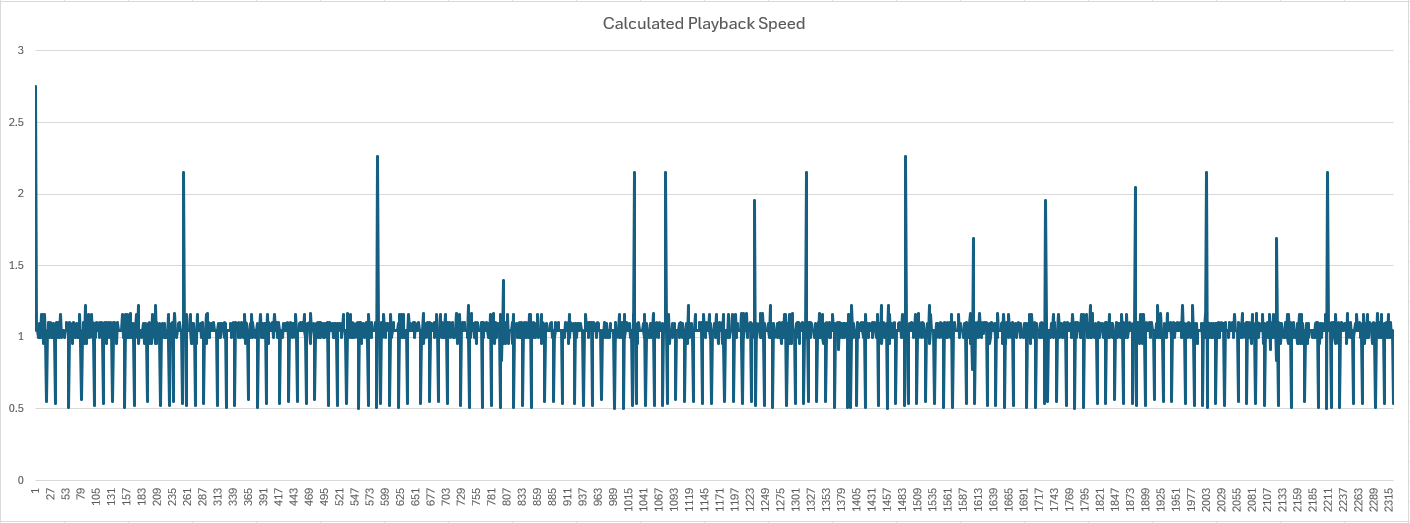

If we calculated the playback speed using the formula (reportedDelta / actualDelta), the playback speed graph would look like this.
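Here is a small sketch of that calculation from logged data. `ApparentSpeed.Compute` is a hypothetical helper: it takes the reported audio time and the real time observed at each AudioSource.time update and returns reportedDelta / actualDelta for every interval.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: apparent playback speed between consecutive updates.
public static class ApparentSpeed
{
    // reported[i] = audio time at the i-th update; actual[i] = real time it was observed.
    public static List<double> Compute(IReadOnlyList<double> reported, IReadOnlyList<double> actual)
    {
        if (reported.Count != actual.Count)
            throw new ArgumentException("Both lists need one entry per update.");

        var speeds = new List<double>();
        for (int i = 1; i < reported.Count; i++)
        {
            double reportedDelta = reported[i] - reported[i - 1];
            double actualDelta = actual[i] - actual[i - 1];
            speeds.Add(reportedDelta / actualDelta); // 1.0 means normal speed
        }
        return speeds;
    }
}
```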

When you listen to the audio, it doesn't randomly slow down to 0.5x and speed up to 2x, so the actual playback speed clearly isn't fluctuating as much as the graph suggests.

This is what I think is actually happening (I don't know for a fact; this is pure conjecture).

When the audio system finishes the current buffer (1024 samples by default) and moves on to the next one (the circular buffer holds 4 of these groups of samples by default), Unity begins processing the next group of samples. Sometimes it processes them very quickly; sometimes it takes a while.

Either way, when it finishes the samples and puts them in the circular buffer, it increments AudioSource.timeSamples by 1024 samples (AudioSource.time is calculated as timeSamples / AudioClip.frequency, if you were curious).

This means the actual elapsed time could be 10 ms or 40 ms, but since AudioSource.timeSamples only increased by 1024, Unity thinks only ~21 ms has passed. This shows up as the smoothed time's variance jumping up and down.
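Plugging in numbers shows why the speed graph swings so wildly. Assuming both the clip and the output run at 48 kHz (an assumption; at 44.1 kHz the step would be about 23.2 ms), every 1024-sample update reports the same step, and dividing it by the 10 ms and 40 ms examples lands close to the 2x and 0.5x extremes:

$$
\Delta t_{\text{reported}} = \frac{1024}{48\,000\ \text{Hz}} \approx 21.3\ \text{ms},
\qquad
\frac{21.3\ \text{ms}}{10\ \text{ms}} \approx 2.1\times,
\qquad
\frac{21.3\ \text{ms}}{40\ \text{ms}} \approx 0.53\times
$$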

My method of interpolating the playback position isn't affected as much by this variation in sample processing speed, so it more accurately represents the current playback position in the audio.

There are some caveats with my code.

Here's the link to the code I used to test everything. It also contains the raw data I used to create the graphs you see above.

Audio-Sync-Tutorial/Assets/Scripts/Conductor.cs at master · jcub1011/Audio-Sync-Tutorial (github.com)

I greatly appreciate you reading my post, and I wish you the best of luck on your future projects!


So, I effectively reverse-engineered a linear regression. This blog post explains it better than I could: https://rhythmquestgame.com/devlog/04.htm
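For the curious, here is a minimal, generic sketch of what a regression-based clock could look like. It is not the code from the linked post or from the repository above: it keeps the last N pairs of (real time, reported audio time), fits reportedAudio ≈ intercept + slope × realTime by ordinary least squares, and evaluates the line at the current real time. `RegressionSongClock` and the default window size of 32 are hypothetical choices.

```csharp
using System.Collections.Generic;

// Hypothetical sketch: estimate playback position from a least-squares line
// through recent (realTime, reportedAudioTime) pairs.
public class RegressionSongClock
{
    readonly int capacity;
    readonly Queue<(double real, double audio)> samples = new Queue<(double, double)>();

    public RegressionSongClock(int capacity = 32) => this.capacity = capacity;

    // Call whenever the coarse audio clock reports a new value.
    public void AddSample(double realTime, double audioTime)
    {
        samples.Enqueue((realTime, audioTime));
        if (samples.Count > capacity) samples.Dequeue(); // keep a sliding window
    }

    // Predicted audio time at `realTime`. With no spread in real time
    // (e.g. a single sample), assume normal 1x playback speed.
    public double Estimate(double realTime)
    {
        if (samples.Count == 0) return 0;

        double n = samples.Count, sumX = 0, sumY = 0;
        foreach (var (x, y) in samples) { sumX += x; sumY += y; }
        double meanX = sumX / n, meanY = sumY / n;

        double covXY = 0, varX = 0;
        foreach (var (x, y) in samples)
        {
            covXY += (x - meanX) * (y - meanY);
            varX += (x - meanX) * (x - meanX);
        }

        double slope = varX > 0 ? covXY / varX : 1.0;
        return meanY + slope * (realTime - meanX); // the fitted line passes through the means
    }
}
```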