I wanted to talk a little about my approach to unit testing, and the small framework I've written to help me write and run tests.

I am not an expert in unit testing. I don't even give it that much priority, to be honest. I mean, sure, I recognize its usefulness in many circumstances, but I do feel that often when I come across unit test advocates, they can be way more passionate about tests than I personally feel is reasonable. Admittedly, my problem domain and my projects are relatively tiny - so my solution reflects that.

So, my approach and attitude are light and pragmatic: get the most obvious benefits from unit tests, only in the scenarios where they make the most sense, and don't worry about the rest. If you feel more strongly about testing than I do, this is not the right solution for you - but luckily there are a lot of unit test solutions to choose from, most of which do a lot more than mine.

The code for my framework, testfw.h, is written in C (but can be compiled as C++ too), and comes in the form of an stb-style single-file header-only library (which, it is worth noting, is not at all the same thing as a C++ template-based header library). Single-file libraries like these are my favorite way of packaging and reusing code, and I have structured all of my hobby development around them. There is not a lot of code, only 440 lines - but then again, it also doesn't do very much, as we will see as we go on.

```
-------------------------------------------------------------------------------
Language                     files          blank        comment           code
-------------------------------------------------------------------------------
C/C++ Header                     1             91             50            440
-------------------------------------------------------------------------------
```

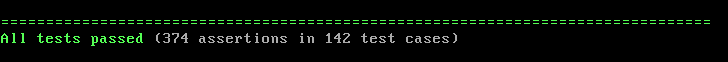

I want to start by looking at the resulting output when running tests, both successful ones and failing ones, so we get a feel for what testfw.h looks like in action. For these examples, I will use tests I wrote for my string library cstr.h. When running the tests, and they all pass, this is the output you get:

I modelled the format on some other unit test framework we were using at work at the time - I think it might have been Catch2. Pretty-printing the results is actually the main thing that testfw.h is used for. I think it clearly shows that tests did run and were successful, without being too verbose.

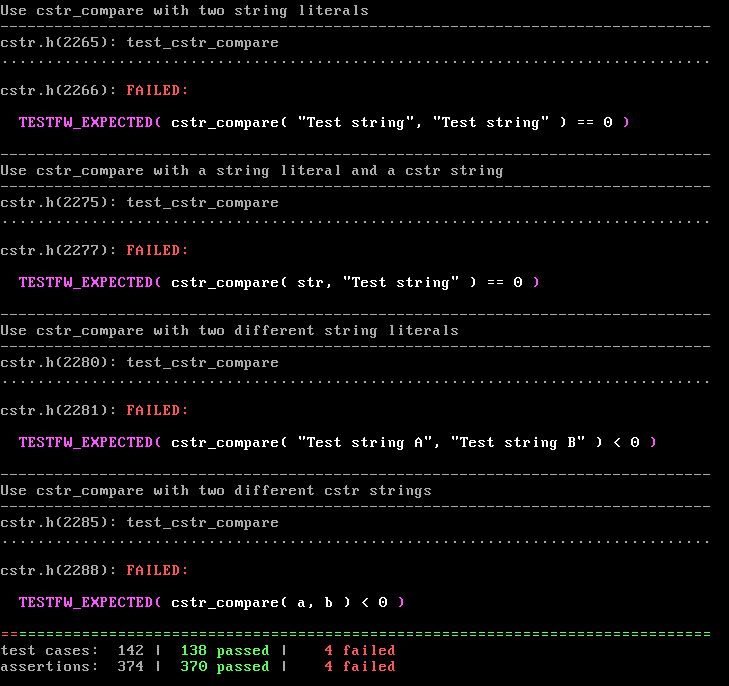

If we intentionally introduce a bug in the cstr lib, maybe in comparing strings, output might look like this, with a few failing tests:

I guess there's always going to be a balance between printing enough information and not printing enough. As we can see, it prints the description of the test on the first line. On the next line is the filename and line number of the start of the test, followed by the name of the function it is implemented in. Next comes the filename and line number where the failing check was triggered - this section will be repeated if there are multiple checks failing for the same test. For each failed check, it displays the code that triggered the fail.

At the end, it prints a summary of how many test cases and checks passed and failed. The bar of equal signs gets a red segment at the start, proportional to the share of tests that failed.
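Sizing that red segment is simple arithmetic. Below is a minimal sketch of one way to do it; the function names, the bar width parameter and the rounding rule (round up, so a single failure is always visible) are assumptions for illustration, not taken from testfw.h. The red part is drawn with `#` here, since coloring console text is platform-specific.

```c
#include <assert.h>
#include <stdio.h>

/* Hypothetical helper: how many of the `width` characters in the summary
   bar should be red, given the number of failed and total tests.
   Rounds up, so one failure out of many still shows at least one char. */
int red_segment_length( int failed, int total, int width ) {
    assert( failed >= 0 && total >= 0 && failed <= total && width > 0 );
    if( total == 0 || failed == 0 ) return 0;
    return ( failed * width + total - 1 ) / total; /* ceil( failed * width / total ) */
}

/* Prints the bar, using '#' for the part a real console would color red. */
void print_summary_bar( int failed, int total, int width ) {
    int red = red_segment_length( failed, total, width );
    for( int i = 0; i < width; ++i ) {
        putchar( i < red ? '#' : '=' );
    }
    putchar( '\n' );
}
```

With 1 failure out of 10 tests and an 80-character bar, the first 8 characters would be red; with 1 failure out of 1000, rounding up still gives 1.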

Worth noting is that we are making use of C pre-processor macros to get most of the above information without any need to do extensive markup of the code. The implementation of the first failing test in the last run looks like this:

```c
void test_cstr_compare( void ) {
    TESTFW_TEST_BEGIN( "Use cstr_compare with two string literals" );
    TESTFW_EXPECTED( cstr_compare( "Test string", "Test string" ) == 0 );
    TESTFW_TEST_END();
}
```
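To make the macro trick concrete, here is a hedged sketch of how such macros can gather the report details. It is a hypothetical mini framework (all `MINI_` and `mini_` names are made up), not testfw.h's actual implementation: `__FILE__`, `__LINE__` and `__func__` expand where the macro is used, and the `#` operator turns the checked expression into a string literal.

```c
#include <stdio.h>

/* Hypothetical mini framework, NOT testfw.h's real code: it only shows how
   the preprocessor supplies report details with no extra markup. */
static const char* mini_desc;  /* description passed to MINI_TEST_BEGIN */
static const char* mini_file;  /* file where the current test starts */
static int mini_line;          /* line where the current test starts */
static const char* mini_func;  /* function the current test lives in */
static int mini_test_failed;   /* has the current test failed yet? */
static int mini_failed_tests;
static int mini_failed_checks;

/* __FILE__, __LINE__ and __func__ expand at the point of use,
   so they describe the test function, not this framework code. */
#define MINI_TEST_BEGIN( desc ) \
    ( mini_desc = ( desc ), mini_file = __FILE__, mini_line = __LINE__, \
      mini_func = __func__, mini_test_failed = 0 )

/* #expr stringifies the checked expression for the report. */
#define MINI_EXPECTED( expr ) \
    ( ( expr ) ? (void) 0 : mini_report_failure( #expr, __FILE__, __LINE__ ) )

static void mini_report_failure( const char* expr, const char* file, int line ) {
    if( !mini_test_failed ) {
        /* First failed check in this test: print the test header once. */
        printf( "FAILED: %s\n  %s(%d): %s\n", mini_desc, mini_file, mini_line, mini_func );
        mini_test_failed = 1;
        ++mini_failed_tests;
    }
    /* One entry per failed check: its location and its source text. */
    printf( "  %s(%d): %s\n", file, line, expr );
    ++mini_failed_checks;
}

/* Example test: the second check fails and is reported with its location. */
void test_basic_math( void ) {
    MINI_TEST_BEGIN( "Failing at basic math" );
    MINI_EXPECTED( 1 + 1 == 2 );
    MINI_EXPECTED( 1 == 0 );
}
```

Calling `test_basic_math()` prints the test description, where the test starts and which function it is in, then the location and text of the failing check `1 == 0`.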

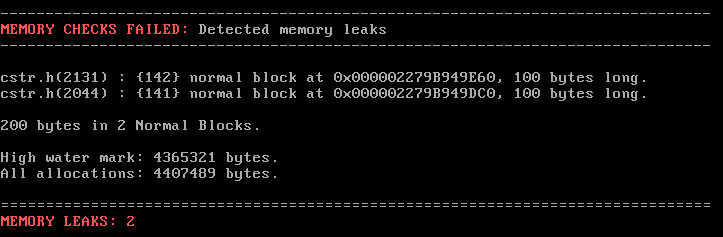

There are two additional features I want to point out. The first is that testfw.h will report memory leaks. It might look like this if I add a couple of stray 100-byte allocations that I don't clean up:

This only works on windows, and is using the built in msvc debug heap, which means it is transparent and automatic - you do not need to instrument your code, and it doesn't matter if the memory is allocated with "malloc" in C or with "new" in C++. In the report, we get the filename and line number where the allocation was done, which is usually all you need to track down a leak. It also gives an allocation number, and that can be used to add a break point when that allocation happens, if you want to use the interactive debugger to investigate. It also gives you the total memory use, which is probably not that useful, but might be interesting at times.
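As a rough illustration of what the MSVC debug heap offers, here is a minimal sketch that checkpoints the heap around a single test and dumps anything left behind. It uses real CRT calls from `<crtdbg.h>` (`_CrtMemCheckpoint`, `_CrtMemDifference`, `_CrtMemDumpAllObjectsSince`), but the wrapper `run_with_leak_check` is hypothetical and not necessarily how testfw.h does it. The debug heap is only active in debug CRT builds (`/MTd` or `/MDd`, which define `_DEBUG`), and file/line information for `malloc` only appears if `_CRTDBG_MAP_ALLOC` is defined before including `<stdlib.h>` and `<crtdbg.h>`. On other compilers the sketch simply runs the test.

```c
#include <stdlib.h>

#if defined( _MSC_VER ) && defined( _DEBUG )
    #include <crtdbg.h>
#endif

/* Hypothetical wrapper: runs one test and asks the MSVC debug heap whether
   it left any blocks allocated. Returns 1 if a leak was found, else 0. */
int run_with_leak_check( void (*test)( void ) ) {
#if defined( _MSC_VER ) && defined( _DEBUG )
    _CrtMemState before, after, diff;
    int leaked;
    /* Send debug-heap reports to stdout instead of the debugger output. */
    _CrtSetReportMode( _CRT_WARN, _CRTDBG_MODE_FILE );
    _CrtSetReportFile( _CRT_WARN, _CRTDBG_FILE_STDOUT );
    _CrtMemCheckpoint( &before );
    test();
    _CrtMemCheckpoint( &after );
    leaked = _CrtMemDifference( &diff, &before, &after );
    if( leaked ) {
        /* Lists each block still allocated: allocation number and size,
           plus file/line when _CRTDBG_MAP_ALLOC was used. */
        _CrtMemDumpAllObjectsSince( &before );
        _CrtMemDumpStatistics( &diff );
    }
    return leaked ? 1 : 0;
#else
    /* No debug heap here (other compiler or release CRT): just run it. */
    test();
    return 0;
#endif
}

/* Example test that cleans up after itself. */
void test_frees_everything( void ) {
    void* p = malloc( 100 );
    free( p );
}
```

The allocation number from such a dump can be passed to `_CrtSetBreakAlloc` so the debugger stops when that allocation happens on the next run.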

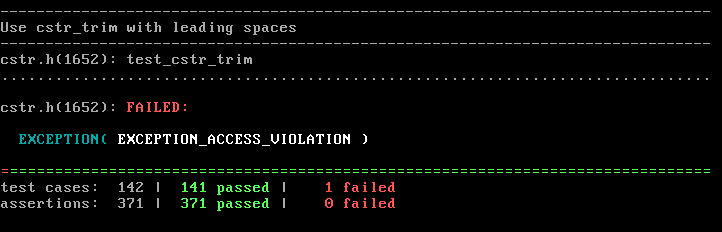

The other feature is that system exceptions are caught when running tests. This is not about C++ exceptions (testfw.h is a C lib), it is about things like detecting access violations, stack overflows and divisions by zero. This is also a windows-only feature, using the SEH (structured exception handling) feature in windows/msvc. If I put in some intentional dereferencing of NULL pointers in the tests, I might get output like this:

The structure is the same as for other errors, but we only get the line number for the start of the test, not for the specific line that caused the exception. In practice that's usually not a problem, as it tells you enough to know where to set a breakpoint for debugging.
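For readers who haven't used SEH, here is a minimal sketch of guarding a test with `__try`/`__except` and `GetExceptionCode()`, which are real MSVC/Windows features. The helpers `run_guarded`, `exception_name` and `empty_test` are hypothetical and not testfw.h's actual code. It also shows why only the start of the test is known: the handler learns *what* went wrong, not which line of the test did it. On non-MSVC compilers the sketch just calls the test unguarded.

```c
#include <stdio.h>

#ifdef _MSC_VER
    #include <windows.h>
#endif

/* Hypothetical helper: runs a test under structured exception handling
   (MSVC only). Returns 0 if the test completed, else the SEH code. */
unsigned long run_guarded( void (*test)( void ) ) {
#ifdef _MSC_VER
    unsigned long code = 0;
    __try {
        test();
    } __except( EXCEPTION_EXECUTE_HANDLER ) {
        /* GetExceptionCode may be called inside the handler block.
           Fully recovering from a stack overflow would also need
           _resetstkoflw(); that is omitted in this sketch. */
        code = GetExceptionCode();
    }
    return code;
#else
    /* No SEH on this compiler: a crash here takes the runner down. */
    test();
    return 0;
#endif
}

/* Maps the exception codes mentioned above to readable names. */
const char* exception_name( unsigned long code ) {
#ifdef _MSC_VER
    switch( code ) {
        case EXCEPTION_ACCESS_VIOLATION:   return "access violation";
        case EXCEPTION_STACK_OVERFLOW:     return "stack overflow";
        case EXCEPTION_INT_DIVIDE_BY_ZERO: return "integer divide by zero";
    }
#endif
    return code ? "unknown exception" : "none";
}

/* Example test that completes normally. */
void empty_test( void ) {
}
```

A runner would print the test's description and starting location together with `exception_name( code )` whenever `run_guarded` returns nonzero.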

To implement tests using testfw.h, you would start with something like this:

```c
#define TESTFW_IMPLEMENTATION
#include "testfw.h"

int main() {
    TESTFW_INIT();

    TESTFW_TEST_BEGIN( "Failing at basic math" );
    TESTFW_EXPECTED( 1 == 0 );
    TESTFW_TEST_END();

    return TESTFW_SUMMARY();
}
```

(If you are not familiar with stb-style single-file header-only C libs: the define at the very top makes testfw.h provide both the declarations and the implementation. The idea is that you define TESTFW_IMPLEMENTATION in exactly one .c file, the one where you want the implementation compiled, and just include the .h without the define everywhere else.)
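The stb-style layout itself can be sketched in a few lines. Below is a hypothetical `mylib.h` (the name and `mylib_add` are made up for illustration) showing one common way such a header is structured: a declarations part that every includer sees, and an implementation part compiled only where the `..._IMPLEMENTATION` macro is defined. To keep the demo in one file, the define sits at the top; in a real project it would go in the one .c file, right before `#include "mylib.h"`.

```c
/* Demo only: stands in for the single .c file that opts in to the
   implementation by defining this before including the header. */
#define MYLIB_IMPLEMENTATION

/* ---- Declarations: visible to every file that includes mylib.h ---- */
#ifndef mylib_h
#define mylib_h

int mylib_add( int a, int b );

#endif /* mylib_h */

/* ---- Implementation: compiled only where MYLIB_IMPLEMENTATION is set ---- */
#ifdef MYLIB_IMPLEMENTATION
#undef MYLIB_IMPLEMENTATION /* including the header again won't redefine */

int mylib_add( int a, int b ) {
    return a + b;
}

#endif /* MYLIB_IMPLEMENTATION */
```

Every other file just does `#include "mylib.h"` and gets only the declaration, so the definition ends up in exactly one object file and the linker sees no duplicates.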

Before anything else, we need to call TESTFW_INIT(), which sets up some global state. At the very end, we call TESTFW_SUMMARY(), which prints a summary of all the tests we've run - it's what produces the output we've been looking at. TESTFW_SUMMARY returns the number of failed tests, and I pass that on as the return value from main, so that 0 means all tests passed. That way, if the tests are run as part of a build script, the script can inspect the exit code to see whether they succeeded.
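One caveat if the same tests are ever built and run on a POSIX system: a parent process only sees the low 8 bits of the exit status, so exactly 256 failed tests would read as 0, i.e. success. (Windows exit codes are 32-bit, so this doesn't arise there.) A minimal sketch of a clamp, using a hypothetical helper name:

```c
#include <assert.h>

/* Hypothetical helper: turns a failure count into an exit code a build
   script can trust. POSIX shells only see status & 0xFF, so clamp to 255
   instead of letting 256 failures wrap around to 0 ("success"). */
int exit_code_from_failures( int failed_tests ) {
    assert( failed_tests >= 0 );
    return failed_tests > 255 ? 255 : failed_tests;
}
```

In the test program, the last line of main would then become `return exit_code_from_failures( TESTFW_SUMMARY() );`.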

In between TESTFW_INIT and TESTFW_SUMMARY you put all your tests. I've put a trivial one here, directly in main, but what I would normally do is group related tests into functions, and call all those functions from main. The tests for cstr.h look something like this:

```c
int main() {
    TESTFW_INIT();

    test_cstr();
    test_cstr_n();
    test_cstr_len();
    test_cstr_cat();
    test_cstr_format();
    test_cstr_trim();
    test_cstr_ltrim();
    test_cstr_rtrim();
    test_cstr_left();
    test_cstr_right();
    test_cstr_mid();
    test_cstr_upper();
    test_cstr_lower();
    test_cstr_int();
    test_cstr_float();
    test_cstr_starts();
    test_cstr_ends();
    test_cstr_is_equal();
    test_cstr_compare();
    test_cstr_compare_nocase();
    test_cstr_find();
    test_cstr_hash();
    test_cstr_tokenize();

    return TESTFW_SUMMARY();
}
```

I know many test frameworks have mechanisms that let you declare tests, which the framework then picks up and runs automatically. I don't do anything like that. Listing the tests explicitly like this makes more sense to me. It also means I don't need any particular functionality for selectively disabling tests - I just comment out the call to a test to disable it.

When I'm using testfw.h for my own single header libraries, such as cstr.h, I am experimenting with making my unit tests part of the same file as the rest of the code. So my cstr.h file will have a section at the end, surrounded by an #ifdef CSTR_RUN_TESTS define, that implements all the tests and the "main" function. To run the tests, I will do something like this:

```
cl /Tc cstr.h /DCSTR_IMPLEMENTATION /DCSTR_RUN_TESTS /MTd
```

The /Tc switch makes the compiler treat the single header file as a C source file, and the two defines give us both the implementation part and the part implementing the tests. The /MTd flag selects the debug runtime, which is needed for the memory leak detection to work. Then I can just run the resulting cstr.exe to execute the tests.

I like that this lets me keep everything for a library in a single file, without needing a separate cstr_tests.c. But if you prefer a separate .c file, that's of course possible too.

The testfw.h file can be found here: testfw.h

and some example tests can be seen at the end of cstr.h, which is here: cstr.h
