Welcome to our blog post! In this post, we'll discuss the challenges we encountered while designing enemy movement for our game and the solutions we implemented to keep enemies moving in sync with the music the player chooses.

One of the biggest hurdles we faced during development was finding a suitable movement pattern for enemies. Initially, enemies moved in straight lines at constant speeds. However, this approach didn't fit our goal of creating an immersive audio-reactive experience in which players can enjoy their own music while playing.

Beat Detection and Song Analysis: We first tried to develop our own beat detection system, but ran into difficulties getting it to work reliably. After exploring various options, we decided to use "RhythmTool" from the Unity Asset Store (https://assetstore.unity.com/packages/tools/audio/rhythmtool-15679). Its robust beat detection proved crucial for synchronizing enemy behavior with the music.

We wanted a flexible enemy behavior system that could adapt to different difficulty levels and to whatever songs players prefer. With RhythmTool integrated into the game, we could determine the beats per minute (BPM) of the selected song, which gave us the essential data for driving enemy behavior.
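RhythmTool performs the beat detection itself, and we don't reproduce its API here. As a rough illustration of how a BPM value follows from beat data, the sketch below assumes a beat tracker has already produced a list of beat timestamps in seconds; `estimate_bpm` and `beat_interval` are hypothetical helpers, not part of RhythmTool. It uses the median gap between consecutive beats, which tolerates an occasional missed or spurious beat better than the mean would.

```python
from statistics import median


def estimate_bpm(beat_times: list[float]) -> float:
    """Estimate tempo from beat timestamps (seconds) via the median inter-beat interval."""
    if len(beat_times) < 2:
        raise ValueError("need at least two beats to estimate tempo")
    intervals = [b - a for a, b in zip(beat_times, beat_times[1:])]
    return 60.0 / median(intervals)


def beat_interval(bpm: float) -> float:
    """Seconds between consecutive beats at the given tempo."""
    return 60.0 / bpm


if __name__ == "__main__":
    # Hypothetical beat timestamps with a little jitter around 0.5 s spacing.
    beats = [0.0, 0.5, 1.0, 1.5, 2.02, 2.5]
    bpm = estimate_bpm(beats)
    print(f"{bpm:.1f} BPM, {beat_interval(bpm):.3f} s per beat")  # 120.0 BPM, 0.500 s per beat
```

The seconds-per-beat value is what later timing decisions can build on, for example scheduling an enemy to arrive a whole number of beats after it spawns.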

To guarantee that enemies behaved consistently across multiple playthroughs of the same song, we implemented a mechanism that generates a seed value from the notes played. This seed was used to select the points on the checkpoint planes that enemies would traverse. As a result, although enemy movement appeared random, it was identical every time the same song was played.
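The post doesn't specify how the seed is computed, so the following is only a minimal sketch of the idea under our own assumptions: the note data is a list of onset times in seconds, each checkpoint plane is an axis-aligned rectangle at a fixed depth, and the names `CheckpointPlane`, `seed_from_notes`, and `pick_waypoints` are hypothetical. The onsets are hashed with SHA-256 rather than Python's built-in `hash()`, whose output for strings and bytes changes between runs. In the game itself, the same idea would map onto the engine's own seeded random number generator.

```python
import hashlib
import random
import struct
from dataclasses import dataclass


@dataclass(frozen=True)
class CheckpointPlane:
    """Hypothetical axis-aligned rectangle at depth z that an enemy passes through."""
    center_x: float
    center_y: float
    z: float
    width: float
    height: float


def seed_from_notes(note_times: list[float]) -> int:
    """Derive a stable 64-bit seed from note onset times given in seconds."""
    digest = hashlib.sha256()
    for t in note_times:
        # Round to whole milliseconds so tiny float differences don't change the seed.
        digest.update(struct.pack("<q", round(t * 1000)))
    return int.from_bytes(digest.digest()[:8], "little")


def pick_waypoints(seed: int, enemy_index: int,
                   planes: list[CheckpointPlane]) -> list[tuple[float, float, float]]:
    """Pick one point on each plane; the same seed and index always give the same points."""
    # String seeds are hashed deterministically by random.Random, unlike built-in hash().
    rng = random.Random(f"{seed}:{enemy_index}")
    points = []
    for p in planes:
        x = p.center_x + rng.uniform(-p.width / 2, p.width / 2)
        y = p.center_y + rng.uniform(-p.height / 2, p.height / 2)
        points.append((x, y, p.z))
    return points


if __name__ == "__main__":
    notes = [0.52, 1.04, 1.30, 1.56, 2.08]  # hypothetical note onsets (seconds)
    planes = [
        CheckpointPlane(0.0, 0.0, 15.0, 10.0, 6.0),
        CheckpointPlane(0.0, 1.0, 7.5, 6.0, 4.0),
    ]
    seed = seed_from_notes(notes)
    for enemy in range(3):
        print(enemy, pick_waypoints(seed, enemy, planes))
```

Giving each enemy its own generator, keyed by song seed and enemy index, keeps one enemy's path independent of how many other enemies were spawned before it.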

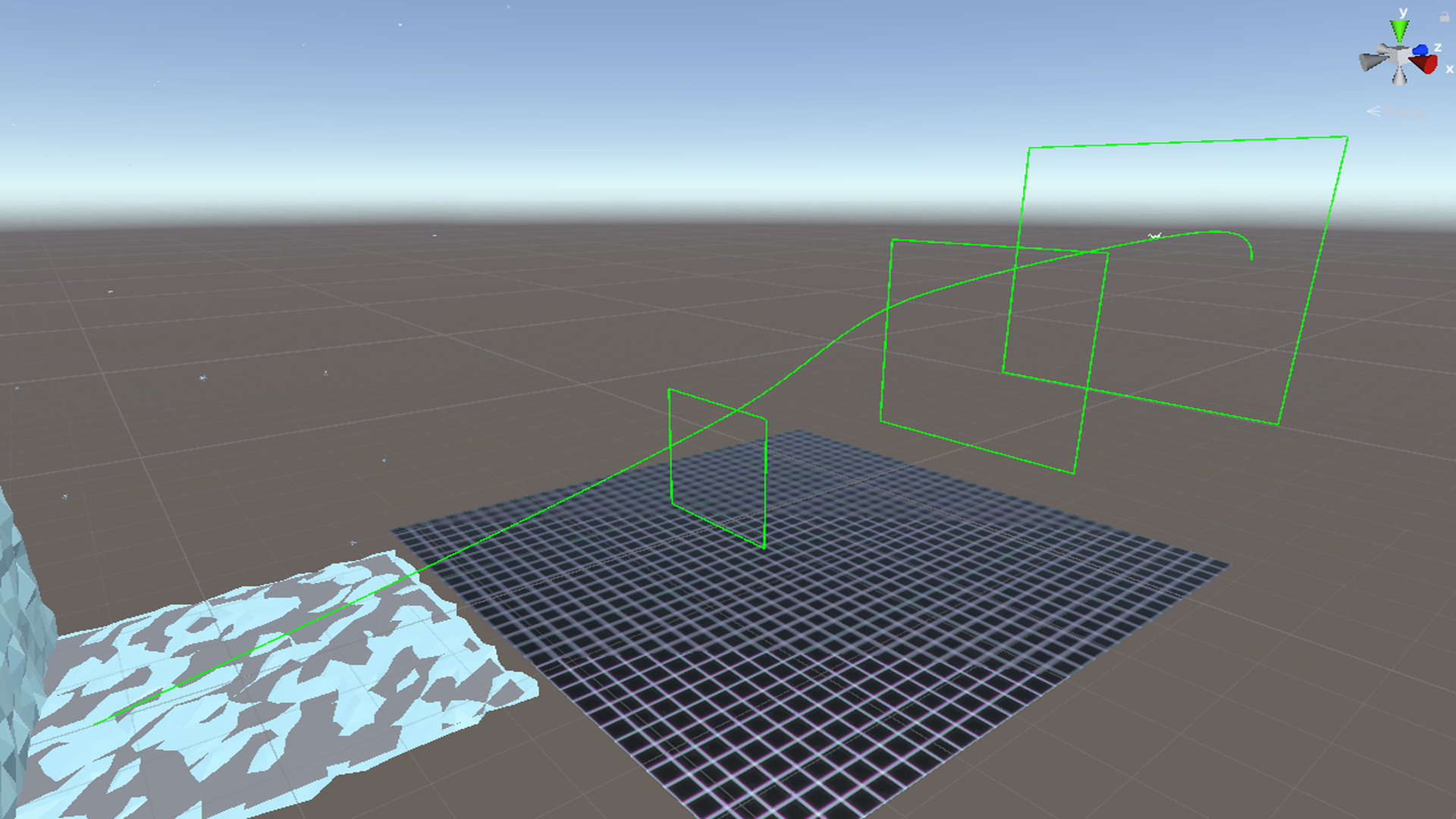

Visualization of enemy pathing

Because enemies followed varied paths rather than straight lines, accurately estimating how long each one would take to reach its destination was a challenge. To address this, we approximated the length of each path and dynamically adjusted the enemy's speed accordingly. This ensured that enemies reached the player at the intended moment in the music, keeping gameplay and music synchronized.
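A minimal sketch of the speed adjustment, assuming each path is a polyline through its checkpoint waypoints and that the target arrival time (for example, a beat timestamp) is already known. `polyline_length`, `required_speed`, `step_along`, and `simulate` are hypothetical names. Because a sum of straight segments only approximates a curved path, the sketch recomputes the speed every frame from the remaining distance and remaining time, so estimation errors shrink as the enemy nears its target instead of accumulating.

```python
import math

Vec3 = tuple[float, float, float]


def polyline_length(points: list[Vec3]) -> float:
    """Approximate a path's length as the sum of straight segments between points."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def required_speed(pos: Vec3, waypoints: list[Vec3], now: float, arrival: float) -> float:
    """Speed that covers the remaining path exactly by the target arrival time."""
    time_left = arrival - now
    if time_left <= 0:
        return math.inf  # already late: cover the rest of the path immediately
    return polyline_length([pos, *waypoints]) / time_left


def step_along(pos: Vec3, waypoints: list[Vec3], distance: float) -> tuple[Vec3, list[Vec3]]:
    """Advance `distance` units along the path, dropping waypoints as they are reached."""
    remaining = list(waypoints)
    while remaining and distance > 0:
        seg = math.dist(pos, remaining[0])
        if seg <= distance:
            distance -= seg
            pos = remaining.pop(0)
        else:
            t = distance / seg
            pos = tuple(p + (w - p) * t for p, w in zip(pos, remaining[0]))
            distance = 0.0
    return pos, remaining


def simulate(start: Vec3, waypoints: list[Vec3], arrival: float, dt: float = 1 / 60) -> float:
    """Run a fixed-timestep loop, re-deriving speed every frame; return the finish time."""
    pos, path, now = start, list(waypoints), 0.0
    while path:
        speed = required_speed(pos, path, now, arrival)
        pos, path = step_along(pos, path, speed * dt)
        now += dt
    return now


if __name__ == "__main__":
    seconds_per_beat = 60.0 / 120.0  # 120 BPM
    arrival = 8 * seconds_per_beat   # reach the player on beat 8
    path = [(3.0, 1.0, 14.0), (-2.0, 0.5, 7.0), (0.0, 0.0, 0.0)]
    finish = simulate((0.0, 0.0, 20.0), path, arrival)
    print(f"finished at {finish:.3f} s, target {arrival:.3f} s")
```

With a 60 Hz fixed timestep, the loop finishes within one frame of the target beat.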

In conclusion, developing the enemy AI for our audio-reactive game was an exciting and challenging journey. By integrating RhythmTool and implementing seed-based checkpoint selection, we achieved our goal of creating a synchronized and immersive experience for players.

We're incredibly satisfied with the results and the positive impact our enhanced enemy AI behavior has had on gameplay and musical synchronization. We hope this post has provided valuable insights into our development process and inspired fellow game developers facing similar challenges.

Thank you for reading! We appreciate your support. Christoph❤️
