This jam is now over. It ran from 2023-04-14 17:00:00 to 2023-04-17 05:00:00.

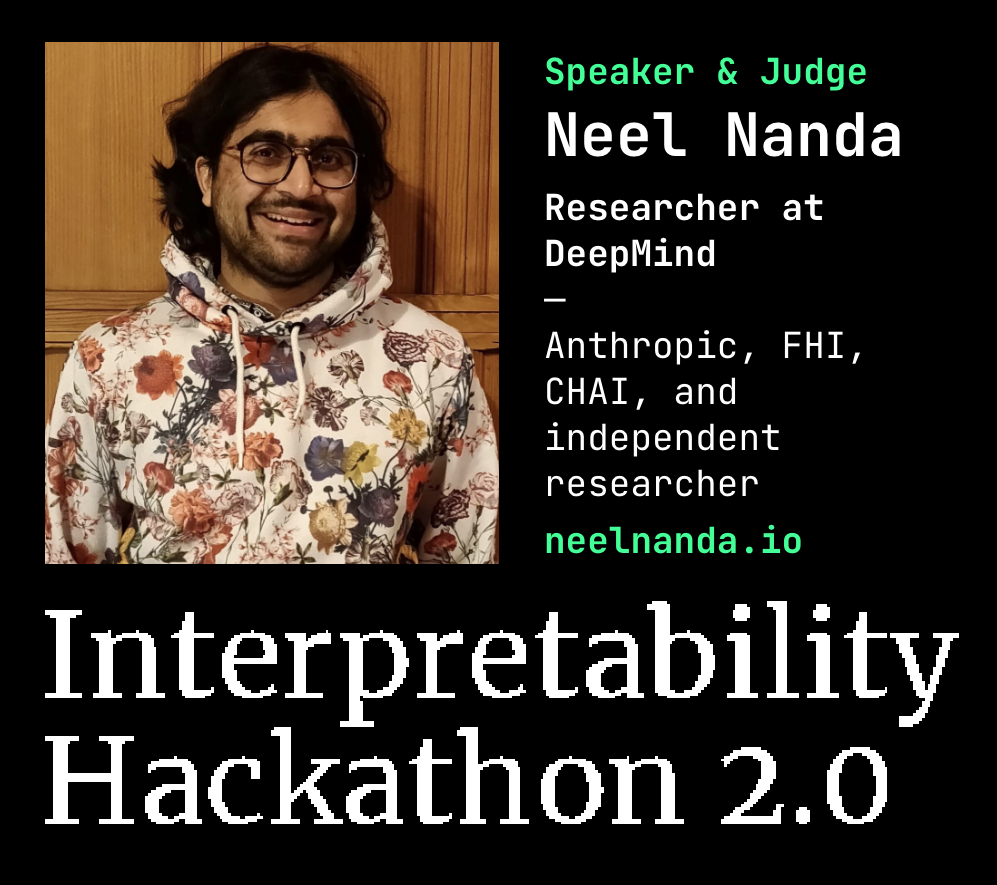

🧑‍🔬 Join us for this month's alignment jam! See all the starter resources here to engage with Neel Nanda's existing content.

Join this AI safety hackathon to find new perspectives on the "brains" of AI!

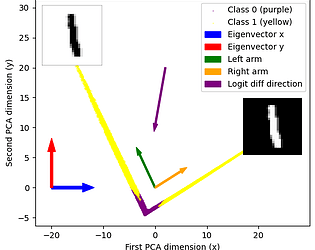

48 hours of intense research in interpretability, the modern neuroscience of AI.

We focus on interpreting the innards of neural networks using methods from mechanistic interpretability! With the starter Colab code, you should be able to quickly gain technical insight into the process.

We provide you with the best starter templates that you can work from so you can focus on creating interesting research instead of browsing Stack Overflow. You're also very welcome to check out some of the ideas already posted!

Schedule

Follow the calendar to stay up-to-date with the schedule! It kicks off on Friday with a keynote talk by Neel Nanda, which is live-streamed and recorded, and ends Sunday night.

Jam Sites

If you are part of a local machine learning or AI safety group, you are very welcome to set up a local in-person site to work together with people on this hackathon! We will have several across the world and hope to increase the number of local sites.

How to participate

Create an account on this website (itch.io) and click participate. We will assume that you are going to participate, so please cancel if you won't be part of the hackathon.

Instructions

You will work on research ideas you generate during the hackathon and you can find more inspiration below.

Submission

Everyone will help rate the submissions together on a set of criteria that we ask everyone to follow. This will happen over a 2-week period and will be open to everyone!

Each submission will be evaluated on the criteria below:

| Criterion | Description |
|---|---|
| ML Safety | How good are your arguments for how this result informs the long-term alignment and understanding of neural networks? How informative are the results for the field of ML and AI safety in general? |
| Interpretability | How informative is it in the field of interpretability? Have you come up with a new method or found revolutionary results? |
| Novelty | Have the results not been seen before and are they surprising compared to what we expect? |
| Generality | Do your research results show a generalization of your hypothesis? E.g. if you expect language models to overvalue evidence in the prompt compared to evidence in their training data, do you test more than just one or two different prompts and do proper interpretability analysis of the network? |
| Reproducibility | Are we able to easily reproduce the research and do we expect the results to reproduce? A high score here might come from a high Generality score and a well-documented GitHub repository that reruns all experiments. |

Inspiration

We have many ideas available for inspiration on the aisi.ai Interpretability Hackathon ideas list. A lot of interpretability research is available on distill.pub, transformer circuits, and Anthropic's research page.

Introductions to mechanistic interpretability

- Neel's Quickstart Guide to Mechanistic Interpretability

- A video walkthrough of A Mathematical Framework for Transformer Circuits.

- The Transformer Circuits YouTube series

- An annotated list of good interpretability papers, along with summaries and takes on what to focus on.

- The introduction to the TransformerLens library

Digestible research

- Opinions on Interpretable Machine Learning and 70 Summaries of Recent Papers summarizes a long list of papers that are useful for your interpretability projects.

- Distill publication on visualizing neural network weights

- Andrej Karpathy's "Understanding what convnets learn"

- Looking inside a neural net

- 12 toy language models designed to be easier to interpret, in the style of A Mathematical Framework for Transformer Circuits: 1-, 2-, 3- and 4-layer models, each in three variants: attention-only, with GeLU activations, and with SoLU activations (an activation designed to make the model's neurons more interpretable, see https://transformer-circuits.pub/2022/solu/index.html). These aren't well documented yet, but are available in TransformerLens.

- Anthropic Twitter thread going through some language model results
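The toy-model list above mentions SoLU as an alternative to GeLU. As a rough illustration of how the two differ, here is a minimal pure-Python sketch that assumes the definition SoLU(x) = x * softmax(x) from the linked SoLU write-up. It leaves out the LayerNorm that the write-up applies after SoLU. The function names and example values are made up for illustration and are not part of TransformerLens.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def solu(xs):
    """SoLU(x) = x * softmax(x), computed across one layer's pre-activations.

    Assumption: this omits the LayerNorm applied after SoLU in the write-up.
    """
    return [x * p for x, p in zip(xs, softmax(xs))]


def gelu(xs):
    """Exact GeLU: x * Phi(x), where Phi is the standard normal CDF."""
    return [0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0))) for x in xs]


# Hypothetical pre-activations for four neurons in one MLP layer.
pre_activations = [4.0, 1.0, 0.5, -1.0]

print("SoLU:", [round(v, 3) for v in solu(pre_activations)])
print("GeLU:", [round(v, 3) for v in gelu(pre_activations)])
```

Unlike GeLU, which acts on each neuron independently, SoLU couples the neurons through the softmax, so the largest pre-activation keeps most of its value while smaller ones are pushed toward zero.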

Below is a talk by Esben on a principled introduction to interpretability for safety:
