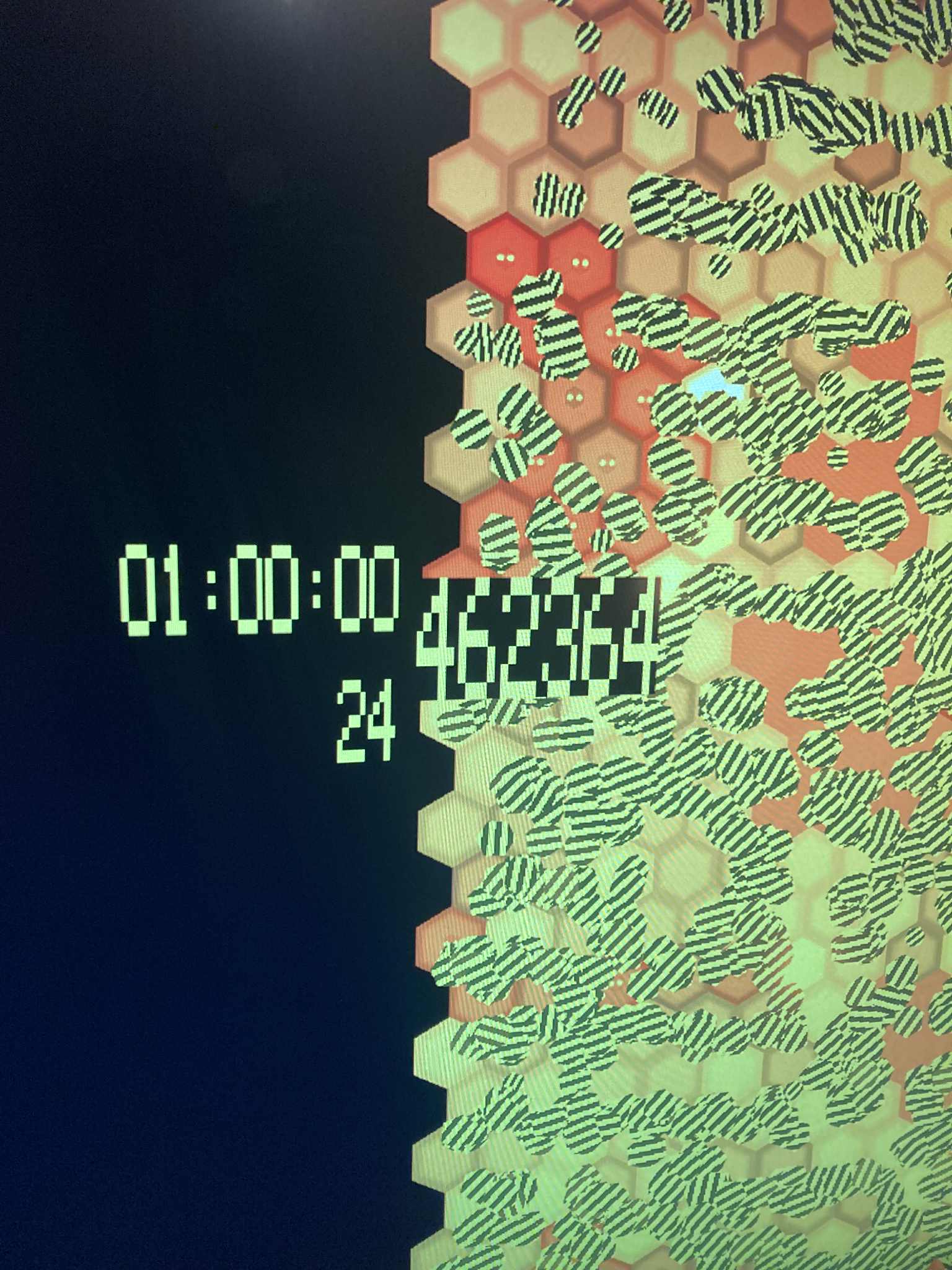

What if there was something that could unlock the power of compute shaders without taking 10 years of development from an 8-person team? Does anyone else have experience with AI agents and compute shaders? What ideas do you have for using them? I think sims are a perfect match. For example, can you imagine how awesome pretty much anything from Oxygen Not Included to Dwarf Fortress, or even SimCity/Cities: Skylines, would be with the power of compute shaders?

Let's talk about this, because it's like replacing a go-kart motor (CPU) with a jet engine (GPU). I think compute shaders in sim games are long overdue, and the tech is here now to do it. Does anyone have any thoughts, questions, or anything else to keep the conversation going?