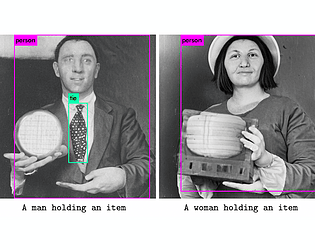

Measuring Gender Bias in Text-to-Image Models using Object Detection

Results

| Criteria | Rank | Score\* | Raw Score |
|---|---|---|---|
| Overall | #13 | 4.000 | 4.000 |
| Generality | #26 | 3.500 | 3.500 |
| Topic | #35 | 3.000 | 3.000 |
| Novelty | #41 | 2.500 | 2.500 |

Ranked from 2 ratings. \*Score is adjusted from the raw score by the median number of ratings per game in the jam.

Judge feedback

Judge feedback is anonymous.

- This is quite an interesting experiment on T2I bias. Despite the slight relevance to AI governance, it's one of the better technical works from the ideathon. On a completely unrelated note, the YOLOv3 paper is quite possibly the best paper ever ;-) The great part seems to be its generality due to the automatic generation of images, and there might be a way to combine this with both text and images as well. The best part would be to directly get the "bias score" of the model out, something policymakers would probably be happy to see be very low (or high, depending on the design).

What are the full names of your participants?

Harvey Mannering

Which case is this for?

Custom case

Which jam site are you at?

Online
